5 Reproducible Research Techniques - Data Training

5.1 Writing Good Data Management Plans

5.1.1 Learning Objectives

In this lesson, you will learn:

- Why create data management plans

- The major components of data management plans

- Tools that can help create a data management plan

- Features and functionality of the DMPTool

5.1.2 When to Plan: The Data Life Cycle

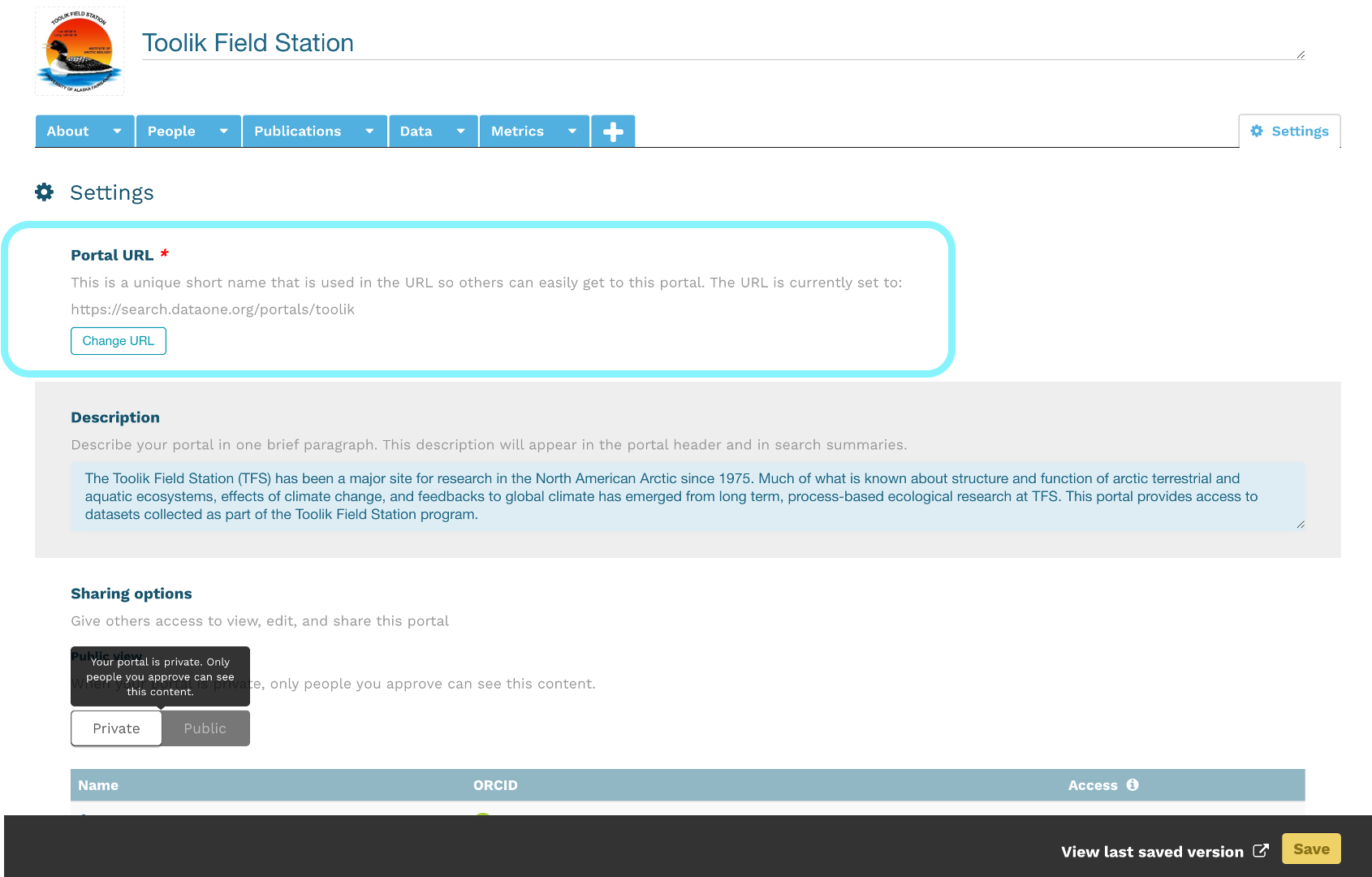

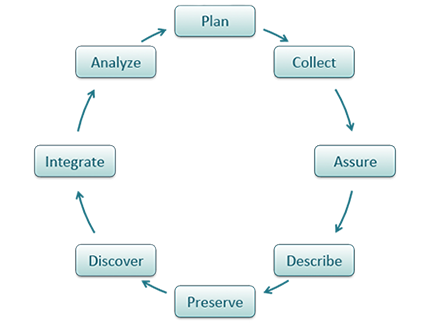

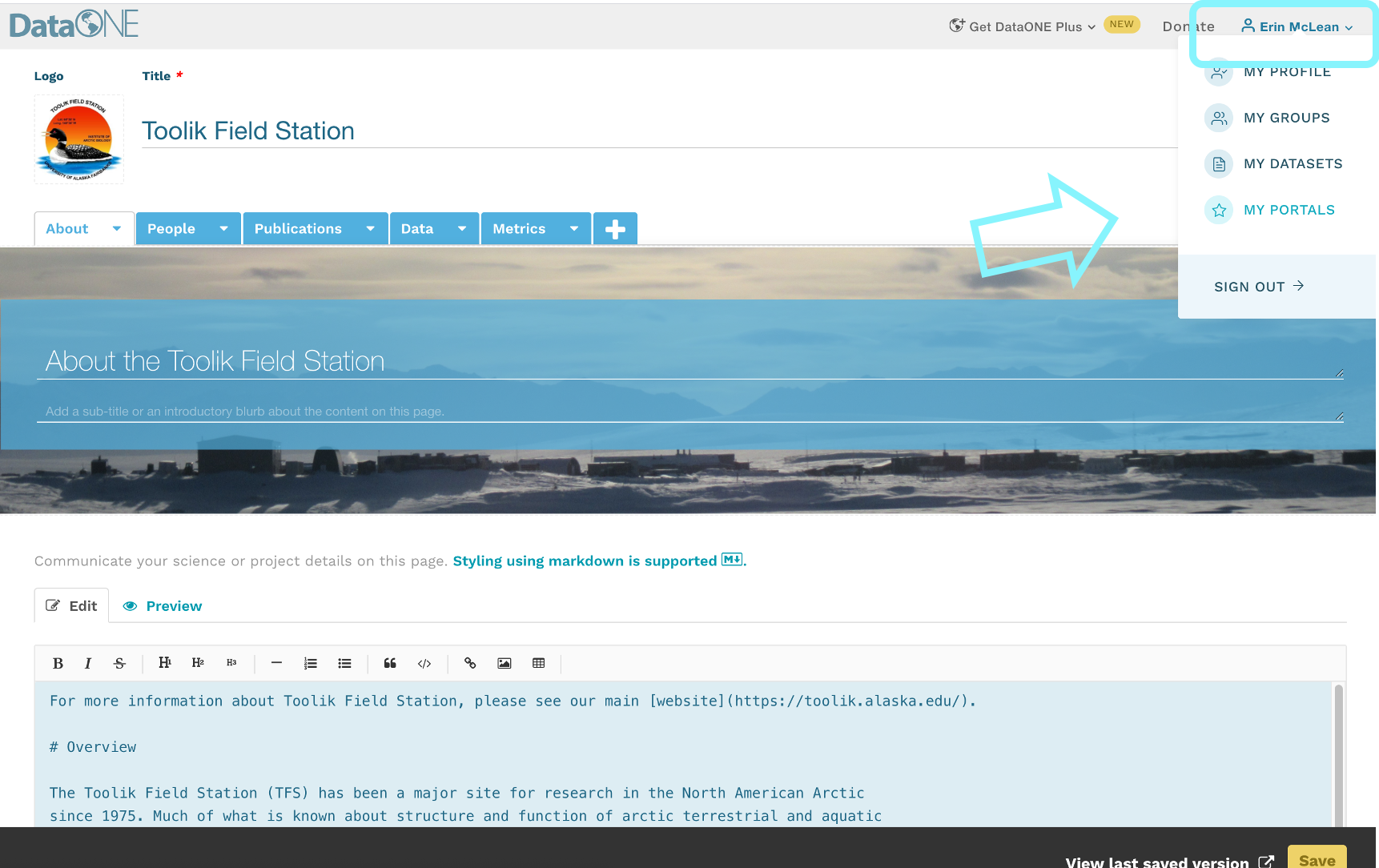

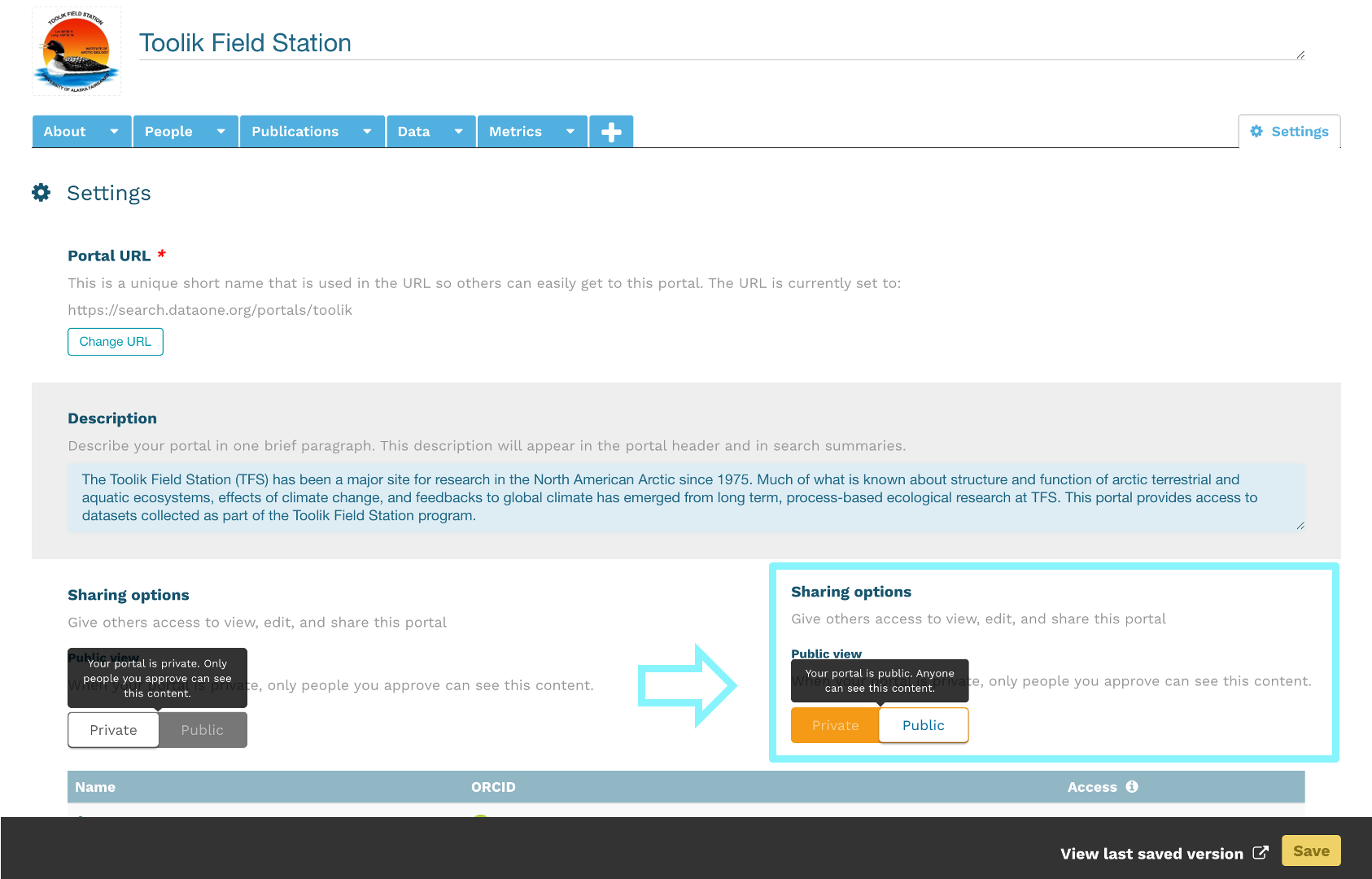

Shown below is one version of the Data Life Cycle that was developed by DataONE. The data life cycle provides a high-level overview of the stages involved in successful management and preservation of data for use and reuse. Multiple versions of a data life cycle exist, with differences attributable to variation in practices across domains or communities. Researchers need not move through the data life cycle in a cyclical fashion, and some research activities might use only part of the life cycle. For instance, a project involving meta-analysis might focus on the Discover, Integrate, and Analyze steps, while a project focused on primary data collection and analysis might bypass the Discover and Integrate steps. However, ‘Plan’ is at the top of the data life cycle because it is advisable to begin your data management planning at the start of your research process, before any data have been collected.

5.1.3 Why Plan?

Planning data management in advance provides a number of benefits to the researcher.

- Saves time and increases efficiency: Data management planning requires that a researcher think about data handling in advance of data collection, potentially raising any challenges before they are encountered.

- Engages your team: Planning effectively requires conversations with multiple parties, which engages project participants from the outset.

- Keeps you organized: It will be easier to organize your data for analysis and reuse.

- Meets funder requirements: Many funders require a data management plan as part of the proposal process.

- Supports data sharing: Information in the DMP is the foundation for archiving and sharing data with the community.

5.1.4 How to Plan

- As indicated above, engaging your team is a benefit of data management planning. Collaborators involved in the data collection and processing of your research data bring diverse expertise. Therefore, plan in collaboration with these individuals.

- Make sure to plan from the start to avoid confusion and data loss and to increase efficiency. Because many funding agencies require DMPs, it is nearly always necessary to plan from the start. However, the same should apply to research that is being undertaken outside of a specific funded proposal.

- Make use of the resources available to help you write a good DMP. These might include your institutional library or your organization's data manager, online resources, or educational materials such as these.

- Use tools available to you; you don’t have to reinvent the wheel.

- Revise your plan as situations change and as you adapt or alter your project. Like your research projects, data management plans are not static; they require changes and updates throughout the research process.

5.1.5 What to include in a DMP

If you are writing a data management plan as part of a solicitation proposal, the funding agency will have guidelines for the information they want to be provided in the plan. A good plan will provide information on the study design; data to be collected; metadata; policies for access, sharing & reuse; long-term storage & data management; and budget.

A note on Metadata: Both basic metadata (such as title and researcher contact information) and comprehensive metadata (such as complete methods of data collection) are critical for accurate interpretation and understanding. Full definitions of the variables in each dataset, especially their units, are also critical because they tie the data to the methods used to create them. For example, knowing how observations were blocked or grouped is necessary to compare studies properly and to synthesize them.

5.1.6 NSF DMP requirements

In the 2014 Proposal Preparation Instructions, Section J ‘Special Information and Supplementary Documentation’, NSF put forward the baseline requirements for a data management plan. In addition, specific division and program requirements provide further detail. If you are working on a research project with funding that does not require a data management plan, or are developing a plan for unfunded research, the NSF generic requirements are a good set of guidelines to follow.

Five Sections of the NSF DMP Requirements

1. Products of research

Types of data, samples, physical collections, software, curriculum materials, other materials produced during project

2. Data formats and standards

Standards to be used for data and metadata format and content (for initial data collection, as well as subsequent storage and processing)

3. Policies for access and sharing

Provisions for appropriate protection of privacy, confidentiality, security, intellectual property, or other rights or requirements

4. Policies and provisions for re-use

Including re-distribution and the production of derivatives

5. Archiving of data

Plans for archiving data, samples, research products and for preservation of access

5.1.7 Tools in Support of Creating a DMP

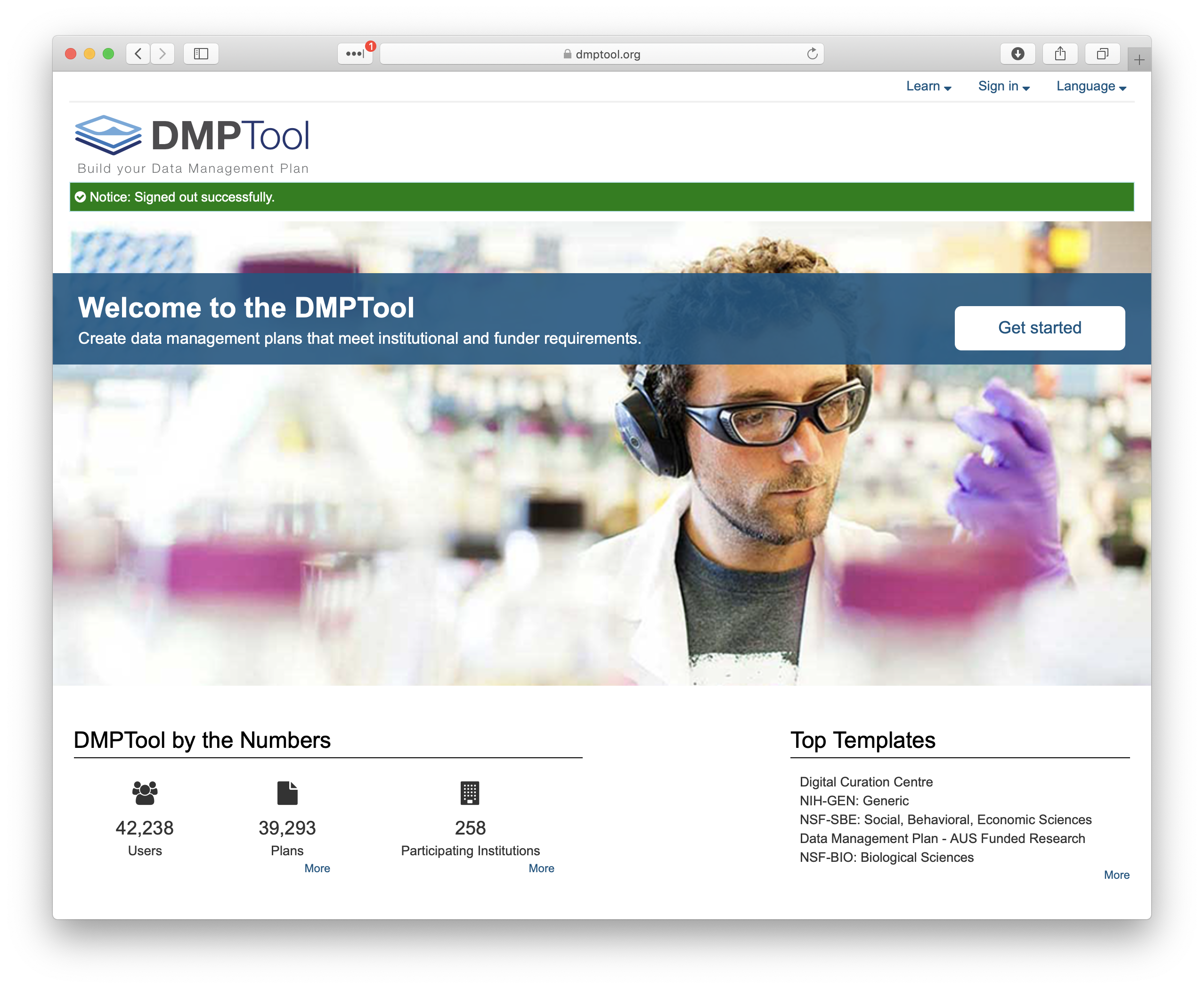

The DMPTool and DMP Online are both easy-to-use, web-based tools that support the development of a DMP. The tools are partnered and share a code base; the DMPTool incorporates templates from US funding agencies, and DMP Online is focused on EU requirements.

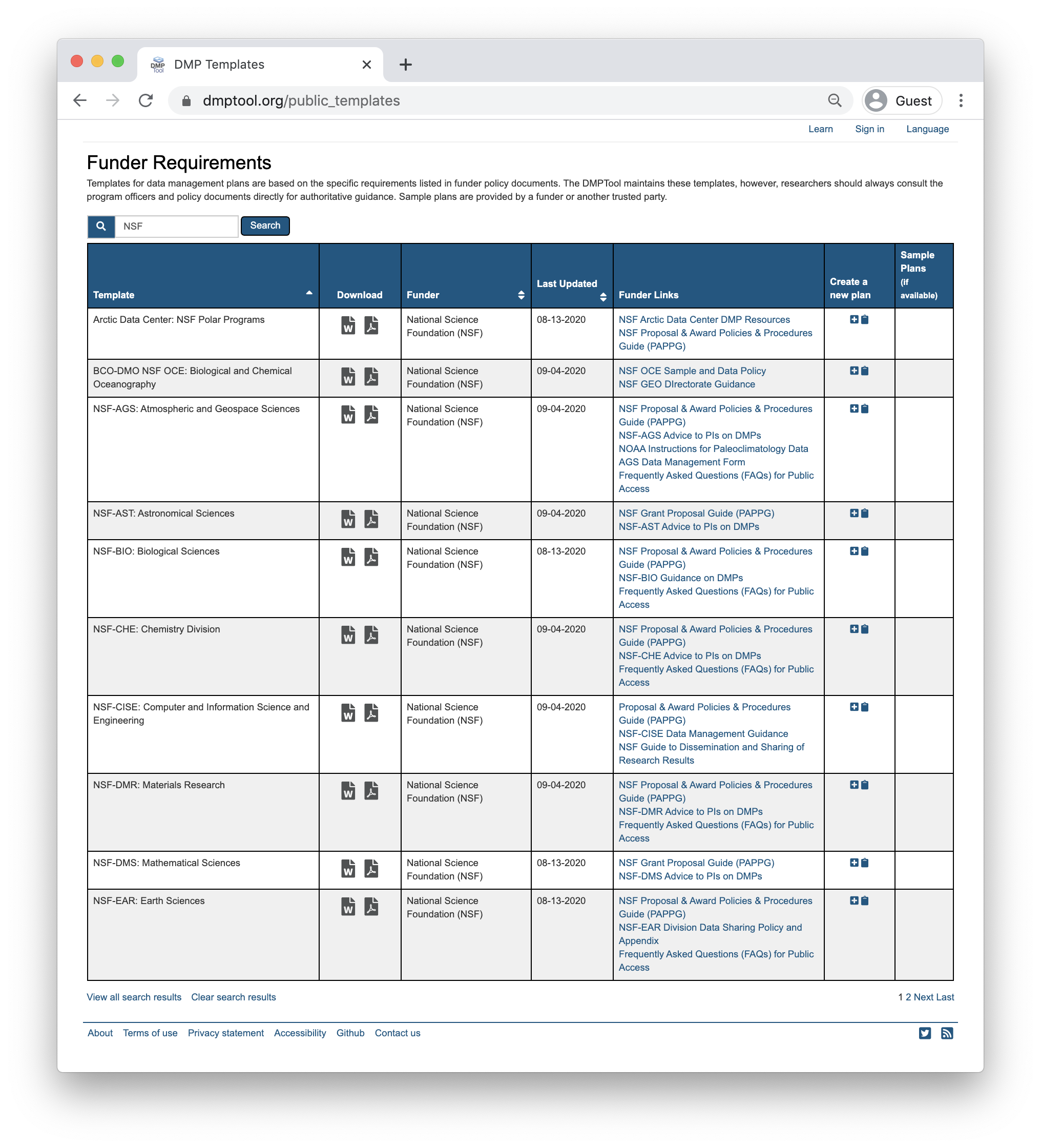

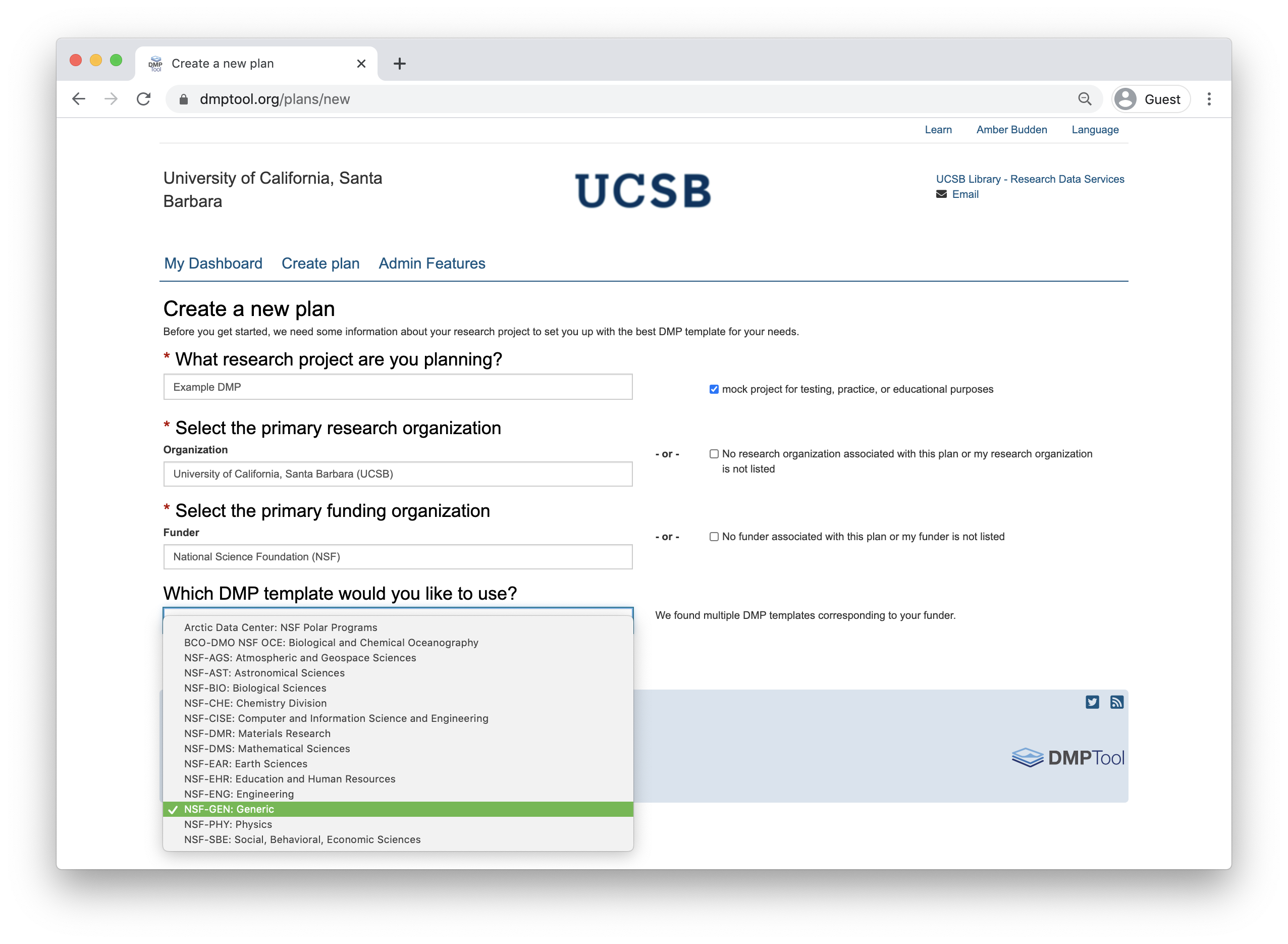

5.1.8 NSF (and other) Template Support for DMPs

To support researchers in creating data management plans that fulfil funder requirements, the DMPTool includes templates for multiple funders and agencies. These templates form the backbone of the tool's structure and help users ensure they are responding to specific agency guidelines. There are templates for NSF-BIO, NSF-EAR, and other NSF divisions. If your specific agency or division is not represented, the NSF-GEN (generic) template is a good choice to work from.

When creating a new plan, indicate that your funding agency is the National Science Foundation; you will then have the option to select a template. Below is an example using NSF-GEN.

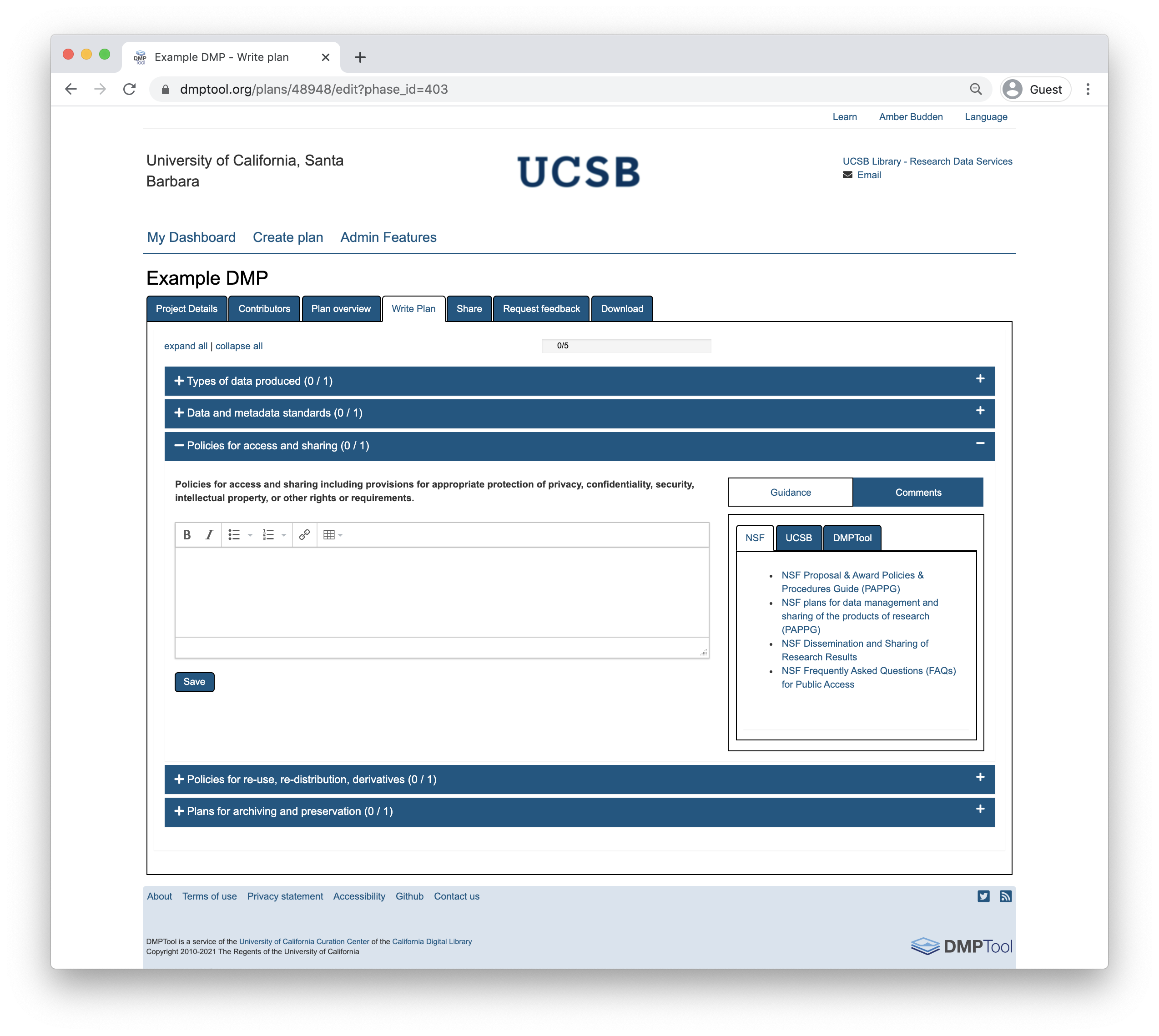

As you answer the questions posed, guidance from the NSF, the DMPTool, and your institution (if applicable) will be visible under the ‘Guidance’ tab on the right-hand side. In some cases, the template may include an example answer at the bottom. If so, it is not intended to be copied and pasted verbatim; rather, it is example prose that you can refer to when answering the question.

5.1.10 Additional Resources

The article Ten Simple Rules for Creating a Good Data Management Plan is a great resource for thinking about writing a data management plan and the information you should include within the plan.

5.2 Reproducible Research, RStudio and Git/GitHub Setup

5.2.1 Learning Objectives

In this lesson, you will learn:

- Why reproducibility in research is useful

- What is meant by computational reproducibility

- How to check that your RStudio environment is set up properly for analysis

- How to set up git

5.2.2 Introduction to reproducible research

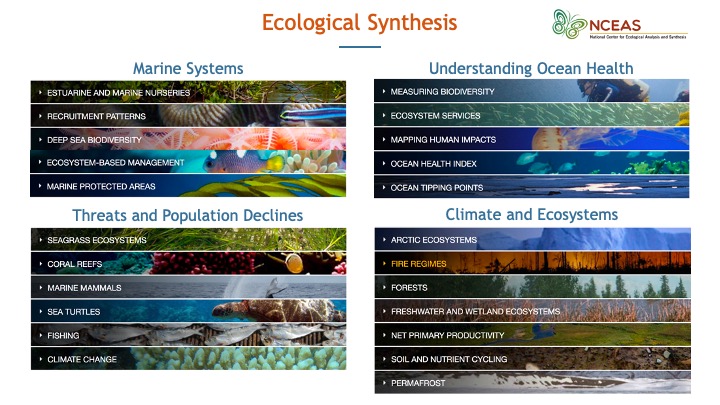

Reproducibility is the hallmark of science, which is based on empirical observations coupled with explanatory models. Reproducible research is also at the core of what we do at NCEAS: research synthesis.

The National Center for Ecological Analysis and Synthesis was funded over 25 years ago to bring together interdisciplinary researchers to explore grand challenge ecological questions through analysis of existing data. Such questions often require integration, analysis, and synthesis of diverse data across broad temporal, spatial, and geographic scales, data that are not typically collected by a single individual or collaborative team. Synthesis science, which leverages previously collected data, was a novel concept at that time, and the approach and success of NCEAS has been a model for other synthesis centers.

During this course you will learn about some of the challenges that can be encountered when working with published data, but more importantly, how to apply best practices to data collection, documentation, analysis and management to mitigate these challenges in support of reproducible research.


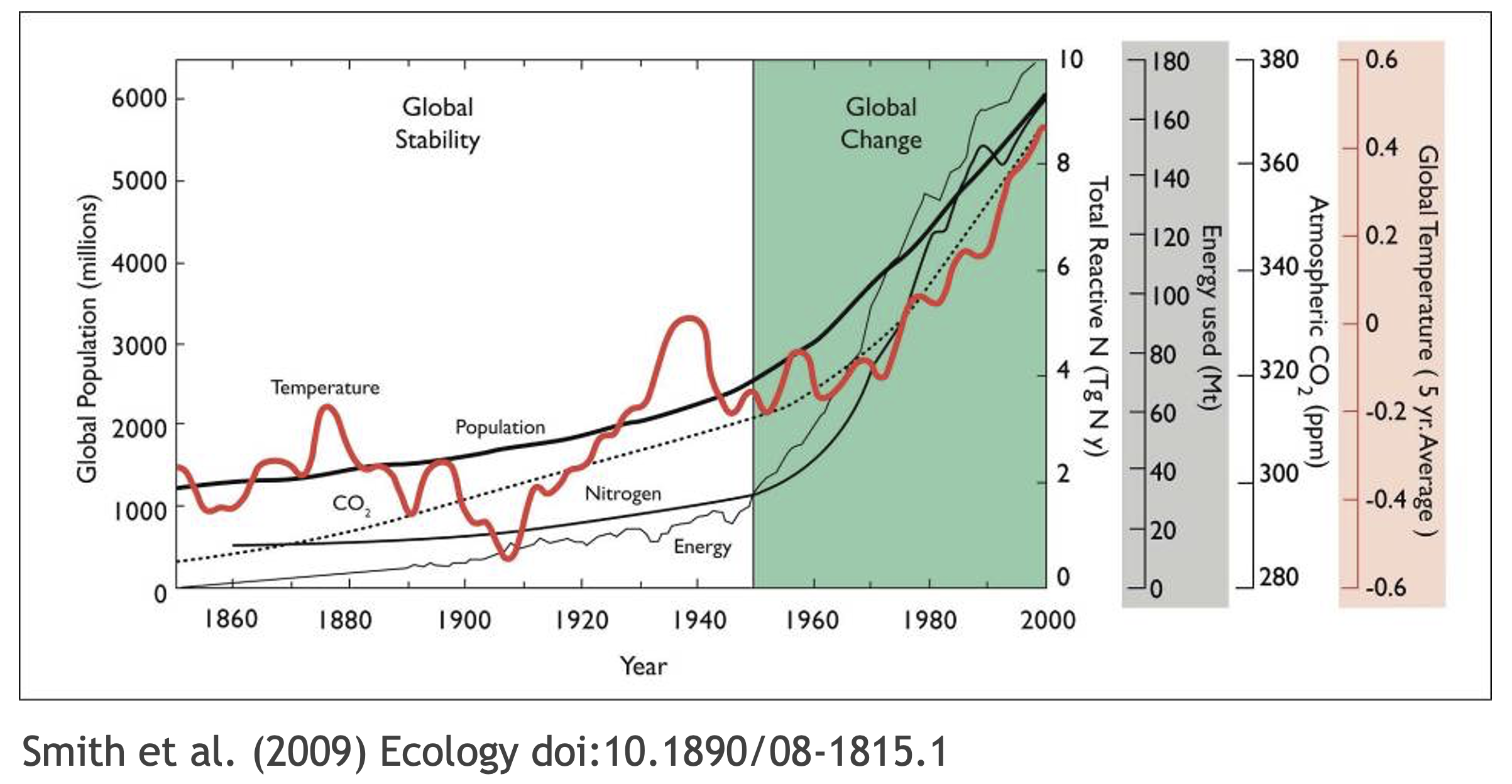

Why is reproducible research important?

Working in a reproducible manner builds efficiencies into your own research practices. The ability to automate processes and rerun analyses as you collect more data, or share your full workflow (including data, code and products) with colleagues, will accelerate the pace of your research and collaborations. However, beyond these direct benefits, reproducible research builds trust in science with the public, policy makers and others.

What data were used in this study? What methods applied? What were the parameter settings? What documentation or code are available to us to evaluate the results? Can we trust these data and methods?

Are the results reproducible?

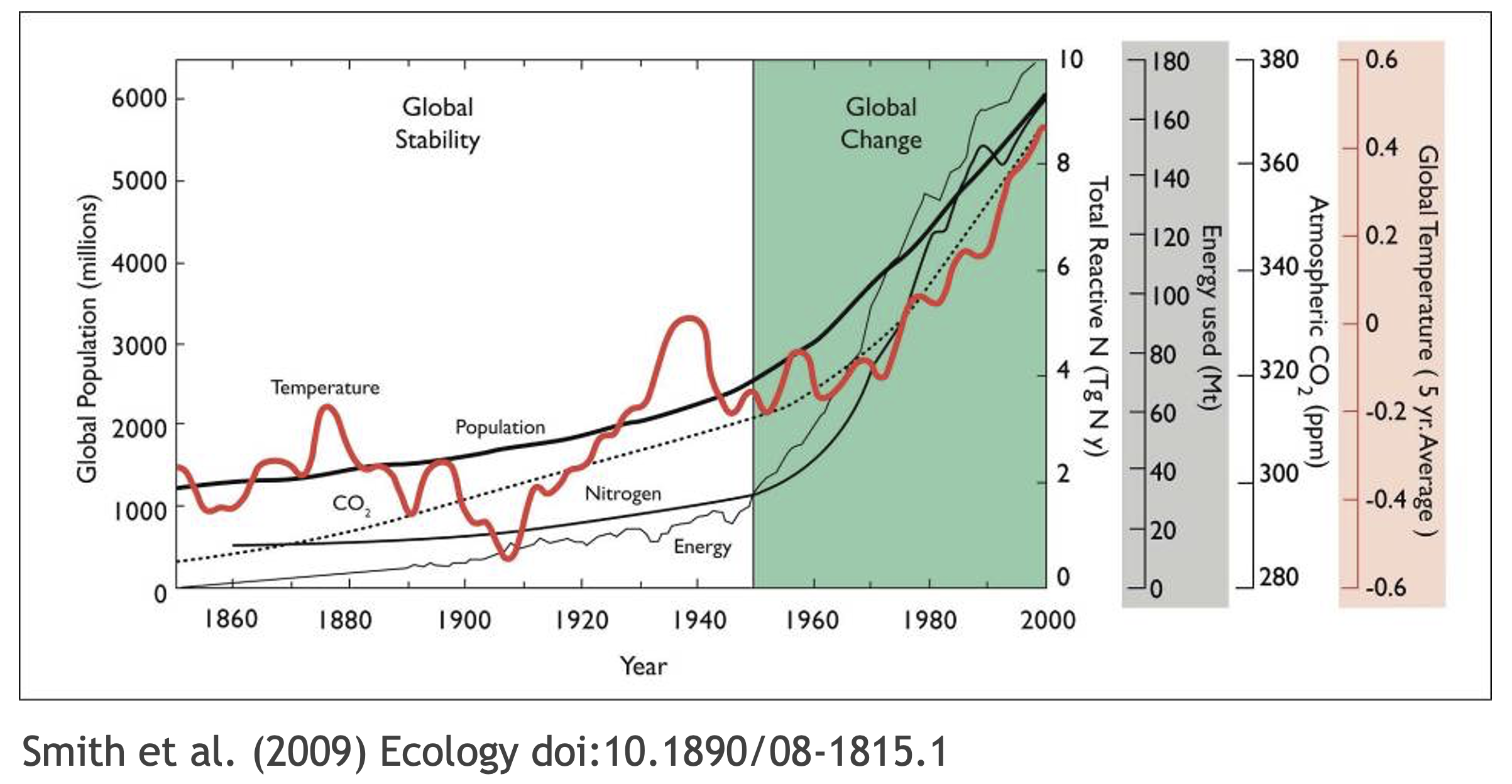

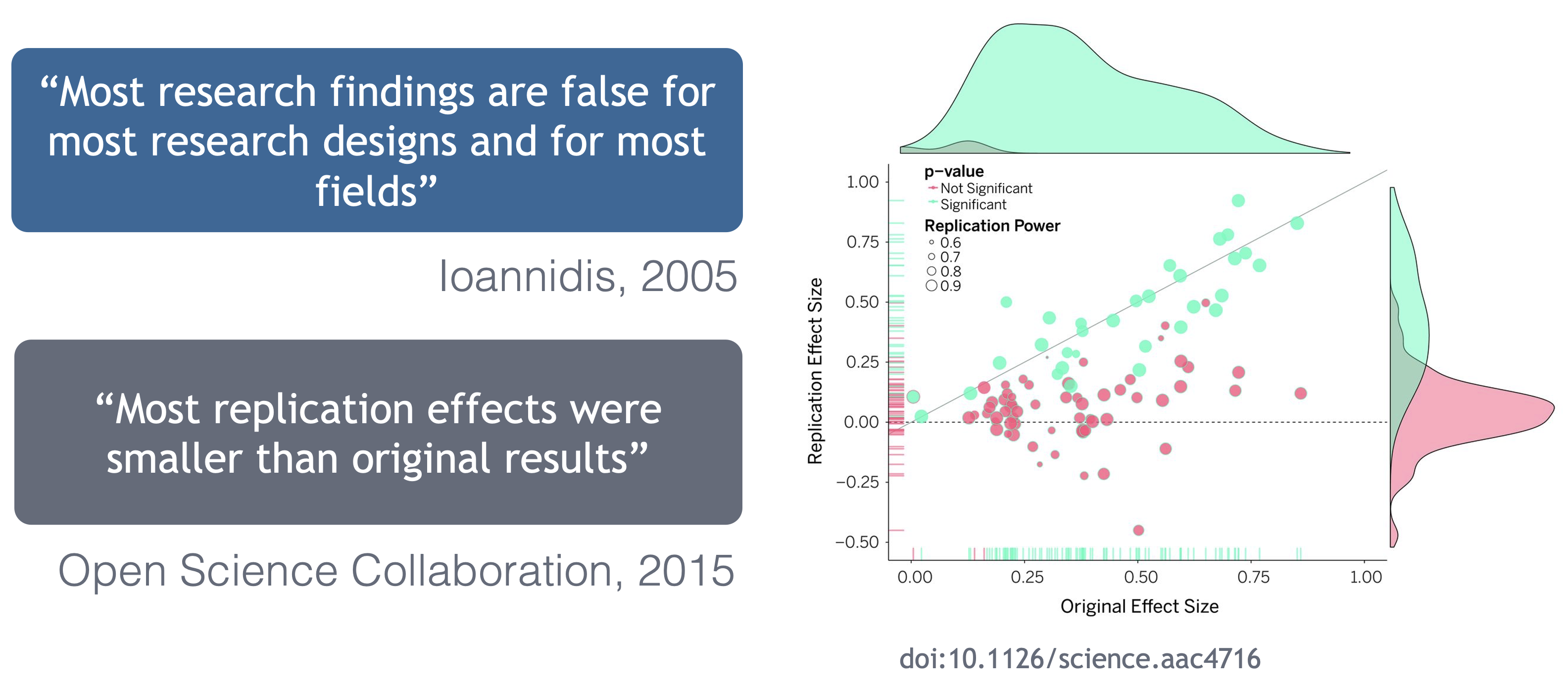

Ioannidis (2005) contends that “Most research findings are false for most research designs and for most fields”, and a study of replicability in psychology experiments found that “Most replication effects were smaller than the original results” (Open Science Collaboration, 2015).

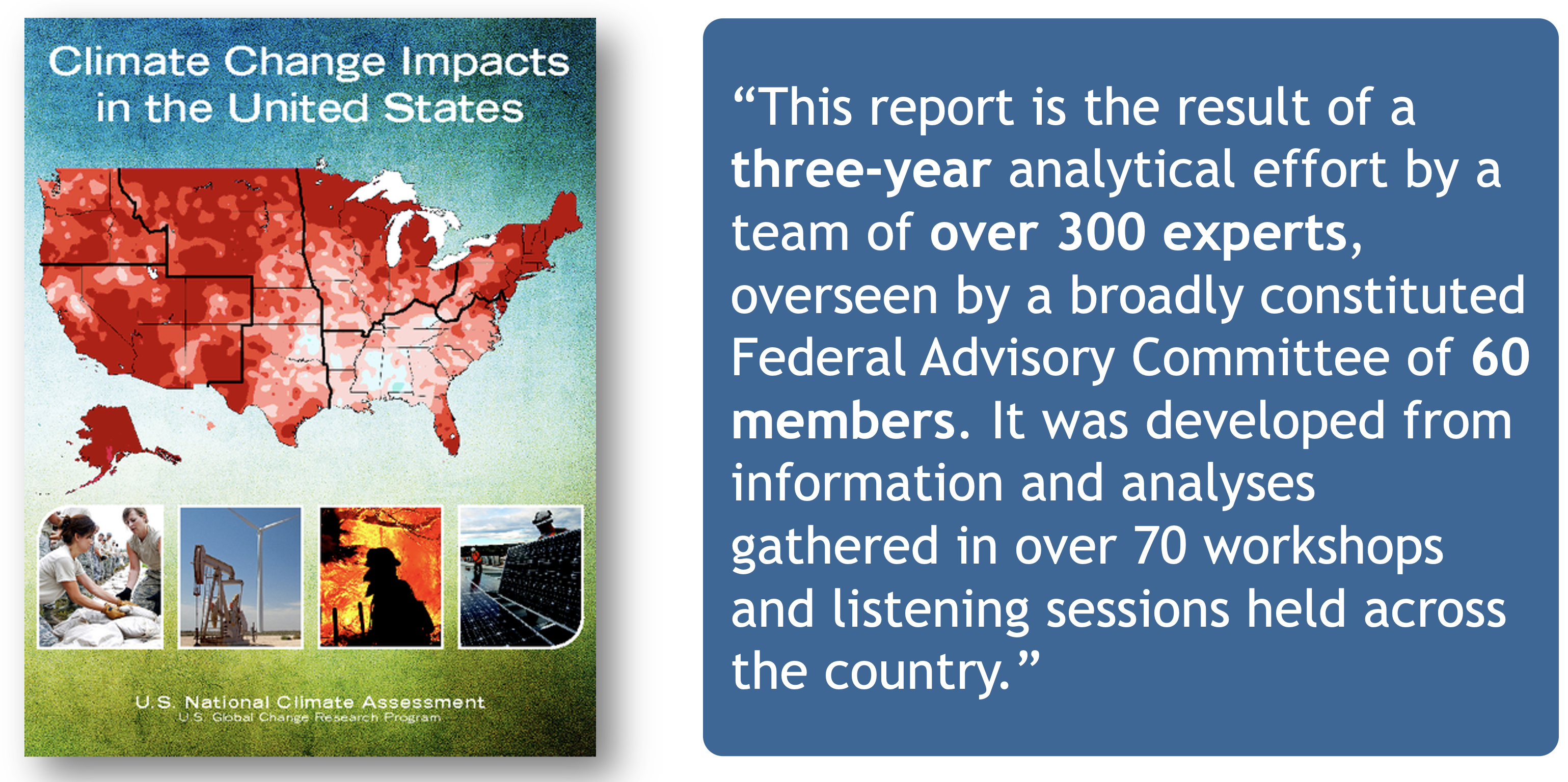

In the case of ‘climategate’, it took three years and over 300 personnel to gather the necessary provenance information to document how results, figures, and other outputs were derived from input sources. That time and effort could have been significantly reduced with appropriate documentation and reproducible practices. Moving forward, reproducible research training, practices, and infrastructure will reduce the need to manually chase this information, enabling replication studies and greater trust in science.

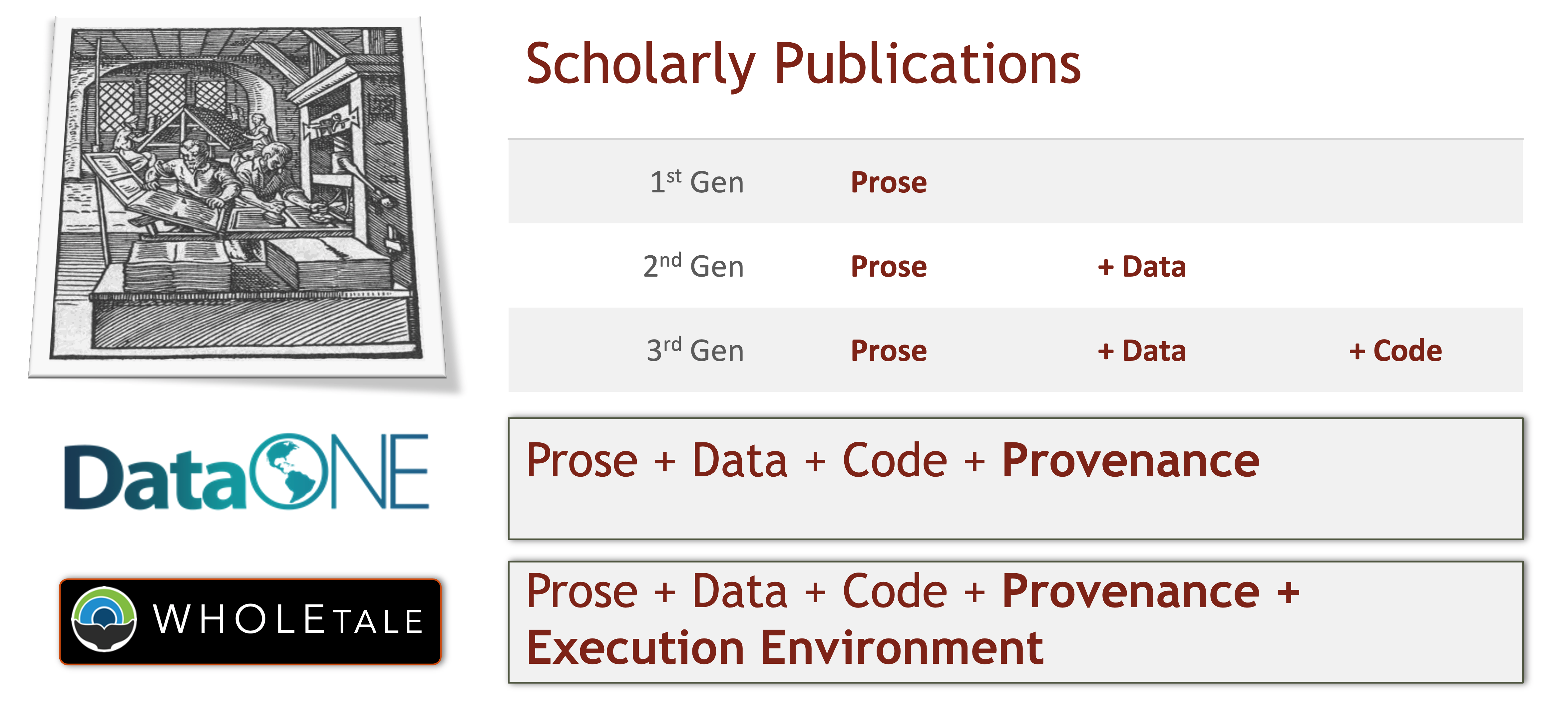

Computational reproducibility

While reproducibility encompasses the full science lifecycle, and includes issues such as methodological consistency and treatment of bias, in this course we will focus on computational reproducibility: the ability to document data, analyses, and models sufficiently for other researchers to be able to understand and ideally re-execute the computations that led to scientific results and conclusions.

The first step towards addressing these issues is to be able to evaluate the data, analyses, and models on which conclusions are drawn. Under current practice, this can be difficult because data are typically unavailable, the method sections of papers do not detail the computational approaches used, and analyses and models are often conducted in graphical programs, or, when scripted analyses are employed, the code is not available.

And yet, this is easily remedied. Researchers can achieve computational reproducibility through open science approaches, including straightforward steps for archiving data and code openly along with the scientific workflows describing the provenance of scientific results (e.g., Hampton et al. (2015), Munafò et al. (2017)).

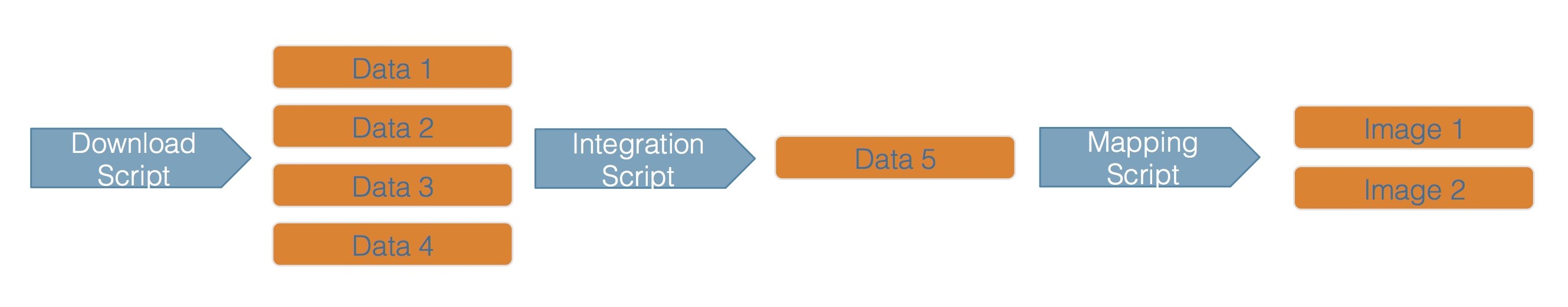

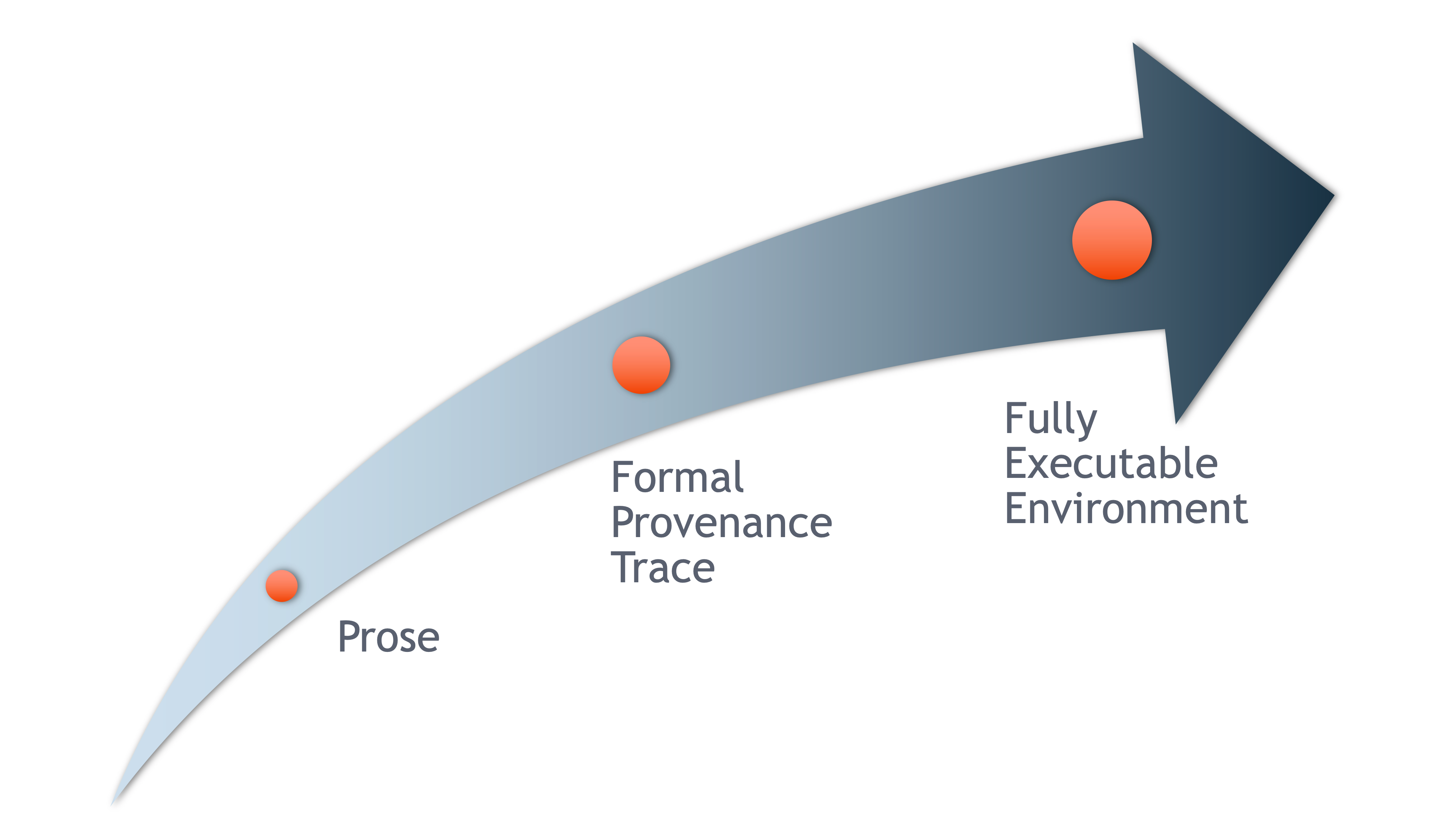

Conceptualizing workflows

Scientific workflows encapsulate all of the steps from data acquisition through cleaning, transformation, integration, analysis, and visualization.

Workflows can range in detail from simple flowcharts to fully executable scripts. R scripts and Python scripts are a textual form of a workflow, and when researchers publish specific versions of the scripts and data used in an analysis, it becomes far easier to repeat their computations and understand the provenance of their conclusions.
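Publishing specific versions works best when the computational environment is recorded too. As a minimal sketch, base R's `sessionInfo()` can be written to a text file next to an analysis's outputs, and `set.seed()` makes random steps repeatable. The helper `record_session` and the file name `session_info.txt` are hypothetical choices for illustration, not a convention from this course.

```r
# Hypothetical helper: write sessionInfo() (R version, platform, attached
# packages) to a text file that travels with the analysis outputs.
record_session <- function(path) {
  lines <- capture.output(print(sessionInfo()))
  writeLines(lines, path)
  invisible(path)
}

# Fixing the random seed makes stochastic steps rerun identically.
set.seed(2020)
first_draw <- runif(3)
set.seed(2020)
second_draw <- runif(3)

# "session_info.txt" is a hypothetical file name
session_file <- record_session(file.path(tempdir(), "session_info.txt"))
```

Rerunning the script later and comparing the two session files is a quick way to see whether a different R or package version might explain a changed result.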

5.2.2.1 Summary

Computational reproducibility provides:

- transparency, by capturing and communicating scientific workflows

- the ability for research to stand on the shoulders of giants (build on work that came before)

- credit for secondary usage and support for easy attribution

- increased trust in science

Preserving computational workflows enables understanding, evaluation, and reuse for the benefit of future you and your collaborators and colleagues across disciplines.

Reproducibility means different things to different researchers. For our purposes, practical reproducibility looks like:

- Preserving the data

- Preserving the software workflow

- Documenting what you did

- Describing how to interpret it all

During this course we will outline best practices for how to make those four components happen.

5.2.3 Setting up the R environment on your local computer

To get started on the rest of this course, you will need to set up your own computer with the following software so that you can step through the exercises and are ready to take the tools you learn in the course into your daily work.

R Version

We will use R version 4.0.2, which you can download and install from CRAN. To check your version, run `R.version$version.string` in your RStudio console.

If you have R version 4.0.0 that will likely work fine as well.
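If you would rather have a script check the version for you, base R's `getRversion()` returns a version object that can be compared directly with a string such as "4.0.0", the minimum mentioned above. The helper name `check_r_version` is hypothetical; this is a sketch, not part of the course materials.

```r
# Hypothetical helper: warn if the running R is older than a minimum version.
check_r_version <- function(minimum = "4.0.0") {
  current <- getRversion()
  if (current < minimum) {
    warning("R ", current, " is older than ", minimum, "; please update from CRAN.")
    return(FALSE)
  }
  message("R ", current, " meets the minimum of ", minimum)
  TRUE
}

check_r_version()
```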

RStudio Version

We will be using RStudio version 1.3.1000 or later, which you can download and install from the RStudio website. To check your RStudio version, run `RStudio.Version()$version` in your RStudio console.

If the output of this does not say 1.2.500 or higher, you should update your RStudio. Do this by selecting Help -> Check for Updates and follow the prompts.

Package installation

Run the following lines to check that all of the packages we need for the training are installed on your computer.

```r
packages <- c("dplyr", "tidyr", "readr", "devtools", "usethis", "roxygen2", "leaflet",
              "ggplot2", "DT", "scales", "shiny", "sf", "ggmap", "broom", "captioner", "MASS")

# install any package that is not already installed
for (package in packages) {
  if (!(package %in% rownames(installed.packages()))) {
    install.packages(package)
  }
}

rm(packages) # remove variable from workspace

# Now upgrade any out-of-date packages
update.packages(ask = FALSE)
```

If you haven’t installed all of the packages, this will automatically start installing them. Packages that are already installed are skipped by the loop, and `update.packages()` then upgrades any that are out of date.
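To confirm afterward that each package can actually be loaded, a short check with base R's `requireNamespace()` reports any that fail. The package vector is redefined here because the code above removes `packages` from the workspace; the helper name `check_packages` is hypothetical.

```r
# Hypothetical helper: report which packages cannot be loaded.
check_packages <- function(pkgs) {
  # requireNamespace() returns TRUE/FALSE without attaching the package
  ok <- vapply(pkgs, requireNamespace, logical(1), quietly = TRUE)
  if (all(ok)) {
    message("All ", length(pkgs), " packages are available.")
  } else {
    message("Not available: ", paste(pkgs[!ok], collapse = ", "))
  }
  invisible(ok)
}

check_packages(c("dplyr", "tidyr", "readr", "devtools", "usethis", "roxygen2",
                 "leaflet", "ggplot2", "DT", "scales", "shiny", "sf", "ggmap",
                 "broom", "captioner", "MASS"))
```

Any package listed as not available can be reinstalled individually with `install.packages()`.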

Next, create a new R Markdown document (File -> New File -> R Markdown). If you have never made an R Markdown document before, a dialog box will pop up asking if you wish to install the required packages. Click yes.

At this point, RStudio and R should be all set up.

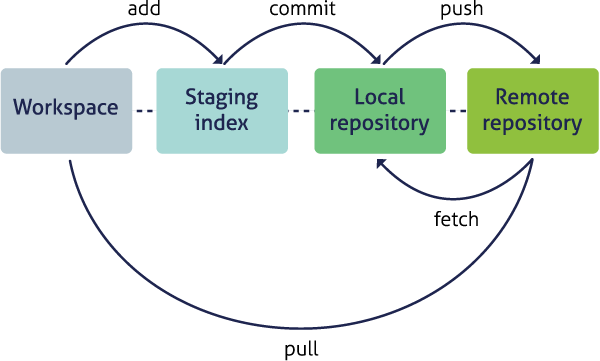

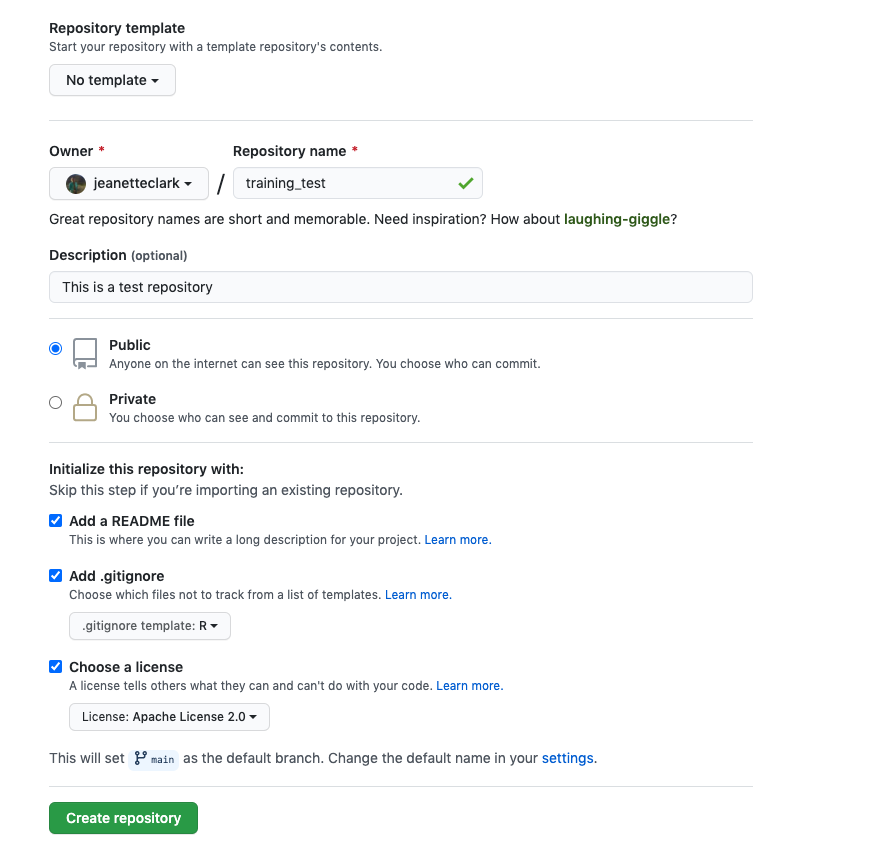

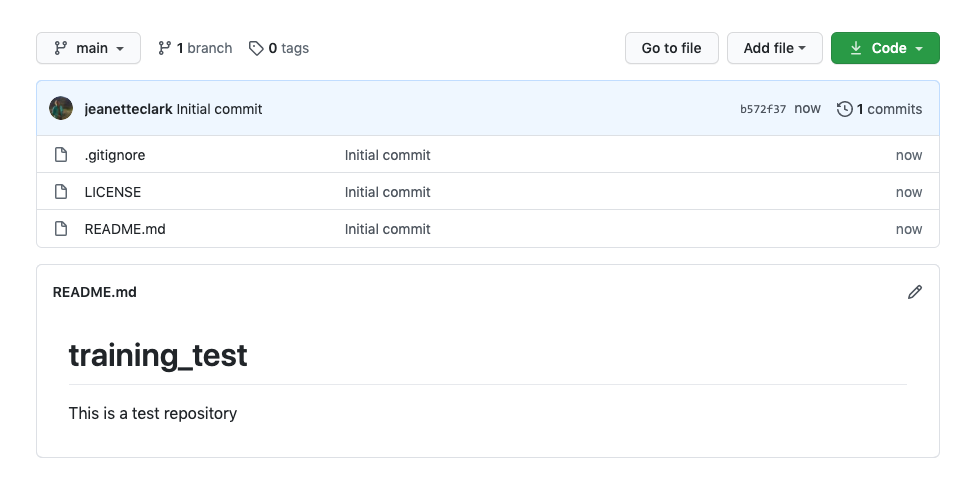

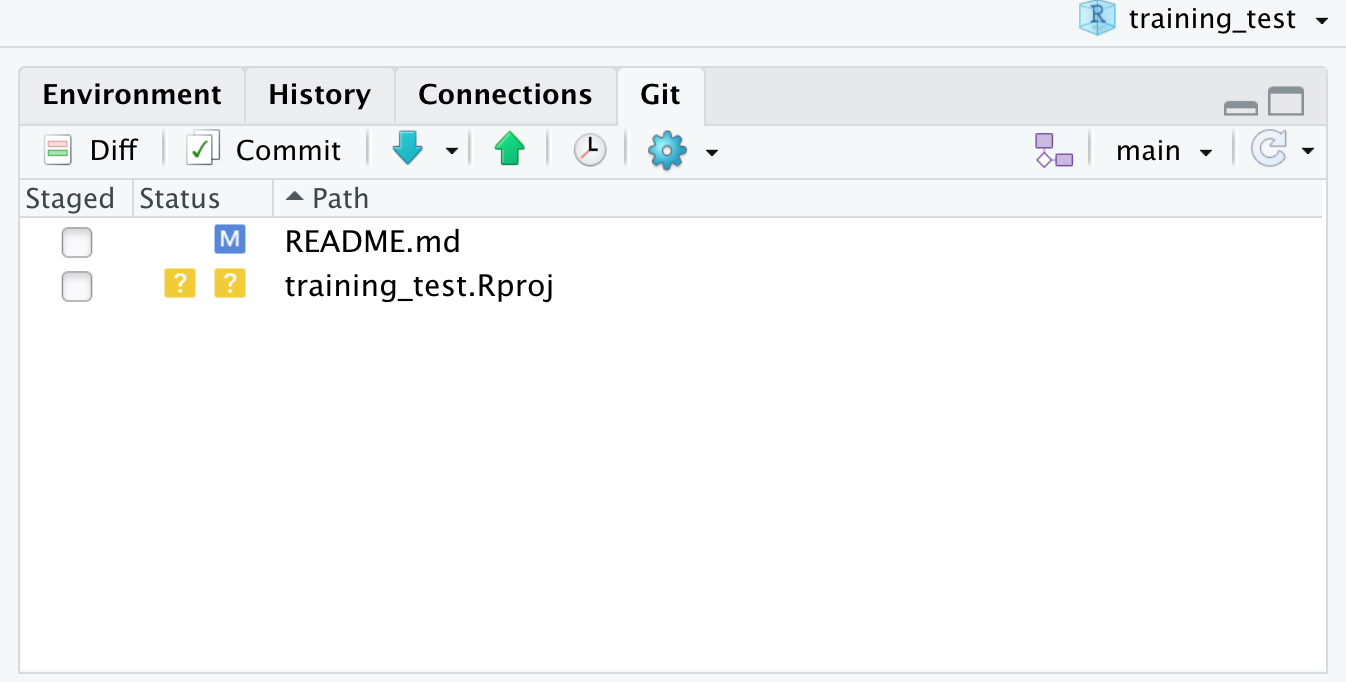

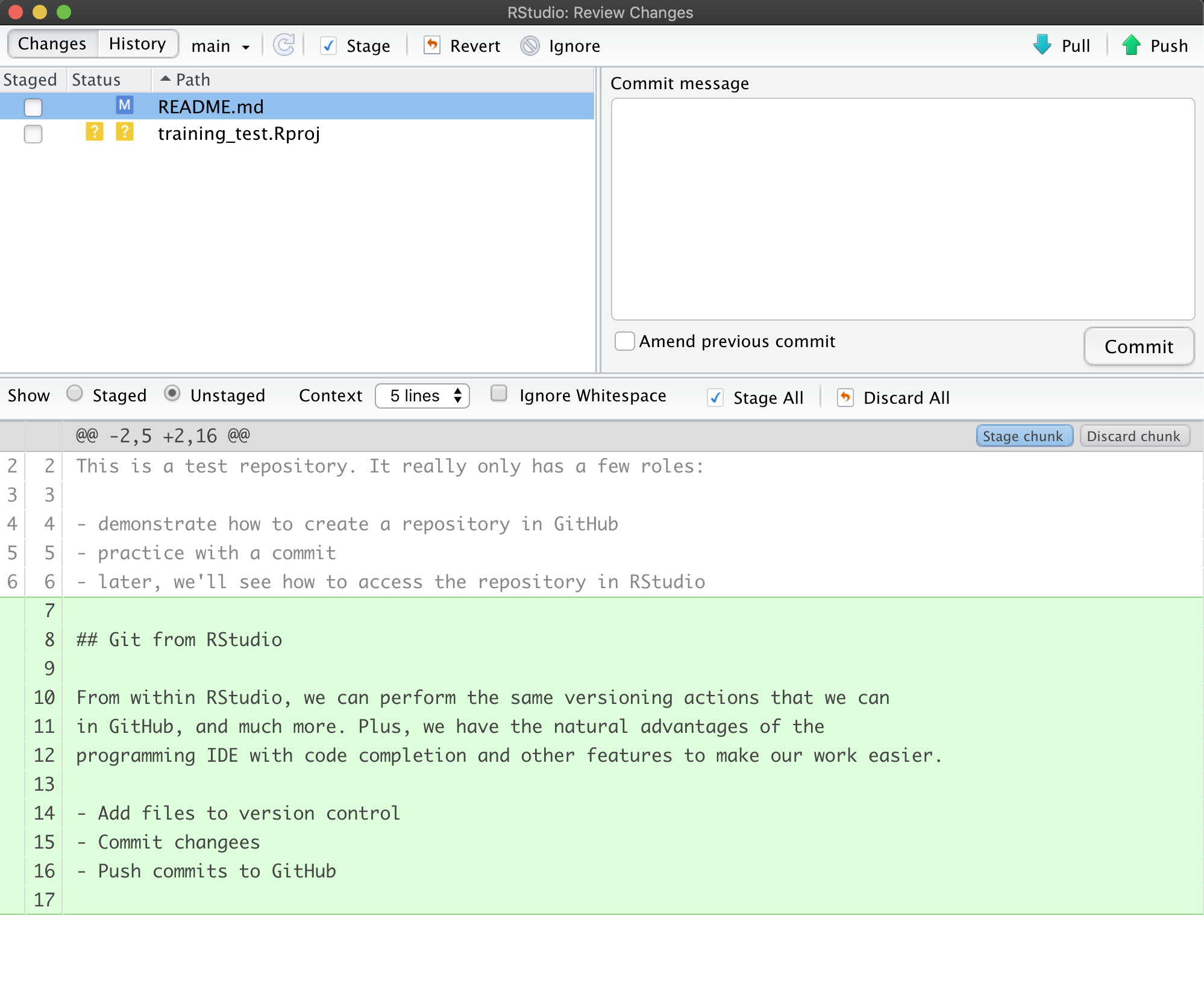

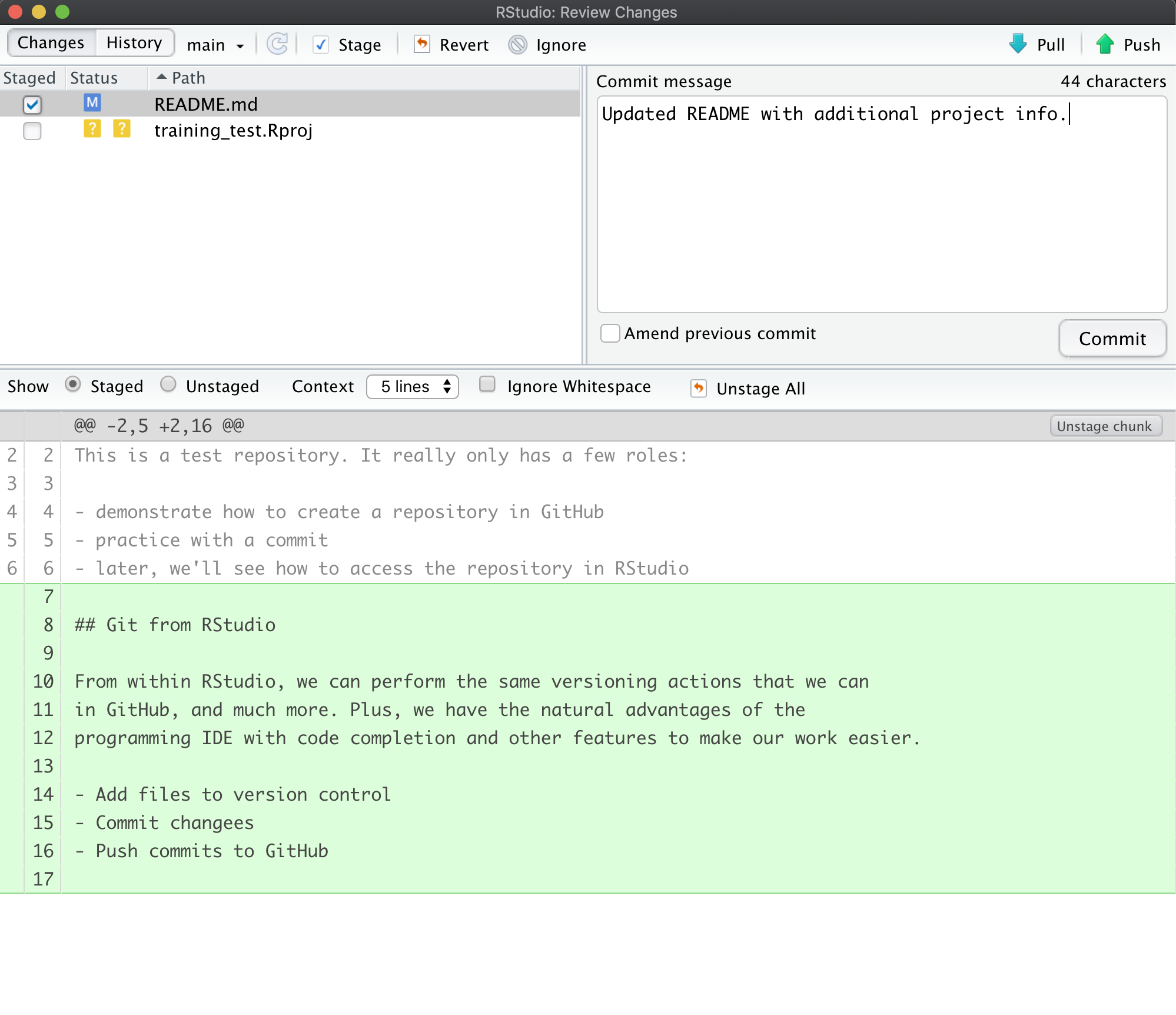

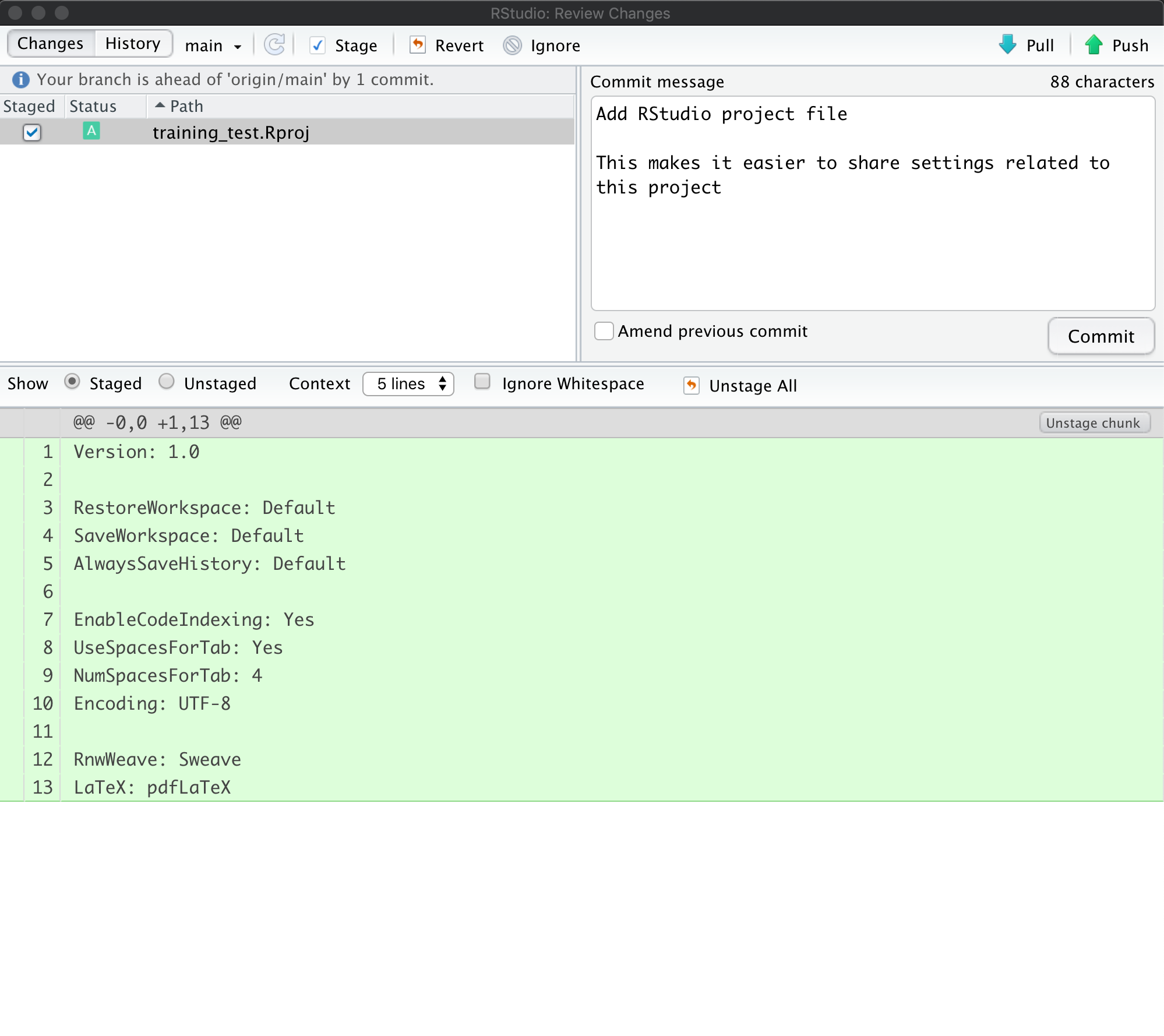

Setting up git

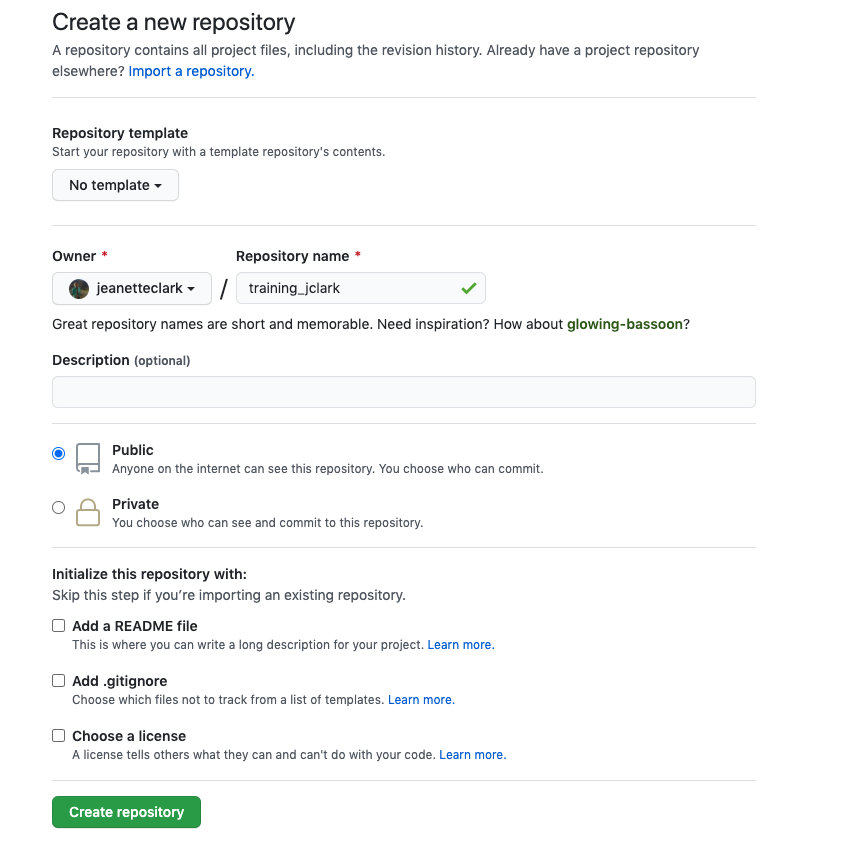

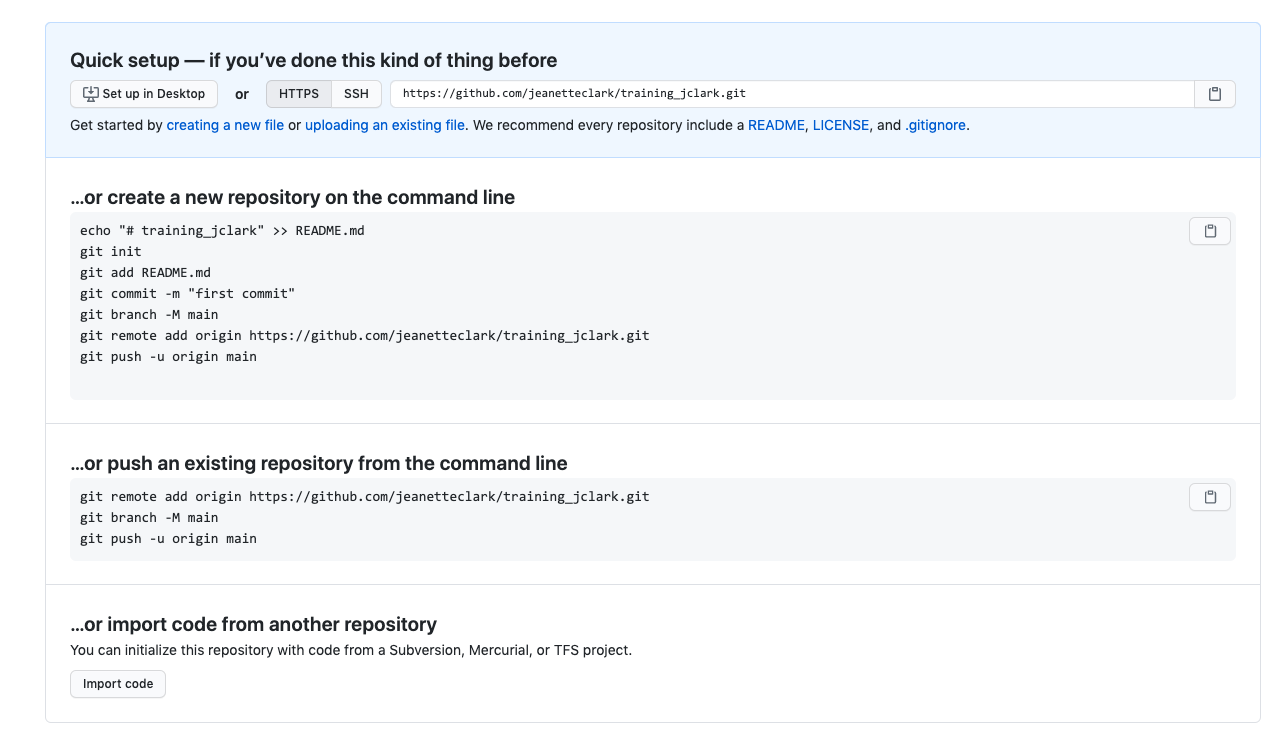

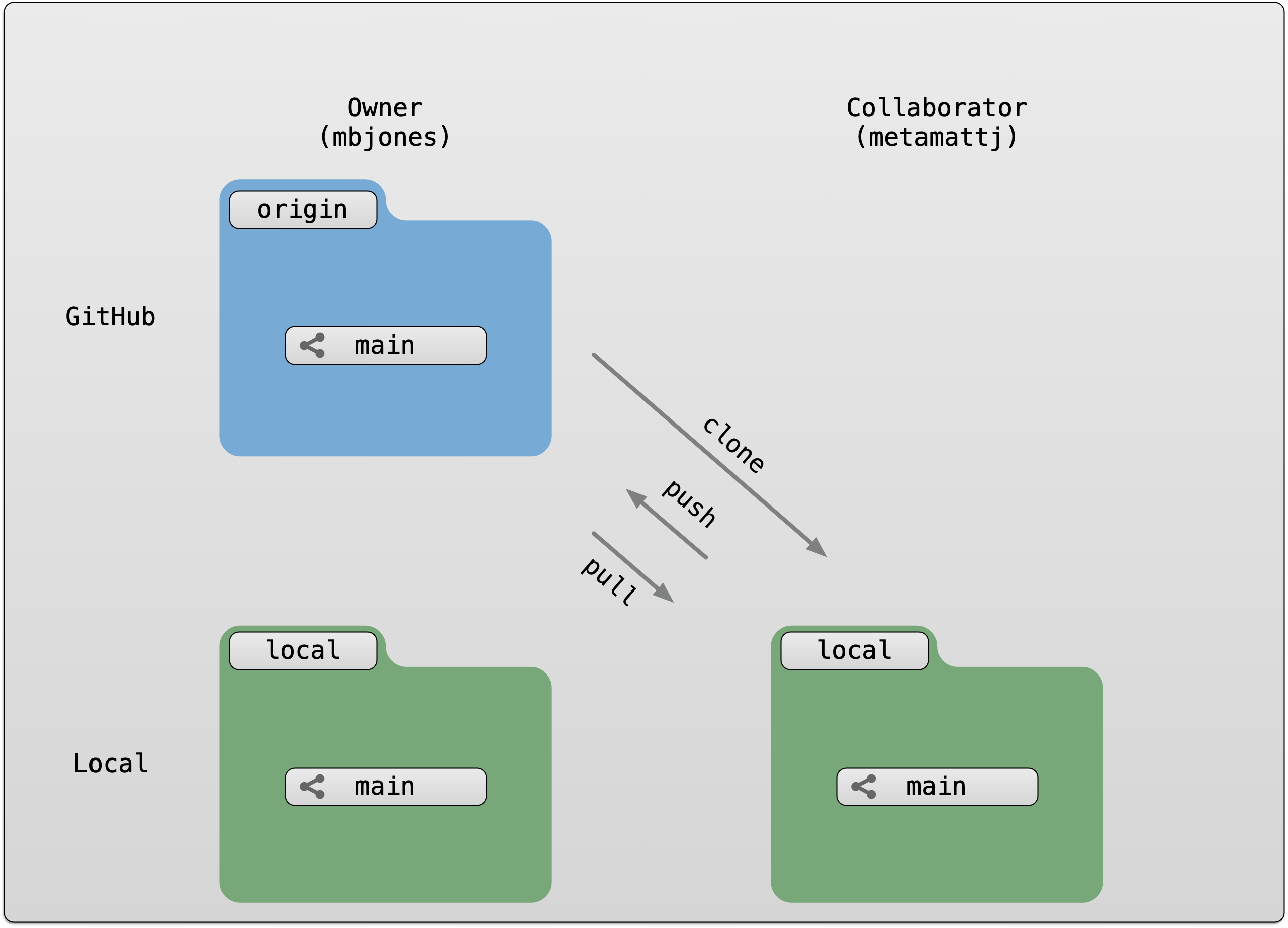

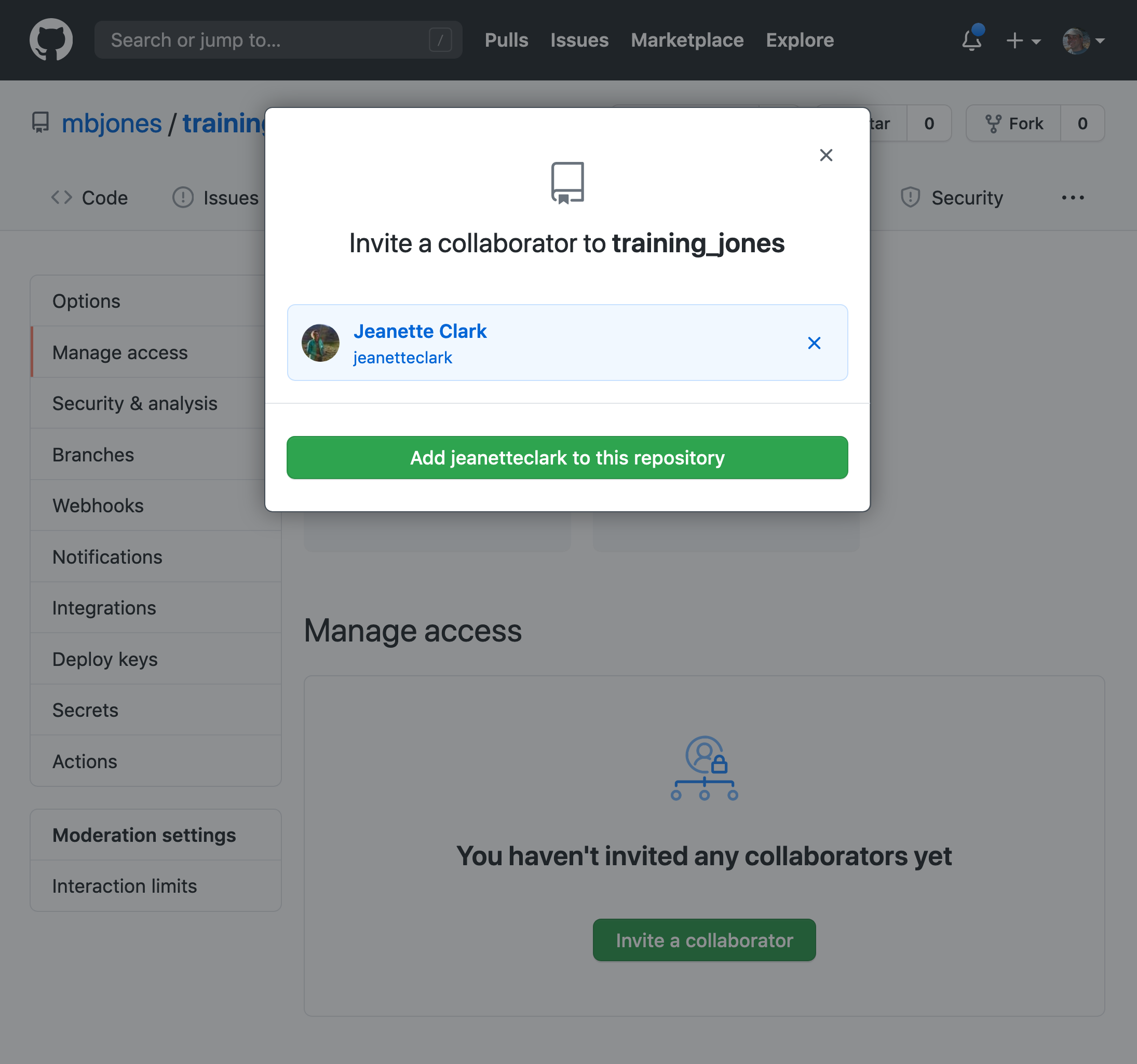

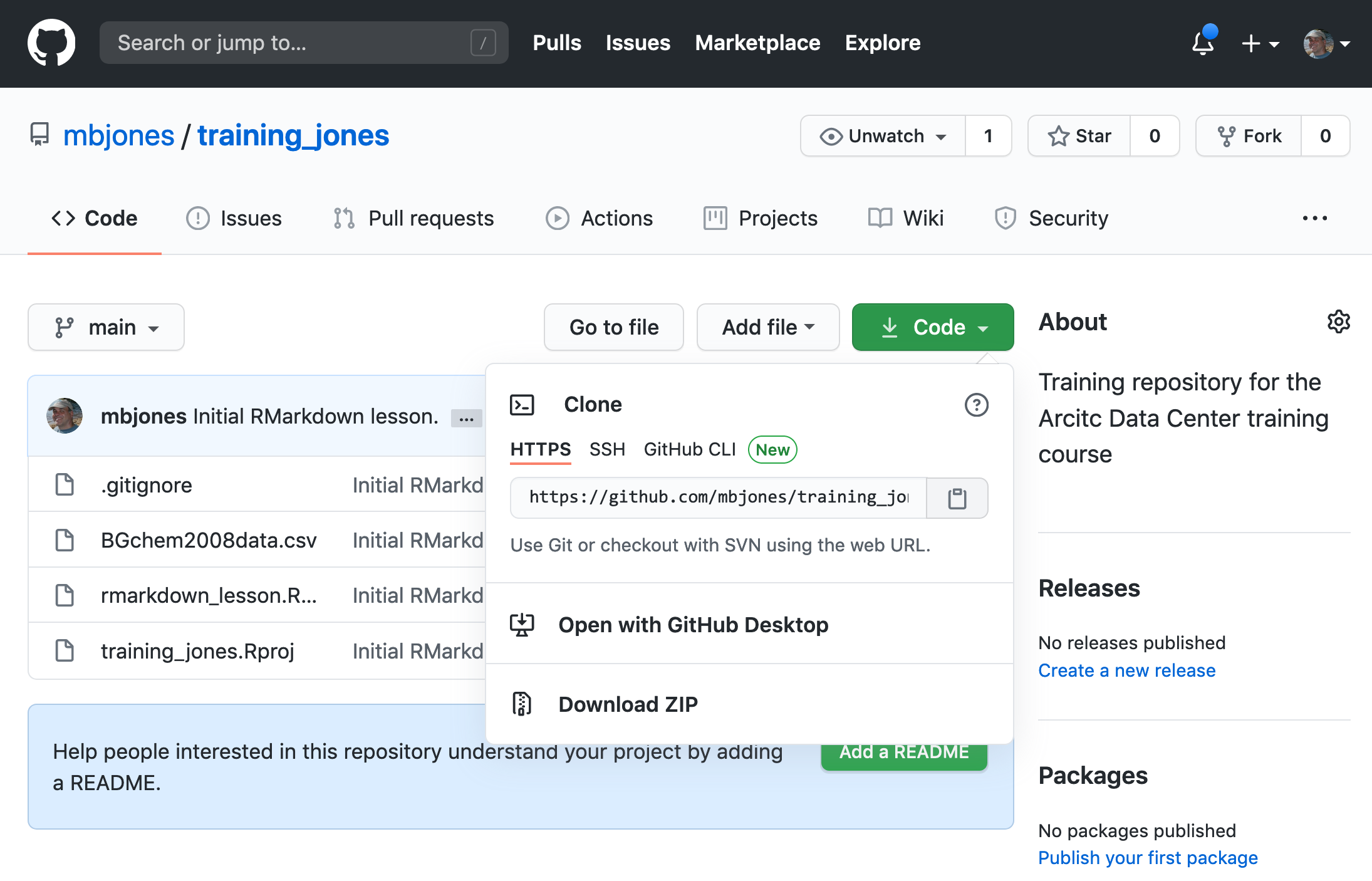

If you haven’t already, go to github.com and create an account.

Before using git, you need to tell it who you are, also known as setting the global options. We will do this through the command line. Newer versions of RStudio have a nice feature that lets you open a terminal window in your RStudio session. Do this by selecting Tools -> Terminal -> New Terminal.

A terminal tab should now be open where your console usually is.

To see if you already have your name and email options set, use these commands from the terminal: `git config --global user.name` and `git config --global user.email`.

To set the global options, type the following into the command prompt, with your actual name, and press enter: `git config --global user.name "Your Name"`

Next, enter the following line, with the email address you used when you created your account on github.com: `git config --global user.email "you@example.com"`

Note that these lines need to be run one at a time.

Finally, check to make sure everything looks correct by entering these commands, which will return the options that you have set: `git config --global user.name` and `git config --global user.email`.

Setting up git locally

Every time you set up git on a new computer, you need to repeat the steps above to set your git username and email address.

Note for Windows Users

If you get “command not found” (or similar) when you try these steps through the RStudio terminal tab, you may need to set the type of terminal that gets launched by RStudio. Under some git install scenarios, the git executable may not be available to the default terminal type. Follow the instructions on the RStudio site for Windows specific terminal options. In particular, you should choose “New Terminals open with Git Bash” in the Terminal options (Tools->Global Options->Terminal).

In addition, some versions of Windows have difficulty with the command line if you are using an account name with spaces in it (such as “Matt Jones”, rather than something like “mbjones”). You may need to use an account name without spaces.

Updating a previous R installation

This is useful for users who already have R with some packages installed and need to upgrade R, but don’t want to lose packages. If you have never installed R or any R packages before, you can skip this section.

If you already have R installed, but need to update, and don’t want to lose your packages, these two R functions can help you. The first will save all of your packages to a file. The second loads the packages from the file and installs packages that are missing.

Save this script to a file (e.g., package_update.R).

```r
#' Save R packages to a file. Useful when updating R version
#'
#' @param path path to rda file to save packages to. eg: installed_old.rda
save_packages <- function(path) {
  tmp <- installed.packages()
  # keep only user-installed packages (base and recommended packages have a Priority)
  installedpkgs <- as.vector(tmp[is.na(tmp[, "Priority"]), 1])
  save(installedpkgs, file = path)
}

#' Update packages from a file. Useful when updating R version
#'
#' @param path path to rda file where packages were saved
update_packages <- function(path) {
  # load the saved package list into a separate environment
  tmp <- new.env()
  load(file = path, envir = tmp)
  installedpkgs <- tmp[[ls(tmp)[1]]]

  # compare against the packages installed under the new R version
  tmp <- installed.packages()
  installedpkgs.new <- as.vector(tmp[is.na(tmp[, "Priority"]), 1])
  missing <- setdiff(installedpkgs, installedpkgs.new)

  # only call install.packages() when something is actually missing
  if (length(missing) > 0) {
    install.packages(missing)
  }
  update.packages(ask = FALSE)
}
```

Source the file that you saved above (e.g., `source("package_update.R")`). Then run the `save_packages` function, for example `save_packages("installed_old.rda")`.

Then quit R, go to CRAN, and install the latest version of R.

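Source the R script that you saved above again (e.g., `source("package_update.R")`), and then run `update_packages("installed_old.rda")`, using the same file name you passed to `save_packages`.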

This should install all of your R packages that you had before you upgraded.

5.3 Best Practices: Data and Metadata

5.3.1 Learning Objectives

In this lesson, you will learn:

- How to achieve practical reproducibility

- Some best practices for data and metadata management

5.3.2 Best Practices: Overview

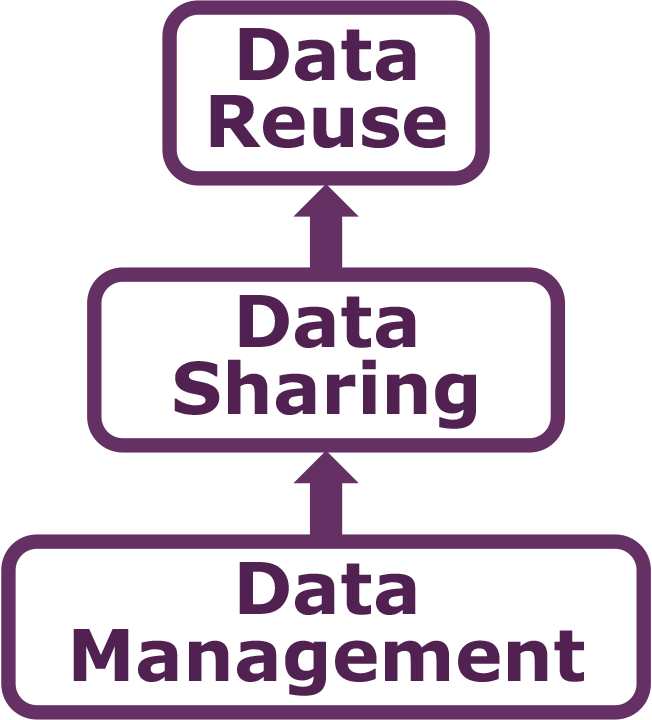

The data life cycle has 8 stages: Plan, Collect, Assure, Describe, Preserve, Discover, Integrate, and Analyze. In this section we will cover the following best practices that can help across all stages of the data life cycle:

- Organizing Data

- File Formats

- Large Data Packages

- Metadata

- Data Identifiers

- Provenance

- Licensing and Distribution

5.3.2.1 Organizing Data

We’ll spend an entire lesson later on organizing your data in a tidy and effective manner, but first, let’s focus on the benefits of having “clean” data and complete metadata.

- Decreases errors from redundant updates

- Enforces data integrity

- Helps you and future researchers to handle large, complex datasets

- Enables powerful search filtering

Much has been written on effective data management to enable reuse. The following two papers offer words of wisdom:

- Some simple guidelines for effective data management. Borer et al. 2009. Bulletin of the Ecological Society of America.

- Nine simple ways to make it easier to (re)use your data. White et al. 2013. Ideas in Ecology and Evolution 6.

In brief, some of the best practices to follow are:

- Have scripts for all data manipulation that start with the uncorrected raw data file and clean the data programmatically before analysis.

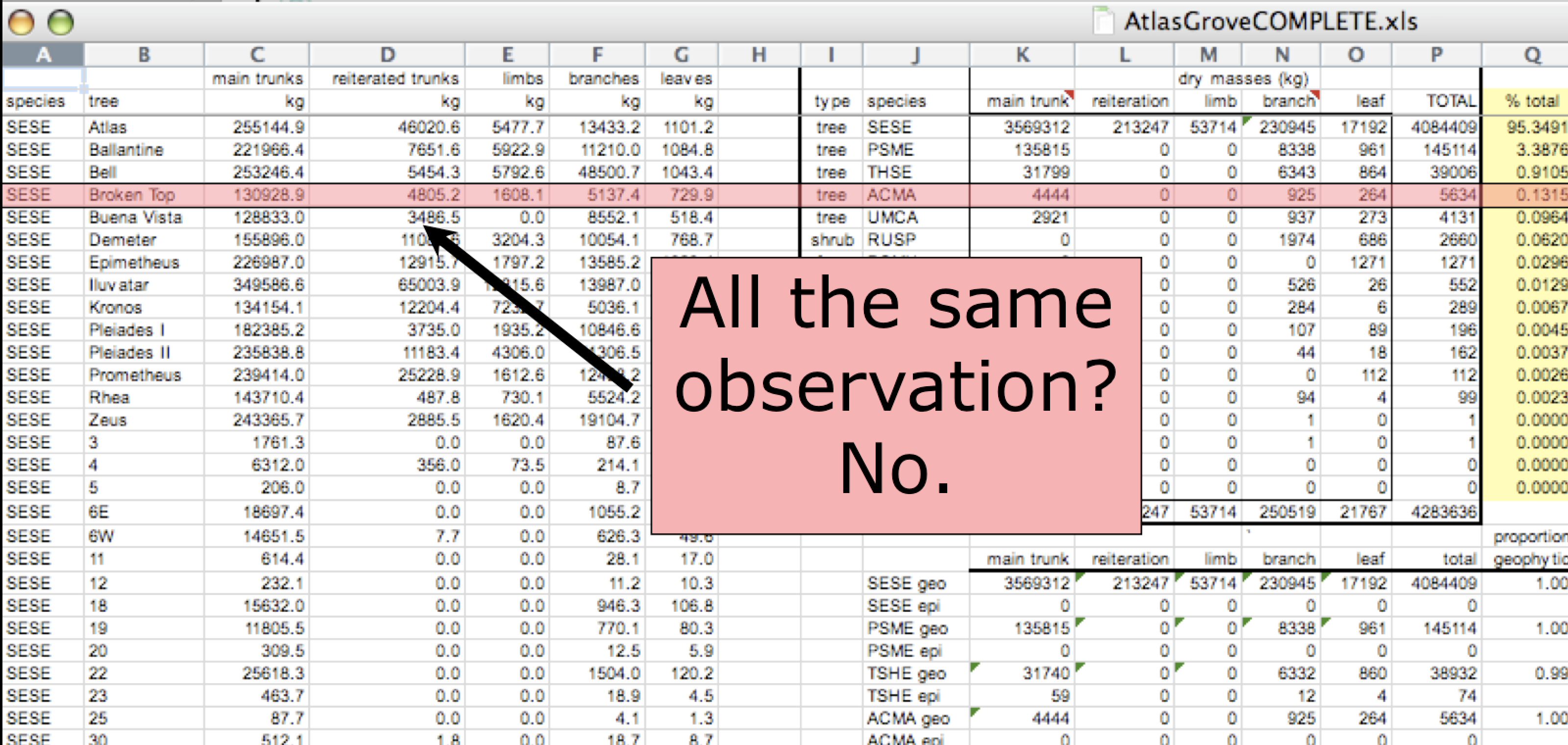

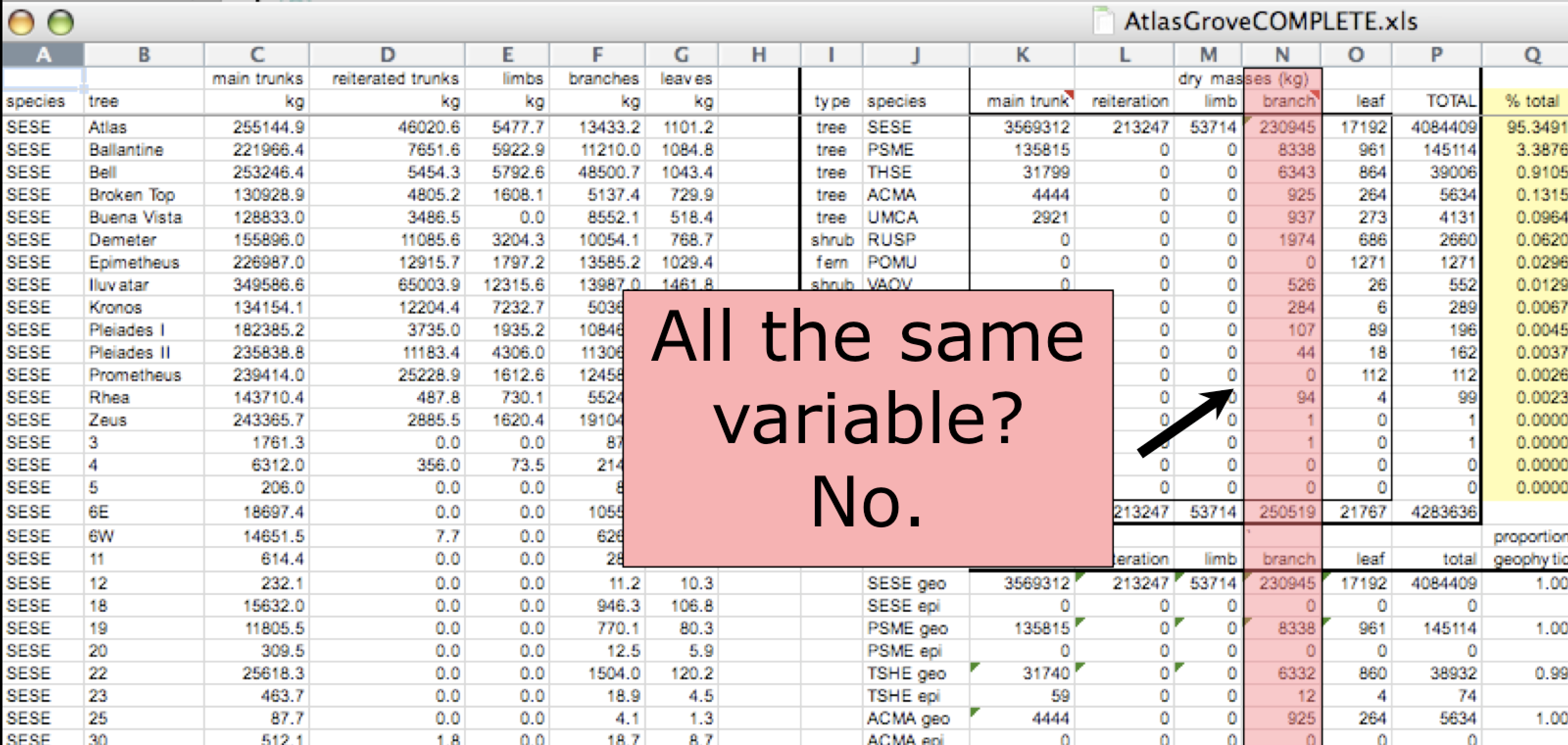

- Design your tables to add rows, not columns. A column should be only one variable and a row should be only one observation.

- Include header lines in your tables

- Use non-proprietary (i.e., open) file formats, with descriptive file names that contain no spaces.

Non-proprietary file formats are essential to ensure that your data can still be machine readable long into the future. Open formats include text files and binary formats such as NetCDF.

Common switches:

- Microsoft Excel (.xlsx) files - export to text (.txt) or comma separated values (.csv)

- GIS files - export to ESRI shapefiles (.shp)

- MATLAB/IDL - export to NetCDF
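Several of these practices can be sketched together in base R: the raw file is only read, never edited by hand; cleaning happens in code; a wide table with one column per year is reshaped so that each row is one observation; and the result is written as a CSV with a header line. The site names, counts, column names, the -999 missing-value code, and the file paths are all hypothetical placeholders.

```r
# Hypothetical raw data in "wide" form: one column per survey year.
raw_path <- file.path(tempdir(), "raw_counts.csv")
writeLines(c("site,count_2019,count_2020",
             "A,12,15",
             "B, 7,-999"), raw_path)

# 1. Read the untouched raw file; -999 is this sketch's assumed missing-value code.
raw <- read.csv(raw_path, na.strings = "-999", strip.white = TRUE)

# 2. Reshape to "long" form: add rows (one per site-year), not columns.
long <- data.frame(
  site  = rep(raw$site, times = 2),
  year  = rep(c(2019L, 2020L), each = nrow(raw)),
  count = c(raw$count_2019, raw$count_2020)
)

# 3. Write the cleaned, derived table to an open format with a header line.
clean_path <- file.path(tempdir(), "counts_long.csv")
write.csv(long, clean_path, row.names = FALSE)
```

Because every change lives in the script, adding a 2021 survey means adding rows to `long` rather than a new column, and the raw file remains available to rerun the whole cleaning step.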

When you have or are going to generate large data packages (in the terabytes or larger), it’s important to establish a relationship with the data center early on. The data center can help come up with a strategy to tile data structures by subset, such as by spatial region, by temporal window, or by measured variable. They can also help you choose an efficient storage format (e.g., NetCDF or HDF); these are compact data formats that support parallel reading and writing of data.
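The idea of tiling by temporal window can be illustrated at small scale with base R's `split()`, which divides a table by year so that each tile can be written to its own file. Real terabyte-scale data would be tiled in formats such as NetCDF or HDF with the data center's guidance; the CSV output, column names, values, and `obs_<year>.csv` naming scheme here are hypothetical.

```r
# Hypothetical observations spanning two years
obs <- data.frame(
  date  = as.Date(c("2019-06-01", "2019-07-15", "2020-06-03")),
  value = c(1.2, 3.4, 2.2)
)

# Split into one tile per year (a temporal window)
tiles <- split(obs, format(obs$date, "%Y"))

# Write each tile to its own file, e.g. obs_2019.csv, obs_2020.csv
out_dir <- tempdir()
tile_files <- vapply(names(tiles), function(yr) {
  path <- file.path(out_dir, paste0("obs_", yr, ".csv"))
  write.csv(tiles[[yr]], path, row.names = FALSE)
  path
}, character(1))
```

Splitting by spatial region or by measured variable follows the same pattern with a different grouping column.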

5.3.2.2 Metadata Guidelines

Metadata (data about data) is an important part of the data life cycle because it enables data reuse long after the original collection. Imagine that you’re writing your metadata for a typical researcher (who might even be you!) 30+ years from now - what will they need to understand what’s inside your data files?

The goal is to have enough information for the researcher to understand the data, interpret the data, and then re-use the data in another study.

Another way to think about it is to answer the following questions with the documentation:

- What was measured?

- Who measured it?

- When was it measured?

- Where was it measured?

- How was it measured?

- How is the data structured?

- Why was the data collected?

- Who should get credit for this data (researcher AND funding agency)?

- How can this data be reused (licensing)?

Bibliographic Details

The details that will help your data be cited correctly are:

- a global identifier like a digital object identifier (DOI);

- a descriptive title that includes information about the topic, the geographic location, the dates, and if applicable, the scale of the data;

- a descriptive abstract that serves as a brief overview of the specific contents and purpose of the data package;

- funding information like the award number and the sponsor;

- the people and organizations like the creator of the dataset (i.e., who should be cited), the person to contact about the dataset (if different from the creator), and the contributors to the dataset.

Discovery Details

The details that will help your data be discovered correctly are:

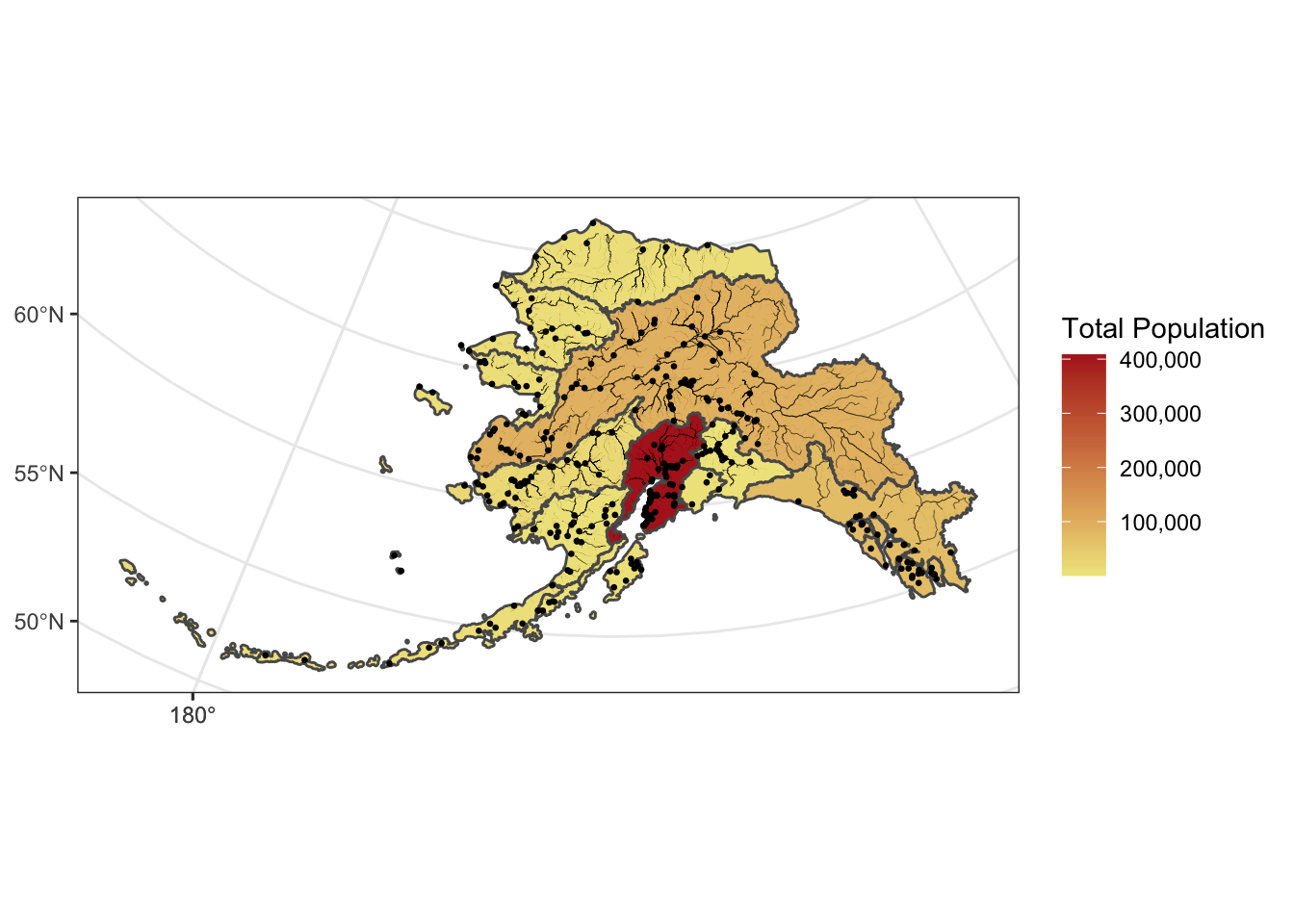

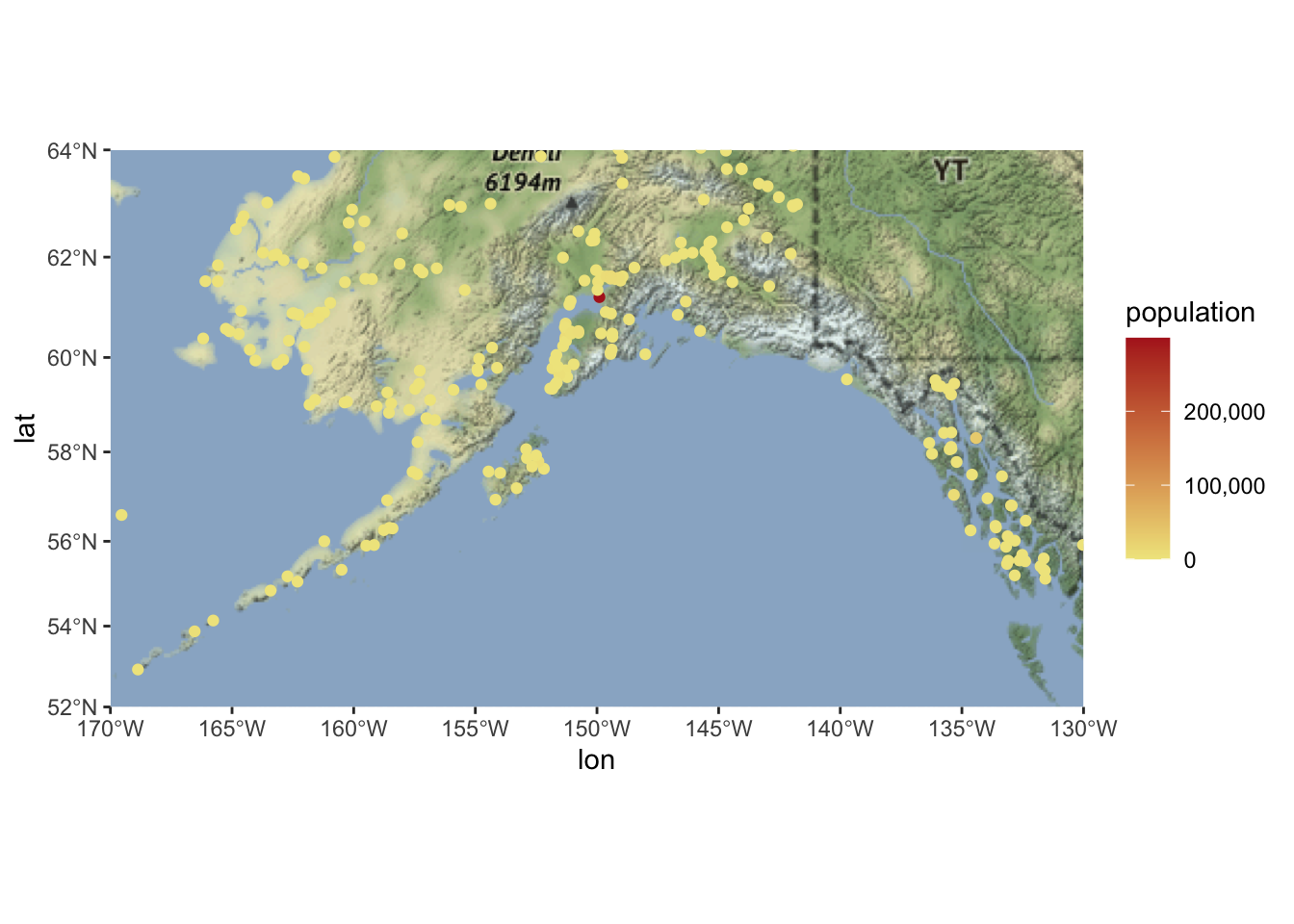

- the geospatial coverage of the data, including the field and laboratory sampling locations, place names and precise coordinates;

- the temporal coverage of the data, including when the measurements were made and what time period (ie the calendar time or the geologic time) the measurements apply to;

- the taxonomic coverage of the data, including what species were measured and what taxonomy standards and procedures were followed; as well as

- any other contextual information as needed.
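Coverage values stay accurate more easily when they are computed from the data rather than typed by hand. A minimal base R sketch, assuming hypothetical columns `lat`, `lon`, `date`, and `species` with made-up values, derives a bounding box, a date range, and a list of taxa:

```r
# Hypothetical sampling records (illustrative values only)
samples <- data.frame(
  lat     = c(64.8, 65.1, 64.9),
  lon     = c(-147.7, -148.2, -147.9),
  date    = as.Date(c("2005-05-20", "2006-06-02", "2007-05-28")),
  species = c("Oncorhynchus nerka", "Oncorhynchus nerka", "Oncorhynchus kisutch")
)

coverage <- list(
  # geospatial coverage as a bounding box
  west  = min(samples$lon), east  = max(samples$lon),
  south = min(samples$lat), north = max(samples$lat),
  # temporal coverage as the first and last sampling dates
  begin = min(samples$date), end = max(samples$date),
  # taxonomic coverage as the unique species measured
  taxa  = sort(unique(samples$species))
)
str(coverage)
```

These values can then be copied into whichever metadata standard the repository uses, and recomputed whenever new records are added.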

Interpretation Details

The details that will help your data be interpreted correctly are:

- the collection methods for both field and laboratory data;

- the full experimental and project design as well as how the data in the dataset fits into the overall project;

- the processing methods for both field and laboratory samples IN FULL;

- all sample quality control procedures;

- the provenance information to support your analysis and modelling methods;

- information about the hardware and software used to process your data, including the make, model, and version; and

- the computing quality control procedures like any testing or code review.

Data Structure and Contents

Well-constructed metadata also includes information about the data structure and contents. Everything needs a description: the data model, the data objects (like tables, images, matrices, spatial layers, etc.), and the variables all need to be described so that there is no room for misinterpretation.

Variable information includes the definition of a variable, a standardized unit of measurement, definitions of any coded values (such as 0 = not collected), and any missing values (such as 999 = NA).
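Variable definitions, units, coded values, and missing-value codes can also be kept in a small attribute table stored alongside the data and applied in code. The sketch below reuses the 0 = not collected and 999 = NA conventions from the text; the variable names, definitions, units, and values are hypothetical.

```r
# Hypothetical attribute table: one row per variable
attr_table <- data.frame(
  name       = c("water_temp", "sample_status"),
  definition = c("Water temperature at 1 m depth", "Whether a sample was collected"),
  unit       = c("degree Celsius", NA),
  missing    = c(999, NA)
)

# Codes for the categorical variable (0 = not collected)
status_codes <- c("0" = "not collected", "1" = "collected")

obs_data <- data.frame(water_temp = c(4.5, 999, 6.1), sample_status = c(1, 0, 1))

# Replace each variable's declared missing-value code with NA
for (i in seq_len(nrow(attr_table))) {
  code <- attr_table$missing[i]
  if (!is.na(code)) {
    col <- attr_table$name[i]
    obs_data[[col]][obs_data[[col]] == code] <- NA
  }
}

# Translate coded values into their documented labels
obs_data$sample_status <- unname(status_codes[as.character(obs_data$sample_status)])
```

Keeping the codes in a table rather than scattered through scripts means the same definitions can be published as metadata and applied during analysis.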

Not only is this information helpful to you and any other researcher in the future using your data, but it is also helpful to search engines. The semantics of your dataset are crucial to ensure your data is both discoverable by others and interoperable (that is, reusable).

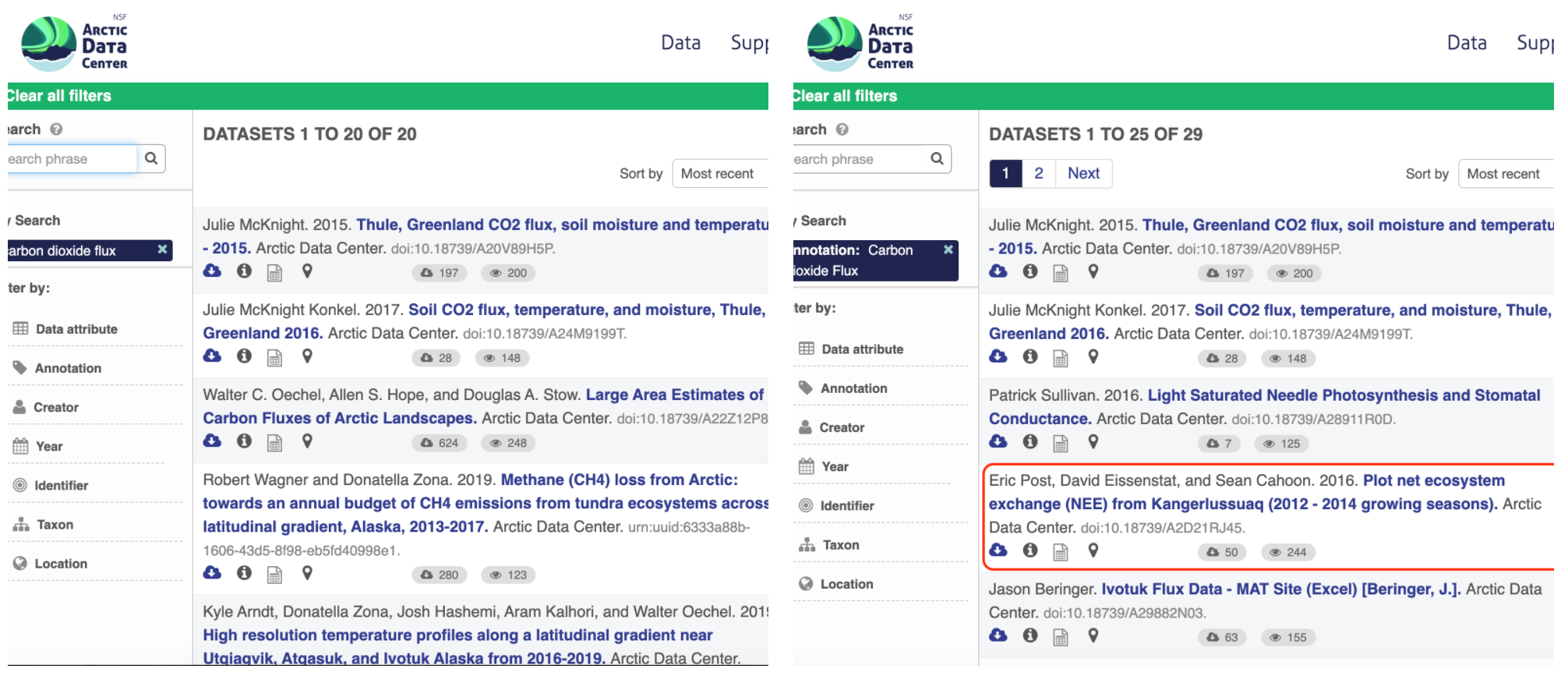

For example, if you were to search for the character string carbon dioxide flux in the general search box at the Arctic Data Center, not all relevant results would be shown, because vocabulary conventions vary across disciplines (e.g., CO2 flux instead of carbon dioxide flux); only datasets containing the exact words carbon dioxide flux are returned. With correct semantic annotation of the variables, your dataset that includes information about carbon dioxide flux but calls it CO2 flux WOULD be included in that search.

(Left) A typical search for “carbon dioxide flux” yields 20 datasets. (Right) An annotated search for “carbon dioxide flux” yields 29 datasets. Note that if you were to interact with the site and explore the results of the figure on the right, the dataset in red of Figure 3 would not appear in the typical search for “carbon dioxide flux.”
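The difference between the two searches can be illustrated with a toy example: exact string matching misses a variable labeled "CO2 flux", while matching on a shared annotation finds it. The concept identifier `concept:carbon_dioxide_flux` and the dataset names are hypothetical stand-ins for a real ontology term and real catalog entries; this is not how the Arctic Data Center search is implemented.

```r
# Hypothetical catalog: each dataset has a free-text variable label and,
# optionally, a semantic annotation pointing to a shared concept.
catalog <- data.frame(
  dataset    = c("ds1", "ds2", "ds3"),
  label      = c("carbon dioxide flux", "CO2 flux", "air temperature"),
  annotation = c("concept:carbon_dioxide_flux", "concept:carbon_dioxide_flux", NA)
)

# Typical search: the exact words must appear in the label
exact_hits <- catalog$dataset[grepl("carbon dioxide flux", catalog$label, fixed = TRUE)]

# Annotated search: match on the concept each label is annotated with
annotated_hits <- catalog$dataset[catalog$annotation %in% "concept:carbon_dioxide_flux"]
```

Here `exact_hits` contains only "ds1", while `annotated_hits` contains both "ds1" and "ds2".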

Rights and Attribution

Correctly assigning a way for your datasets to be cited and reused is the last piece of a complete metadata document. This section sets the scientific rights and expectations for the future on your data, like:

- the citation format to be used when giving credit for the data;

- the attribution expectations for the dataset;

- the reuse rights, which describe who may use the data and for what purpose;

- the redistribution rights, which describe who may copy and redistribute the metadata and the data; and

- the legal terms and conditions like how the data are licensed for reuse.

So, how do you organize all this information? There are a number of metadata standards (think, templates) that you could use, including the Ecological Metadata Language (EML), Geospatial Metadata Standards like ISO 19115 and ISO 19139, the Biological Data Profile (BDP), Dublin Core, Darwin Core, PREMIS, the Metadata Encoding and Transmission Standard (METS), and the list goes on and on. The Arctic Data Center runs on EML.

5.3.3 Data Identifiers

Many journals require that a DOI (digital object identifier) be assigned to the published data before the paper can be accepted for publication, so that the data can easily be found and linked to.
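Because a DOI is a persistent identifier, it can be turned into a resolvable link by prefixing the https://doi.org/ resolver. A minimal sketch, using the KNB dataset DOI that appears in the metadata example later in this chapter; the helper name `doi_to_url` and its simple format check are hypothetical.

```r
# Hypothetical helper: normalize DOI strings to https://doi.org/ links.
doi_to_url <- function(doi) {
  # strip common prefixes such as "doi:" or an existing resolver URL
  bare <- sub("^(doi:|https?://(dx\\.)?doi\\.org/)", "", doi, ignore.case = TRUE)
  # a DOI starts with "10." followed by a registrant prefix, a slash, and a suffix
  if (!all(grepl("^10\\.[0-9]+/.+", bare))) stop("not a DOI: ", doi)
  paste0("https://doi.org/", bare)
}

doi_to_url("doi:10.5063/F1F18WN4")
```

Storing the bare DOI and generating links on demand keeps citations consistent even if a page shows the identifier in different forms.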

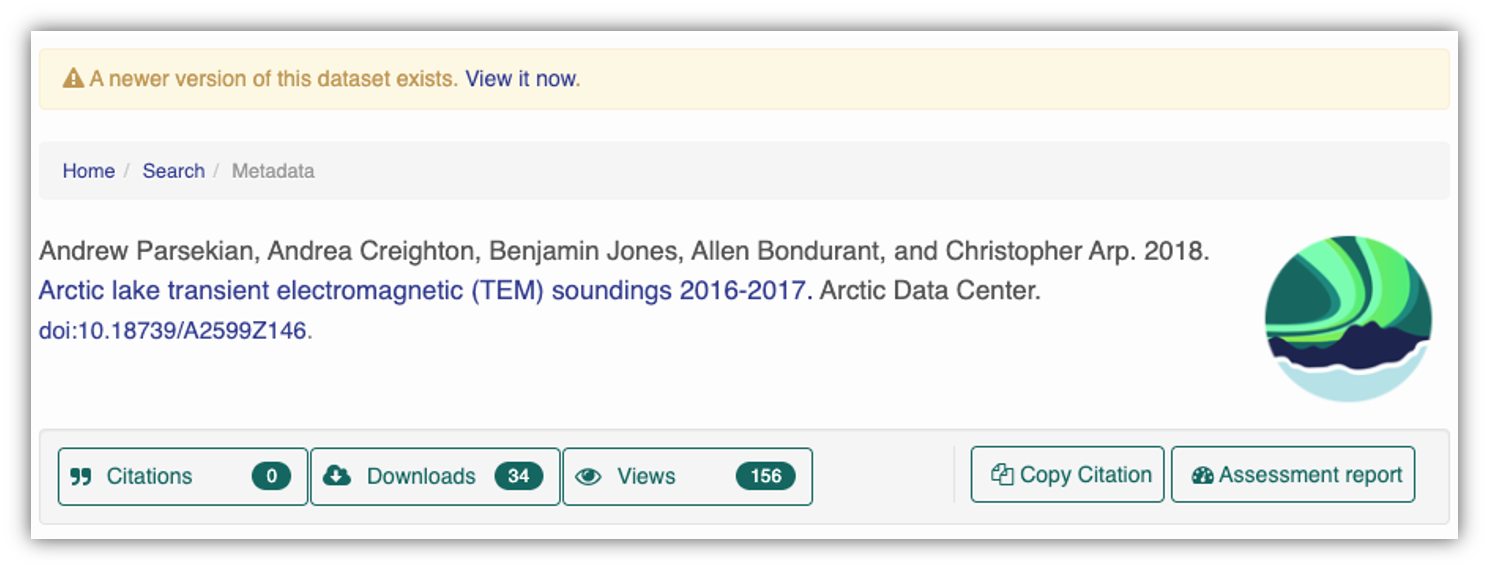

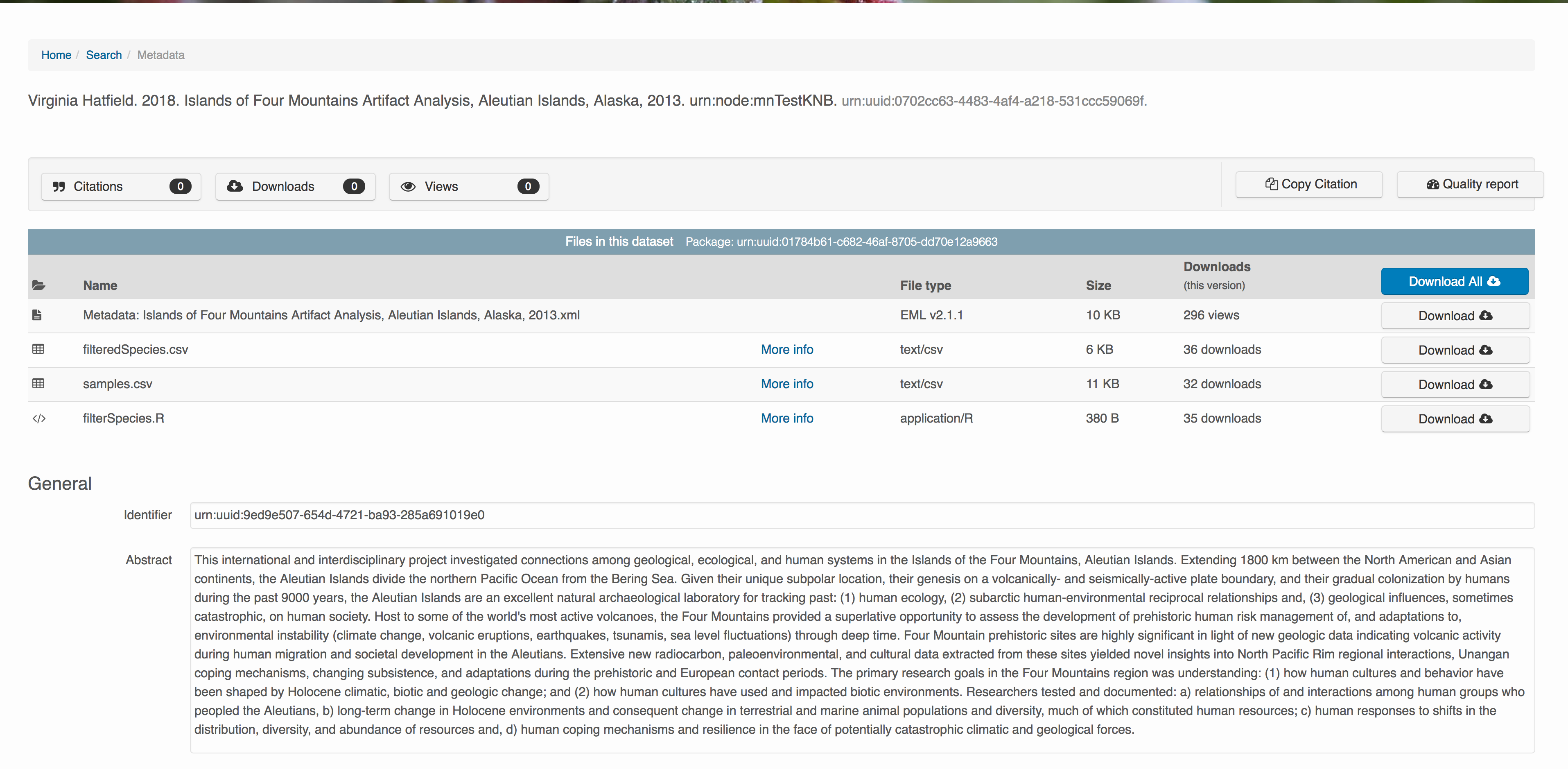

At the Arctic Data Center, we assign a DOI to each published dataset. But, sometimes datasets need to be updated. Each version of a dataset published with the Arctic Data Center has a unique identifier associated with it. Researchers should cite the exact version of the dataset that they used in their analysis, even if there is a newer version of the dataset available. When a newer version is available, it will be clearly marked on the original dataset page with a yellow banner.

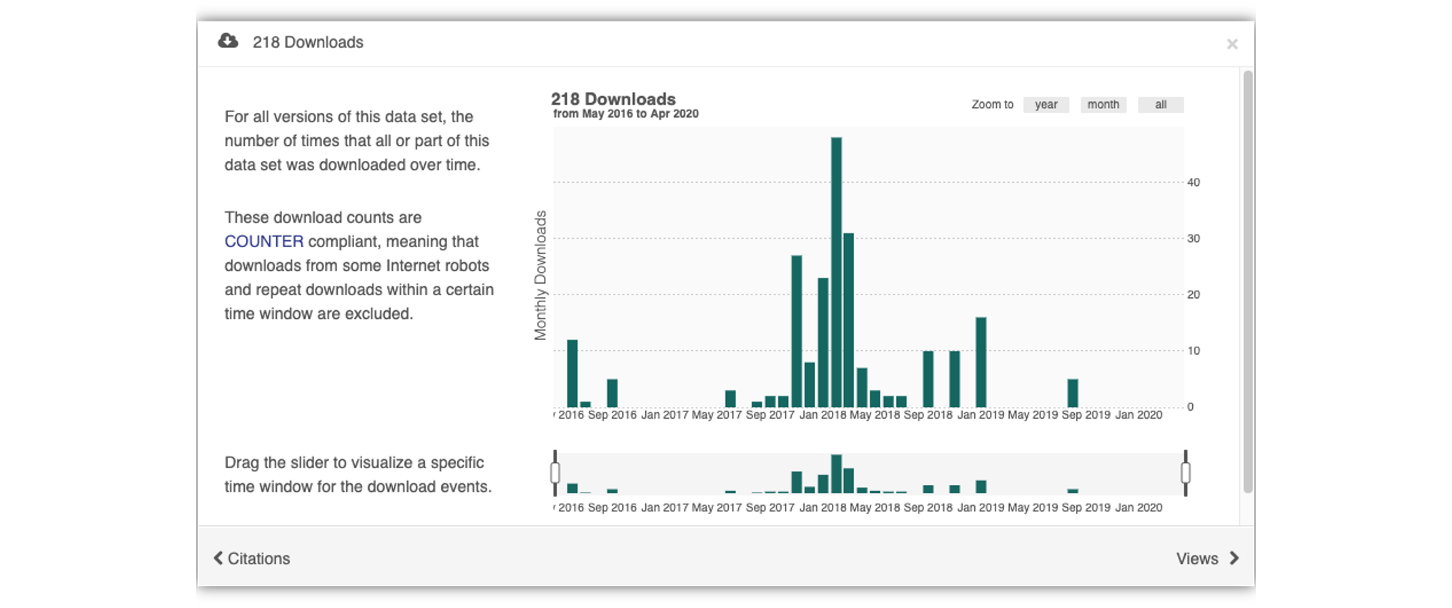

Having the data identified in this manner allows us to accurately track the dataset usage metrics. The Arctic Data Center tracks the number of citations, the number of downloads, and the number of views of each dataset in the catalog.

We filter out most views by internet bots and repeat views within a small time window in order to make these metrics COUNTER compliant. COUNTER is a standard that libraries and repositories use to provide users with consistent, credible, and comparable usage data.
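The kind of filtering described above can be sketched in a few lines of base R: drop requests whose user agent looks like a bot, then count a repeat view of the same dataset by the same user only once when it falls within a short window of that user's previous view. The 30-second window, the bot pattern, and the log columns are assumptions for illustration; the COUNTER Code of Practice defines the actual rules.

```r
# Hypothetical view log: one row per page view
views <- data.frame(
  user    = c("u1", "u1", "u1", "u2", "crawler"),
  dataset = c("d1", "d1", "d1", "d1", "d1"),
  agent   = c("Firefox", "Firefox", "Firefox", "Chrome", "Googlebot"),
  time    = as.POSIXct("2020-01-01 10:00:00", tz = "UTC") + c(0, 10, 120, 5, 20)
)

count_views <- function(views, window_secs = 30, bot_pattern = "bot|crawler|spider") {
  # 1. remove views whose user agent looks like a bot
  v <- views[!grepl(bot_pattern, views$agent, ignore.case = TRUE), ]
  # 2. order by user, dataset and time so repeat views are adjacent
  v <- v[order(v$user, v$dataset, v$time), ]
  # 3. a view repeats the previous one if same user and dataset within the window
  n <- nrow(v)
  same_pair <- c(FALSE, v$user[-1] == v$user[-n] & v$dataset[-1] == v$dataset[-n])
  gap <- c(Inf, diff(as.numeric(v$time)))
  keep <- !(same_pair & gap <= window_secs)
  table(v$dataset[keep])
}

count_views(views)
```

In this log the bot view is dropped and user u1's second view, 10 seconds after the first, is not counted, leaving three views of d1.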

5.3.4 Data Citation

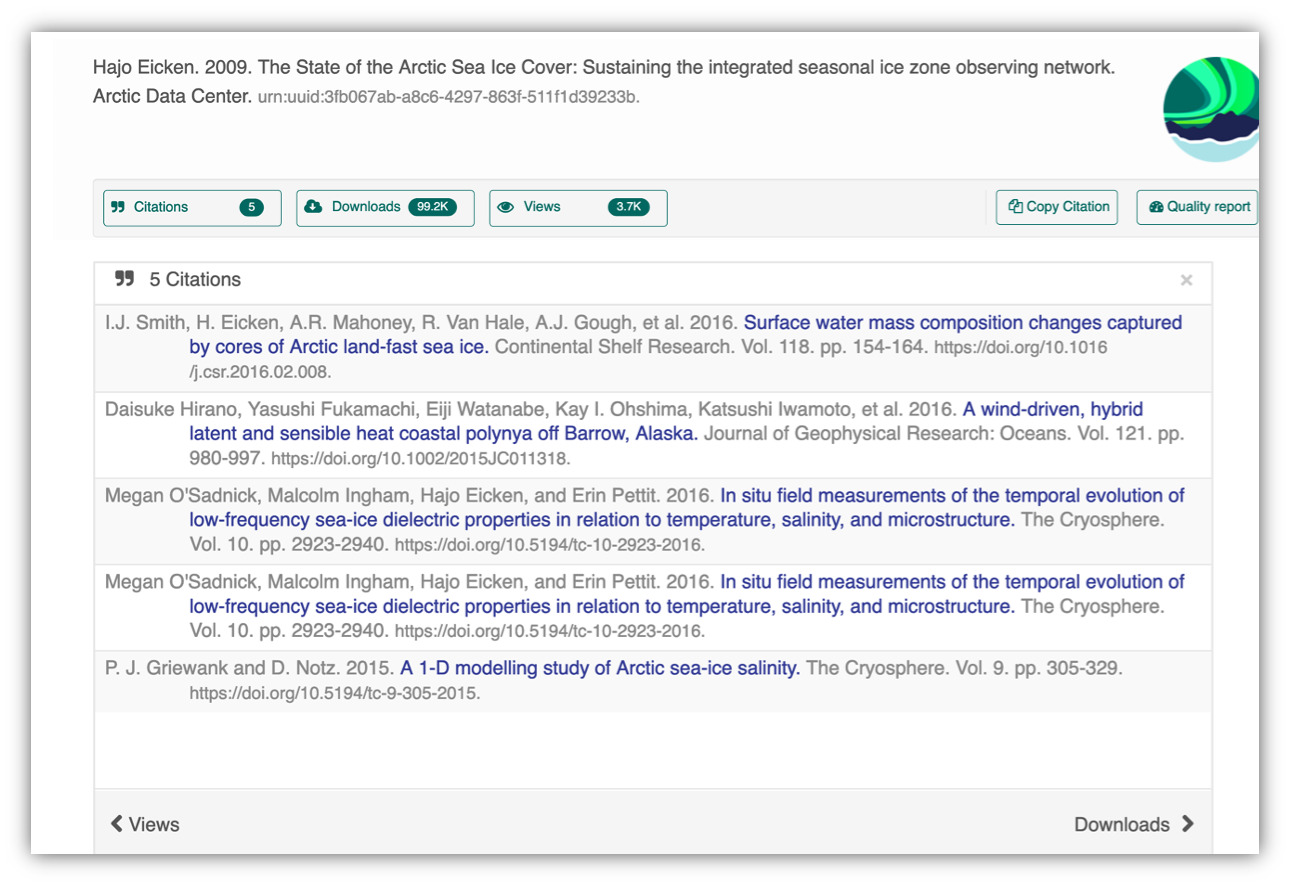

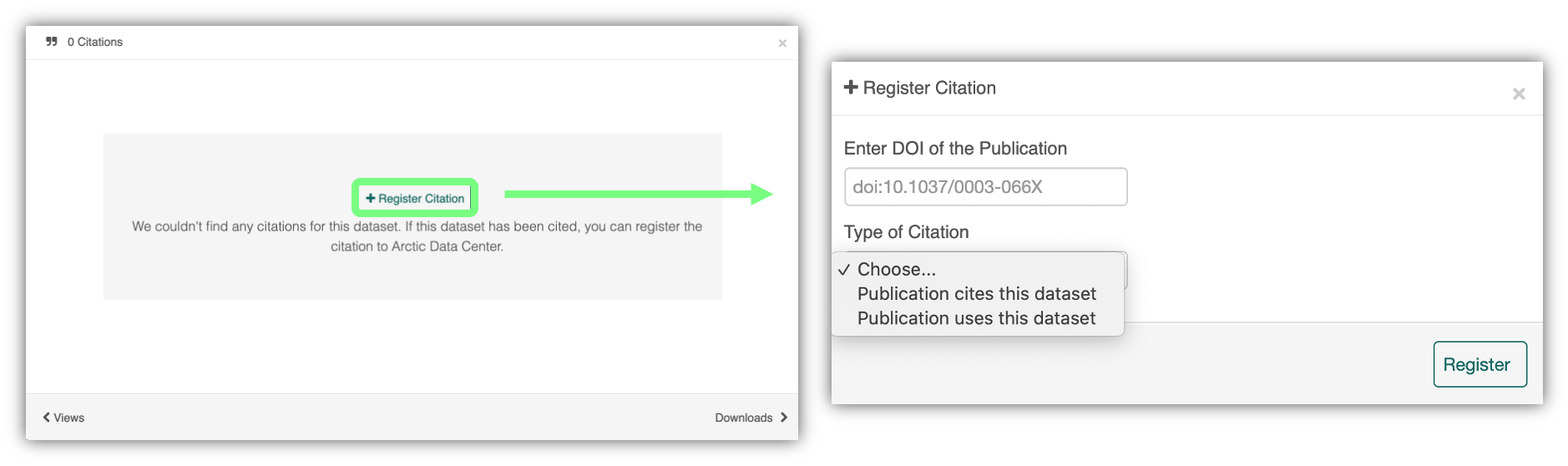

Researchers should get in the habit of citing the data that they use - even if it’s their own data! - in each publication that uses that data. The Arctic Data Center has taken multiple steps towards providing data citation information for all datasets we hold in our catalog, including a feature enabling dataset owners to directly register citations to their datasets.
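A data citation typically combines the creators, publication year, title, repository, and a resolvable, version-specific identifier. As a hedged sketch, the hypothetical helper below assembles such a string; the field order follows a common author-year pattern rather than any particular repository's required format, and all values in the usage example are placeholders.

```r
# Hypothetical helper: build a citation string for a specific dataset version.
cite_dataset <- function(creators, year, title, repository, doi) {
  paste0(paste(creators, collapse = ", "), ". ", year, ". ", title, ". ",
         repository, ". https://doi.org/", doi)
}

# Placeholder values only
cite_dataset(
  creators   = c("Jane Doe", "Richard Roe"),
  year       = 2020,
  title      = "Example dataset title",
  repository = "Arctic Data Center",
  doi        = "10.xxxx/example"
)
```

Using the DOI of the exact version you analyzed keeps the citation pointing at the data behind your results, even after newer versions appear.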

We recently implemented this “Register Citation” feature to allow researchers to register known citations to their datasets. Researchers may register a citation for any occasions where they know a certain publication uses or refers to a certain dataset, and the citation will be viewable on the dataset profile within 24 hours.

To register a citation, navigate to the dataset using its DOI and click on the citations tab. There you’ll find the option to register a citation with us. Click that button and a dialog box with a very simple form will pop up, asking for the DOI of the paper and whether the paper CITES the dataset (that is, the dataset is explicitly identified or linked to somewhere in the text or references) or USES the dataset (that is, uses the dataset but doesn’t formally cite it).

We encourage you to make this part of your workflow, and for you to let your colleagues know about it too!

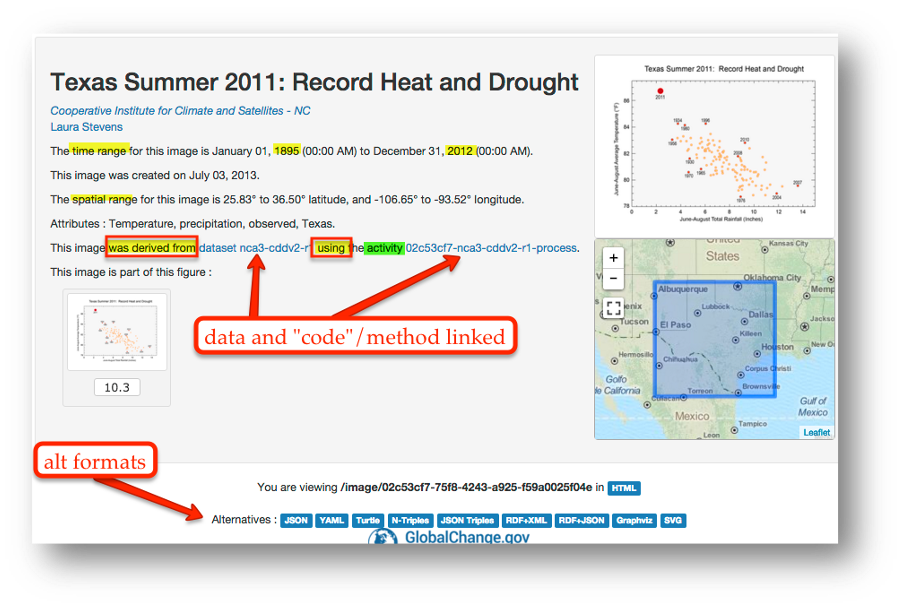

5.3.5 Provenance & Preserving Computational Workflows

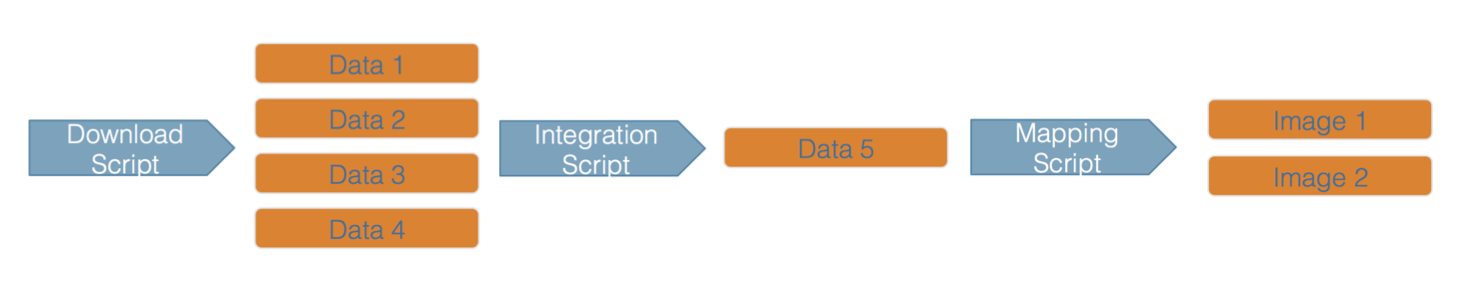

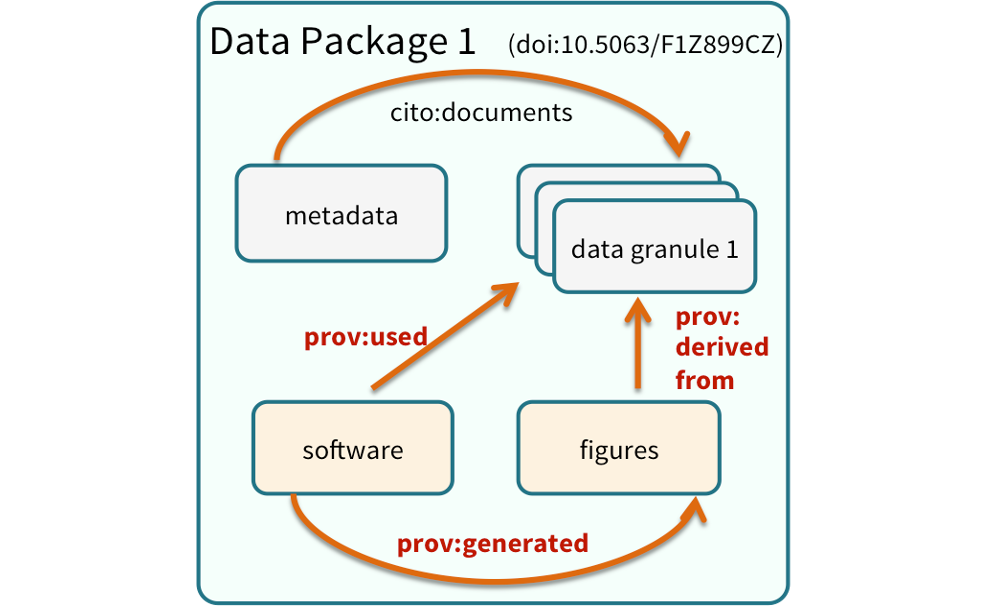

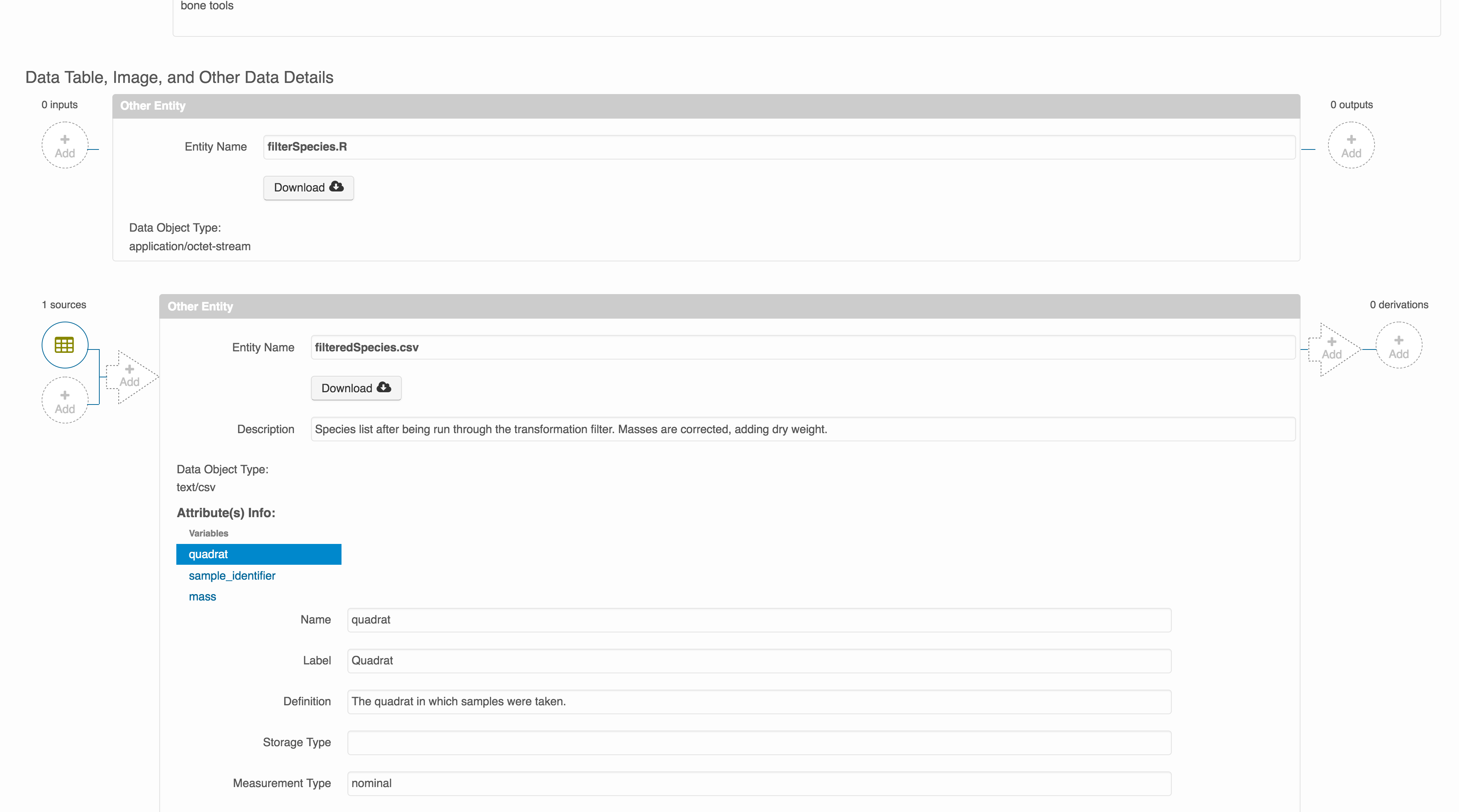

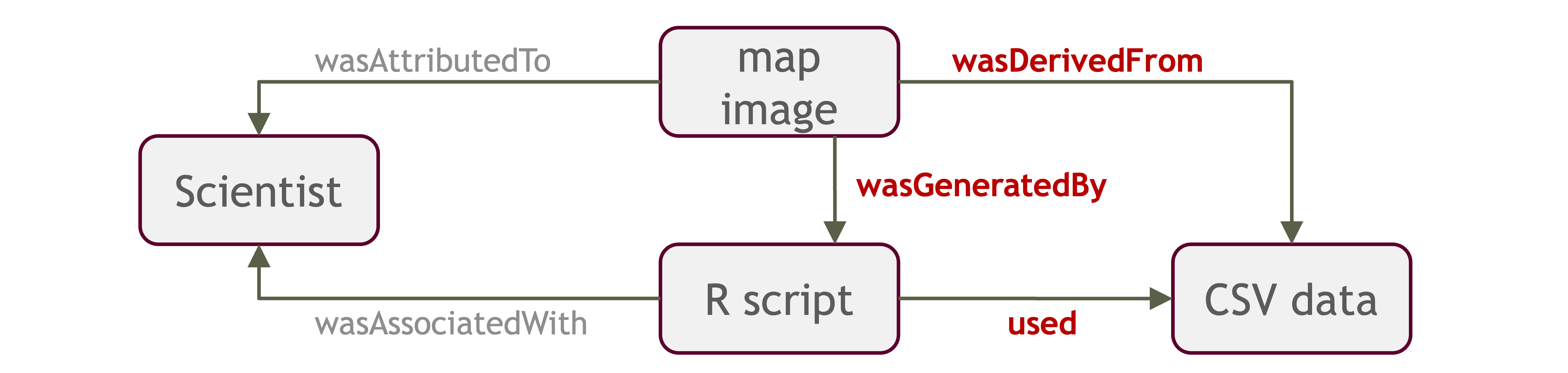

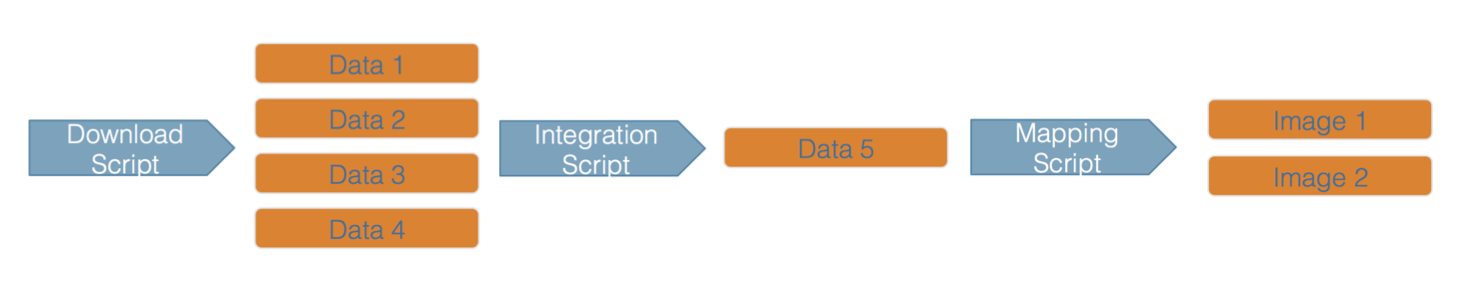

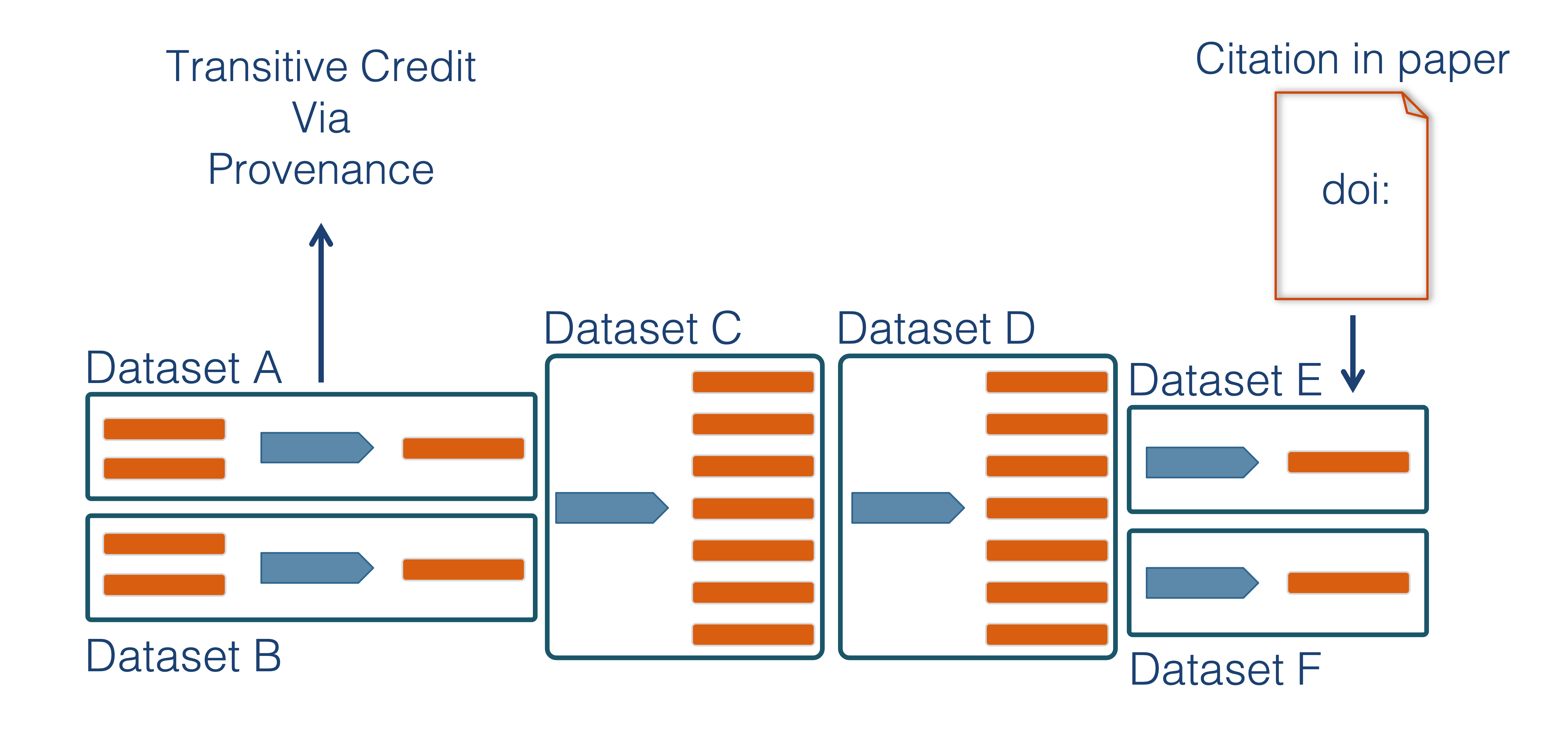

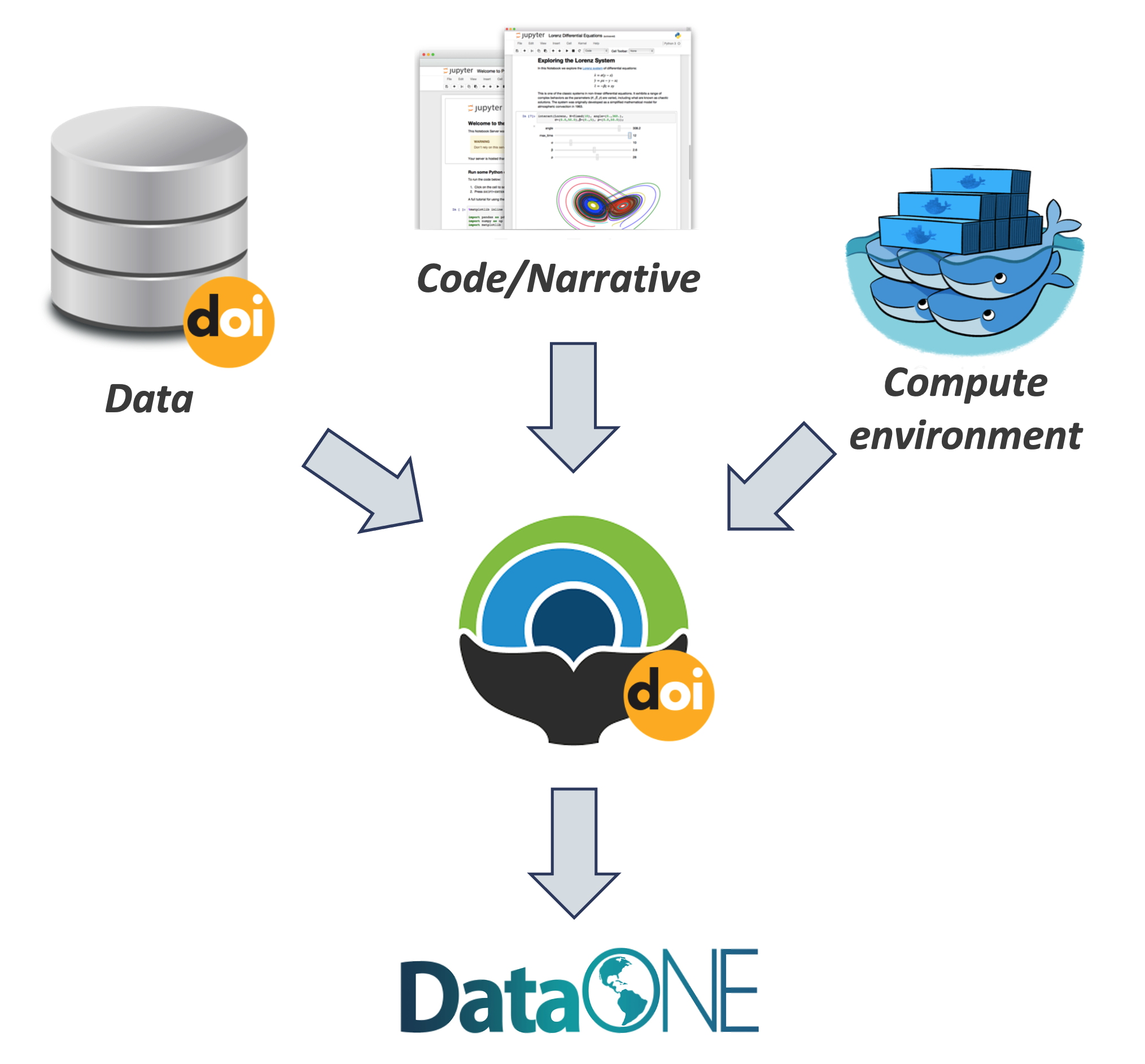

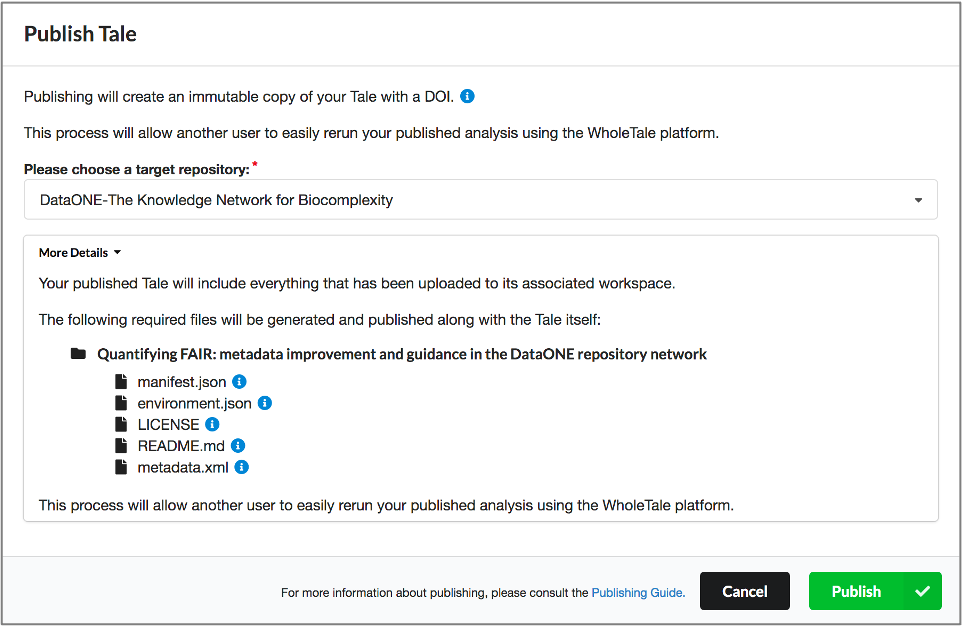

While the Arctic Data Center, Knowledge Network for Biocomplexity, and similar repositories do focus on preserving data, we really set our sights much more broadly on preserving entire computational workflows that are instrumental to advances in science. A computational workflow represents the sequence of computational tasks that are performed from raw data acquisition through data quality control, integration, analysis, modeling, and visualization.

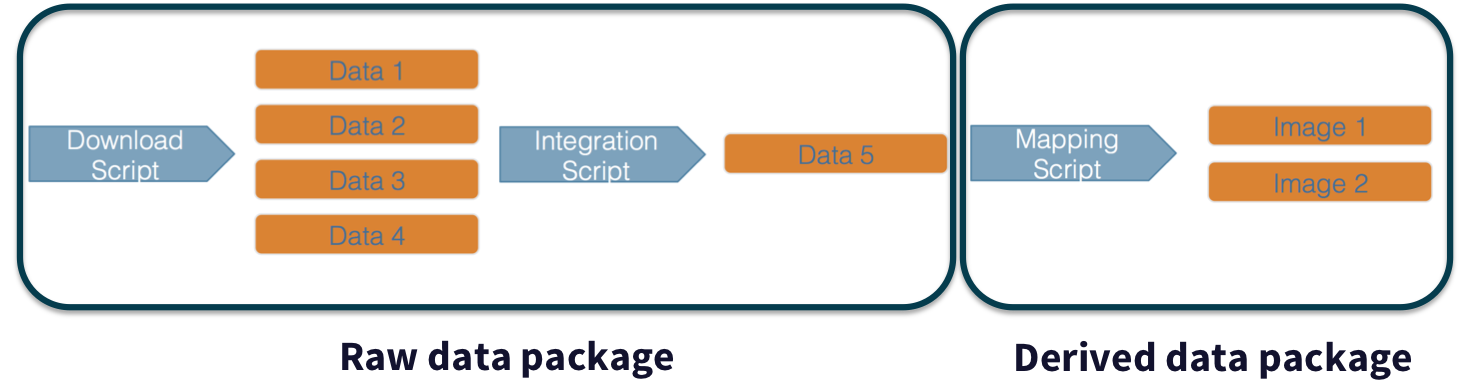

In addition, these workflows are often not executed all at once, but rather are divided into multiple workflows, each with its own purpose. For example, a data acquisition and cleaning workflow often creates a derived and integrated data product that is then picked up and used by multiple downstream analytical workflows that produce specific scientific findings. These workflows can each be archived as distinct data packages, with the output of the first workflow becoming the input of the second and subsequent workflows.
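The hand-off between workflows can be sketched as two R functions: a cleaning step that writes a derived data product, and an analysis step that reads only that product. The function names, file name, and fish-length data are hypothetical; in practice each step would live in its own script and could be archived in its own data package.

```r
# Workflow 1 (hypothetical): clean raw data and write a derived product
clean_workflow <- function(raw, derived_path) {
  derived <- raw[!is.na(raw$length_mm), ]        # drop records with no measurement
  derived$length_cm <- derived$length_mm / 10    # add a derived variable
  write.csv(derived, derived_path, row.names = FALSE)
  derived_path
}

# Workflow 2 (hypothetical): analysis that starts from the derived product only
analysis_workflow <- function(derived_path) {
  derived <- read.csv(derived_path)
  mean(derived$length_cm)
}

raw <- data.frame(fish_id = 1:4, length_mm = c(520, NA, 480, 610))
derived_file <- clean_workflow(raw, file.path(tempdir(), "derived_lengths.csv"))
result <- analysis_workflow(derived_file)
```

Because the analysis depends only on the derived file, several downstream workflows can reuse the same cleaned product without repeating the cleaning.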

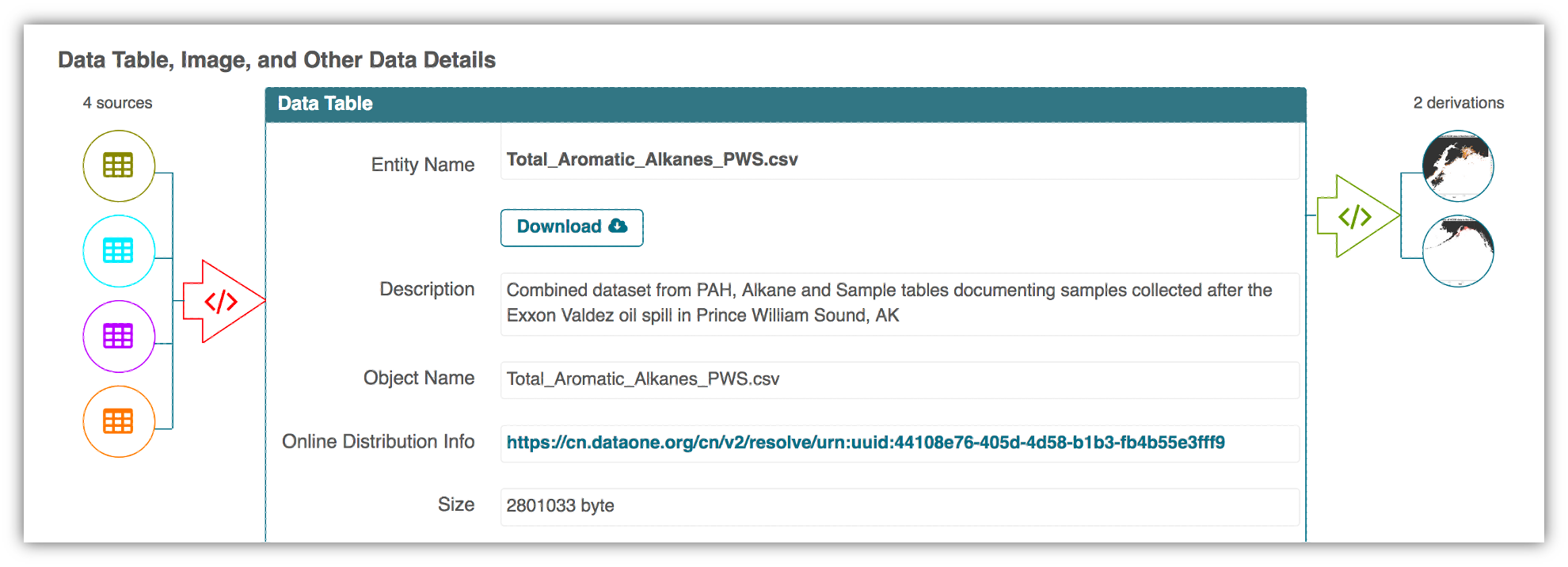

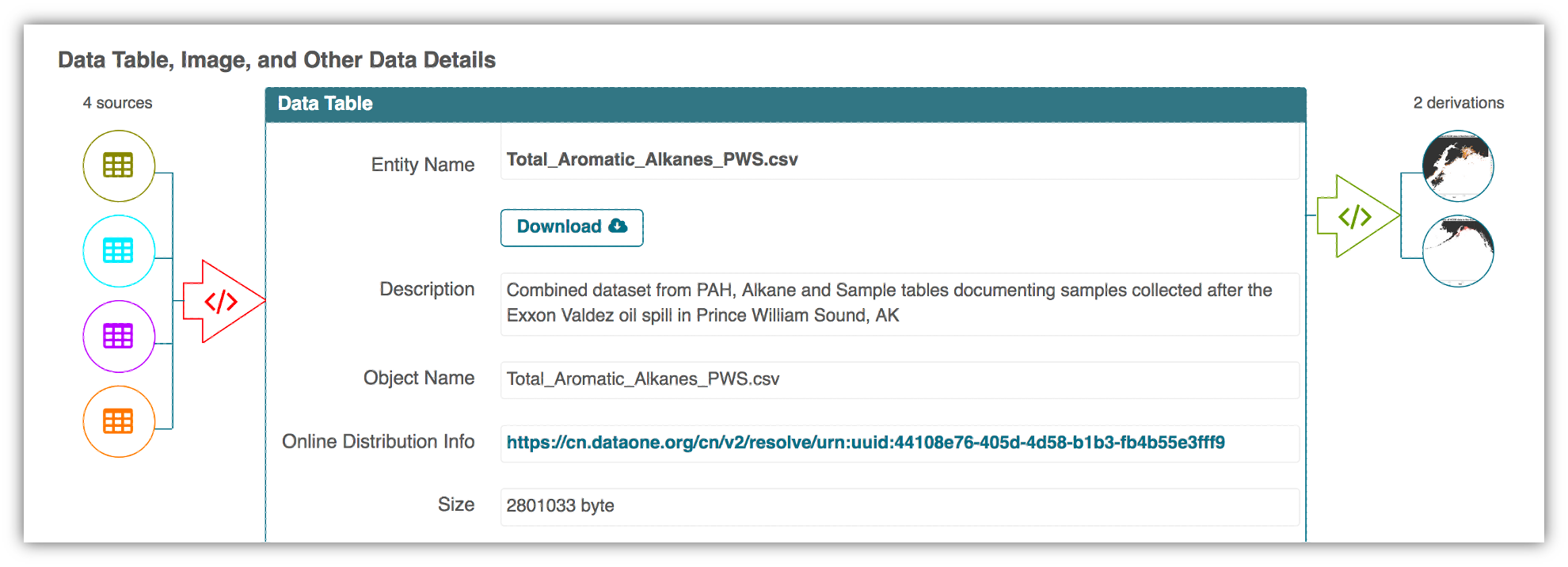

In an effort to make data more reproducible, datasets also support provenance tracking. With provenance tracking, users of the Arctic Data Center can see exactly what datasets led to what product, using the particular script or workflow that the researcher used.

This is a useful tool to make data more compliant with the FAIR principles. In addition to making data more reproducible, it is also useful for building on the work of others; you can produce similar visualizations for another location, for example, using the same code.

R Markdown itself can be used as a provenance tool as well: by starting with the raw data and cleaning it programmatically, rather than manually, you preserve the steps that you went through, and your workflow is reproducible.
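A lightweight way to capture provenance from a script is to record which input files, which script, and which outputs were involved in a run, along with checksums that show whether any file has since changed. The sketch below uses base R's `tools::md5sum()`; the record layout, the function name `provenance_record`, and the file names are hypothetical and do not represent the Arctic Data Center's provenance model.

```r
# Hypothetical helper: describe one run of a script as a provenance record.
provenance_record <- function(inputs, script, outputs) {
  files <- c(inputs, script, outputs)
  data.frame(
    role = c(rep("input", length(inputs)), "script", rep("output", length(outputs))),
    file = basename(files),
    md5  = unname(tools::md5sum(files)),   # checksum identifies exact file contents
    time = format(Sys.time(), tz = "UTC", usetz = TRUE)
  )
}

# Example run with temporary files standing in for real data and code
work_dir <- tempdir()
input  <- file.path(work_dir, "raw.csv");   writeLines("x\n1\n2", input)
script <- file.path(work_dir, "clean.R");   writeLines("# cleaning script", script)
output <- file.path(work_dir, "clean.csv"); writeLines("x\n1", output)

prov <- provenance_record(input, script, output)
```

Saving `prov` alongside the outputs lets a later reader check, file by file, that they are looking at the same inputs and code that produced a result.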

5.4 Data Documentation and Publishing

5.4.1 Learning Objectives

In this lesson, you will learn:

- About open data archives

- What science metadata is and how it can be used

- How data and code can be documented and published in open data archives

5.4.2 Data sharing and preservation

5.4.3 Data repositories: built for data (and code)

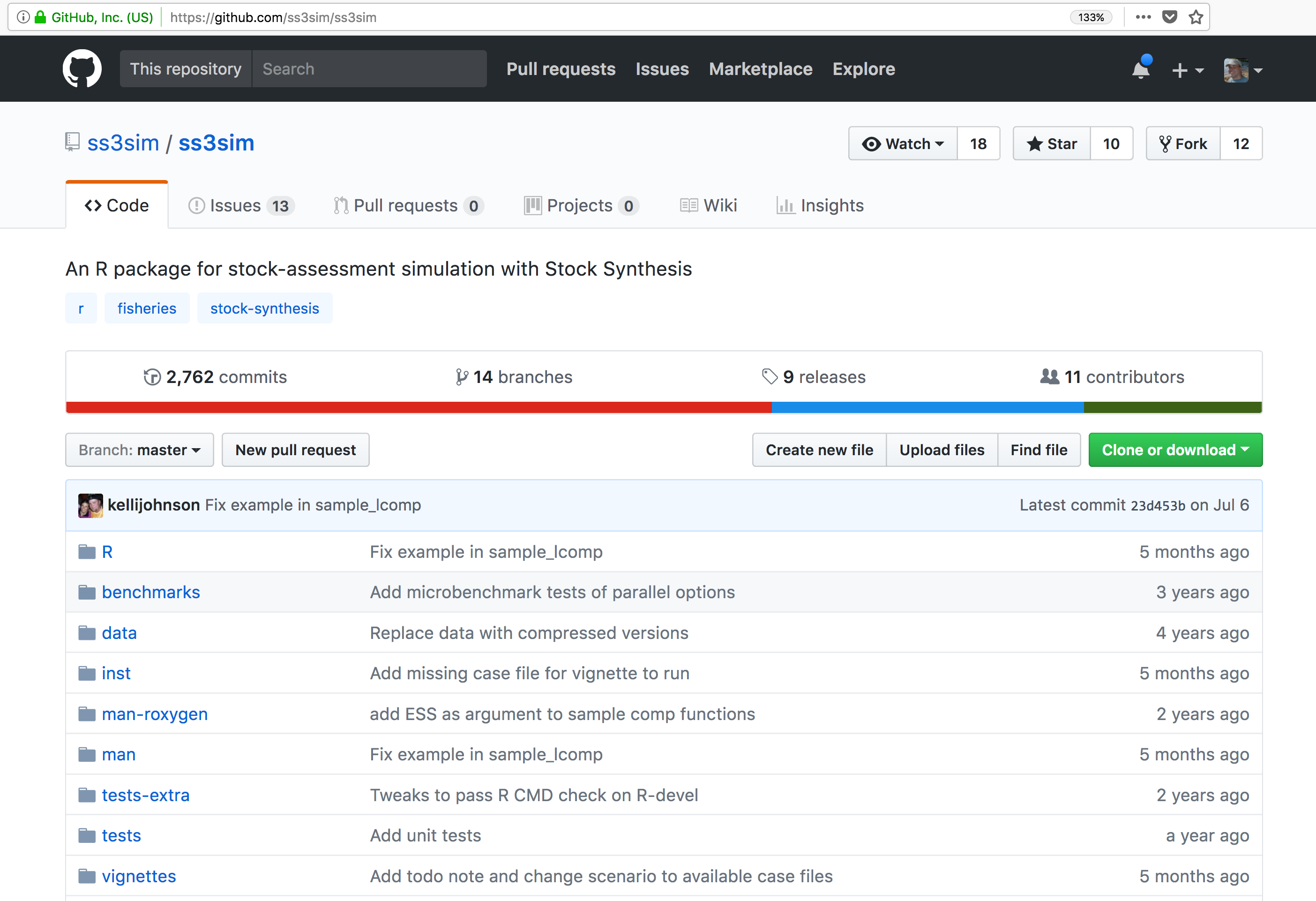

- GitHub is not an archival location

- Dedicated data repositories: NEON, KNB, Arctic Data Center, Zenodo, FigShare

- Rich metadata

- Archival in their mission

- Data papers, e.g., Scientific Data

- List of data repositories: http://re3data.org

5.4.4 Metadata

Metadata are documentation describing the content, context, and structure of data to enable future interpretation and reuse of the data. Generally, metadata describe who collected the data, what data were collected, when and where they were collected, and why they were collected.

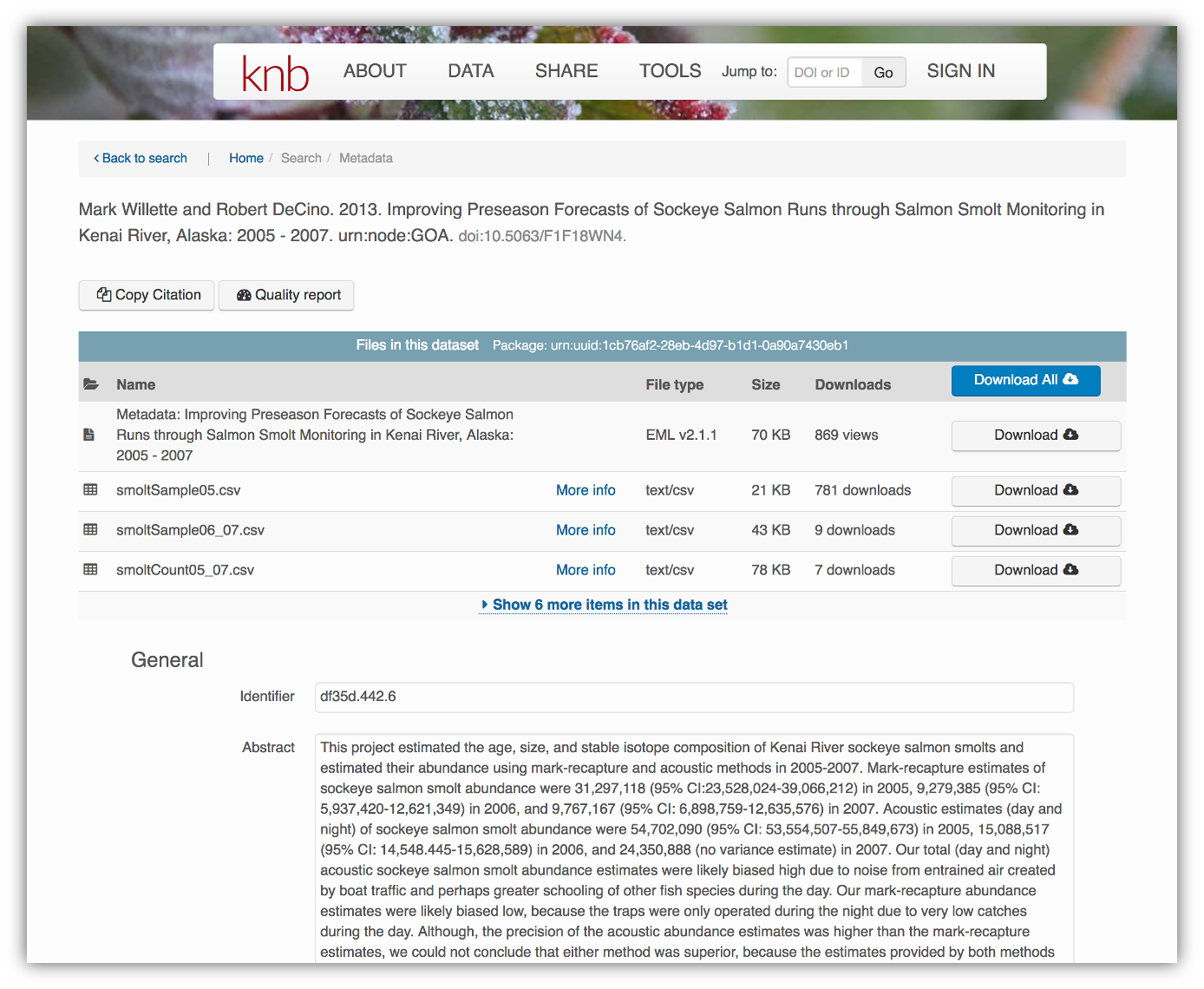

For consistency, metadata are typically structured following metadata content standards such as the Ecological Metadata Language (EML). For example, here’s an excerpt of the metadata for a sockeye salmon data set:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<eml:eml packageId="df35d.442.6" system="knb"
    xmlns:eml="eml://ecoinformatics.org/eml-2.1.1">
  <dataset>
    <title>Improving Preseason Forecasts of Sockeye Salmon Runs through
      Salmon Smolt Monitoring in Kenai River, Alaska: 2005 - 2007</title>
    <creator id="1385594069457">
      <individualName>
        <givenName>Mark</givenName>
        <surName>Willette</surName>
      </individualName>
      <organizationName>Alaska Department of Fish and Game</organizationName>
      <positionName>Fishery Biologist</positionName>
      <address>
        <city>Soldotna</city>
        <administrativeArea>Alaska</administrativeArea>
        <country>USA</country>
      </address>
      <phone phonetype="voice">(907)260-2911</phone>
      <electronicMailAddress>mark.willette@alaska.gov</electronicMailAddress>
    </creator>
    ...
  </dataset>
</eml:eml>
```

That same metadata document can be converted to HTML format and displayed in a much more readable form on the web: https://knb.ecoinformatics.org/#view/doi:10.5063/F1F18WN4

As you can see on that page, the whole data set or its components can be downloaded and reused.


Also note that the repository tracks how many times each file has been downloaded, which gives great feedback to researchers on the activity for their published data.

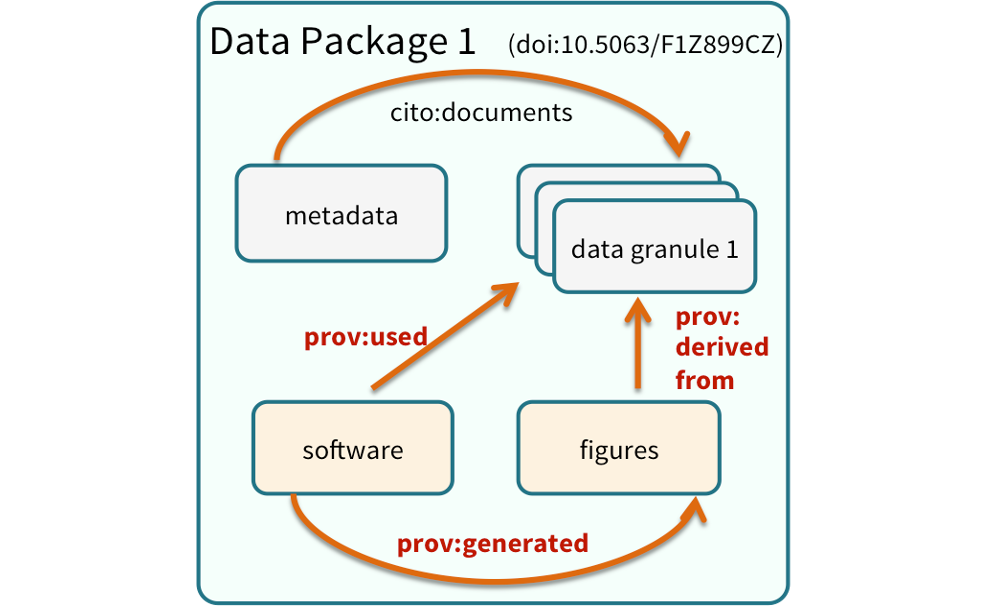

5.4.5 Structure of a data package

Note that the data set above lists a collection of files that are contained within the data set. We define a data package as a scientifically useful collection of data and metadata that a researcher wants to preserve. Sometimes a data package represents all of the data from a particular experiment, while at other times it might be all of the data from a grant, or on a topic, or associated with a paper. Whatever the extent, we define a data package as having one or more data files, software files, and other scientific products such as graphs and images, all tied together with a descriptive metadata document.


These data repositories all assign a unique identifier to every version of every data file, similar to how each source code commit gets its own identifier in GitHub. Those identifiers usually take one of two forms. A DOI (Digital Object Identifier) is often assigned to the metadata and becomes the publicly citable identifier for the package. Each of the other files gets an internal identifier, often a UUID that is globally unique. In the example above, the package can be cited with the DOI doi:10.5063/F1F18WN4.
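Because DOIs are designed to be resolved through the public doi.org resolver, a citable identifier like the one above can be turned into a web link. A minimal sketch in R, assuming the `doi:` prefix form shown above (the helper name `doi_to_url` is our own, hypothetical choice):

```r
# Citable package identifier from the example above
doi <- "doi:10.5063/F1F18WN4"

# Hypothetical helper: strip the "doi:" prefix and prepend the DOI resolver
doi_to_url <- function(id) paste0("https://doi.org/", sub("^doi:", "", id))

doi_to_url(doi)
```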

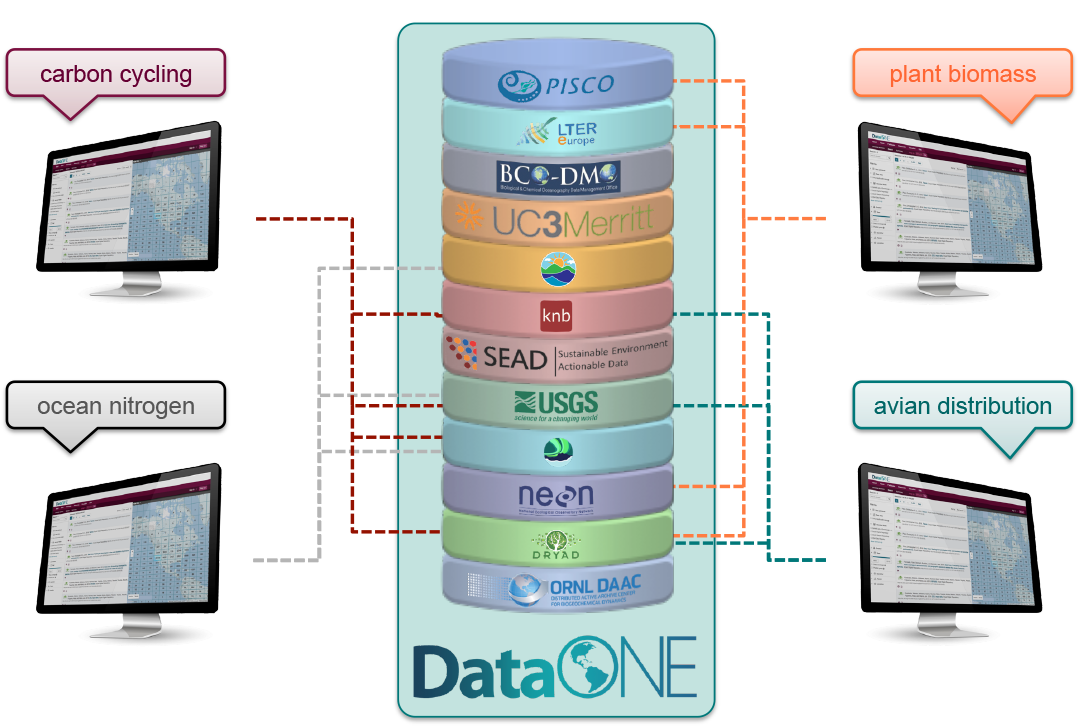

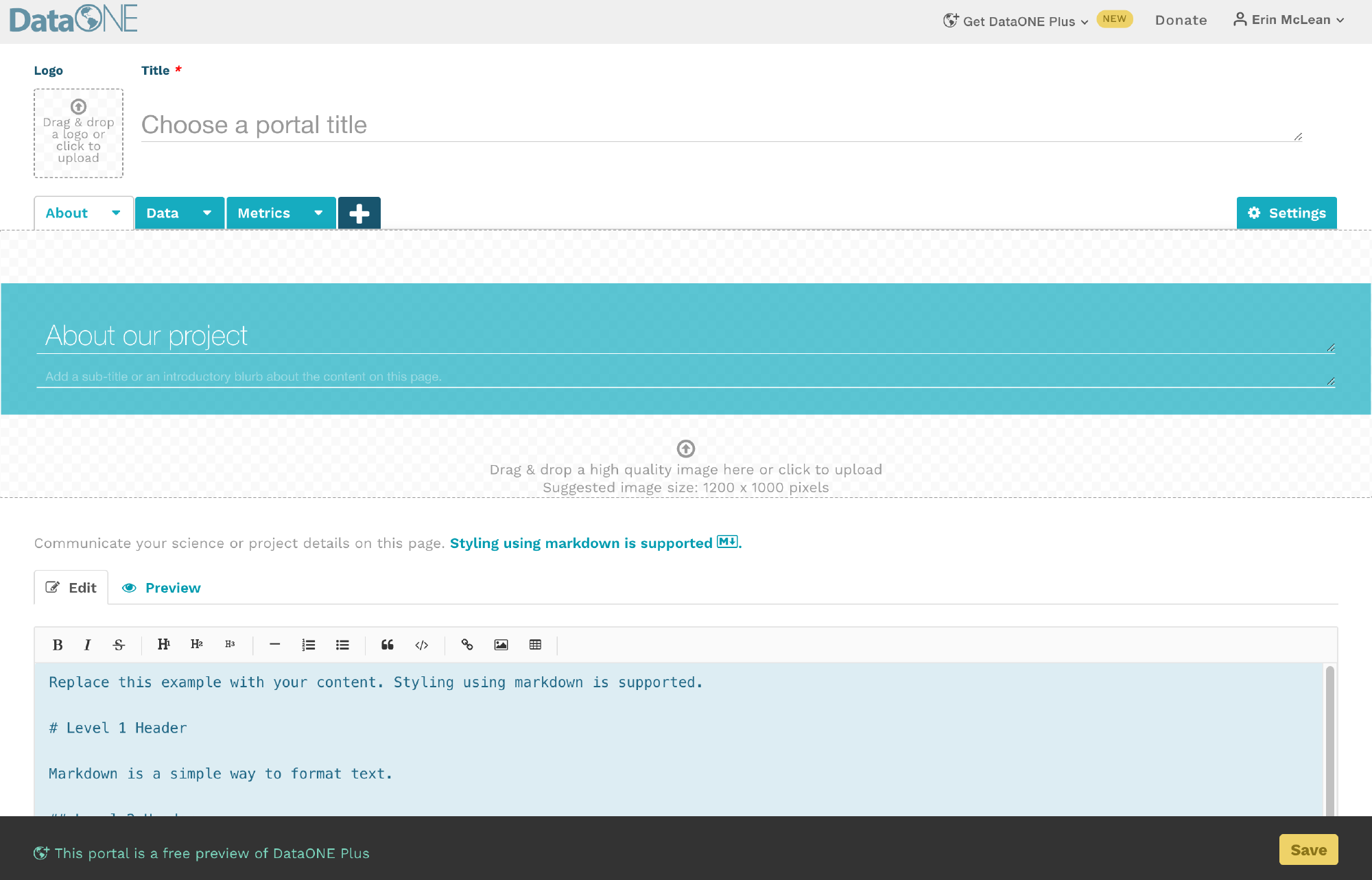

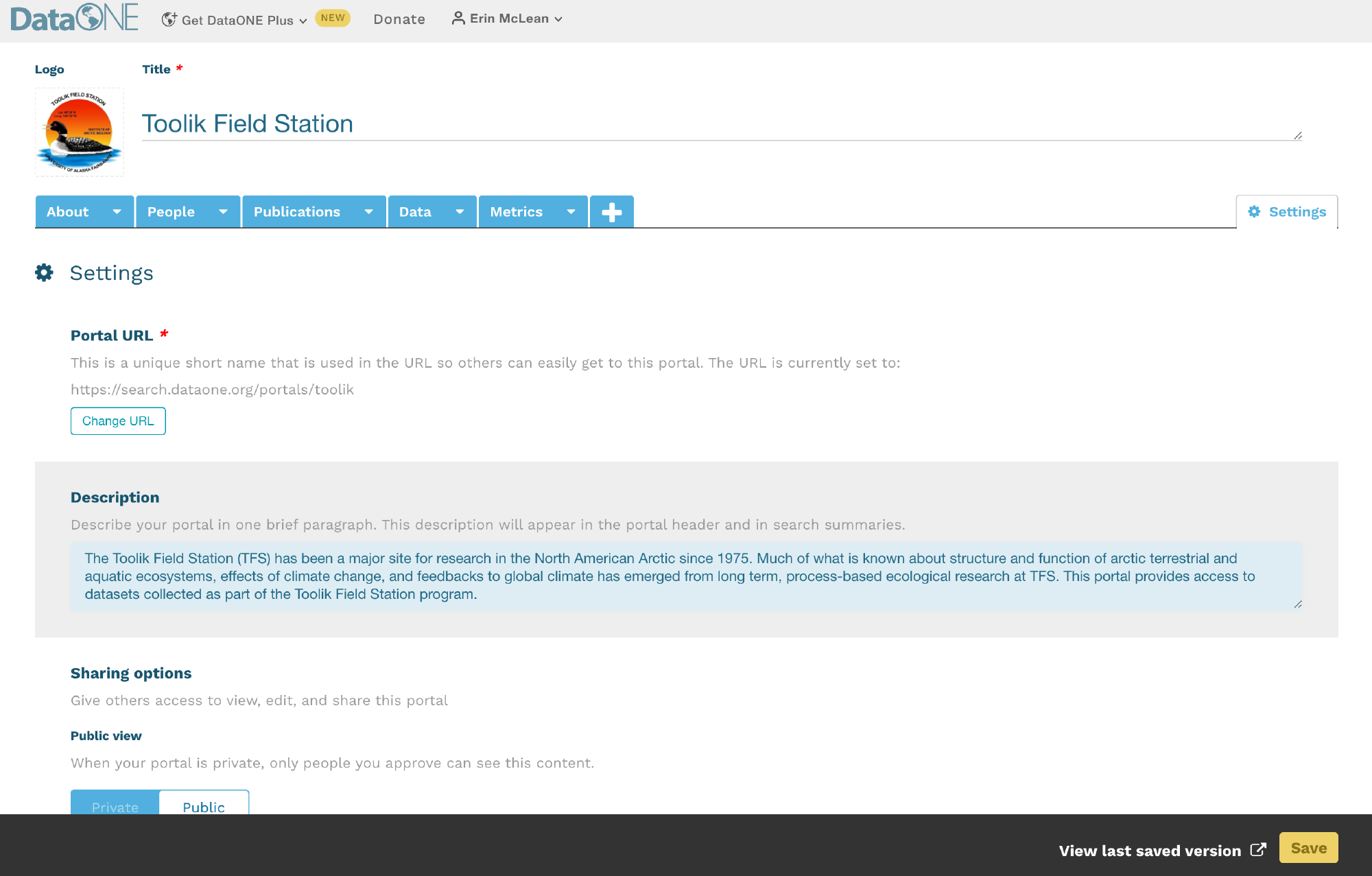

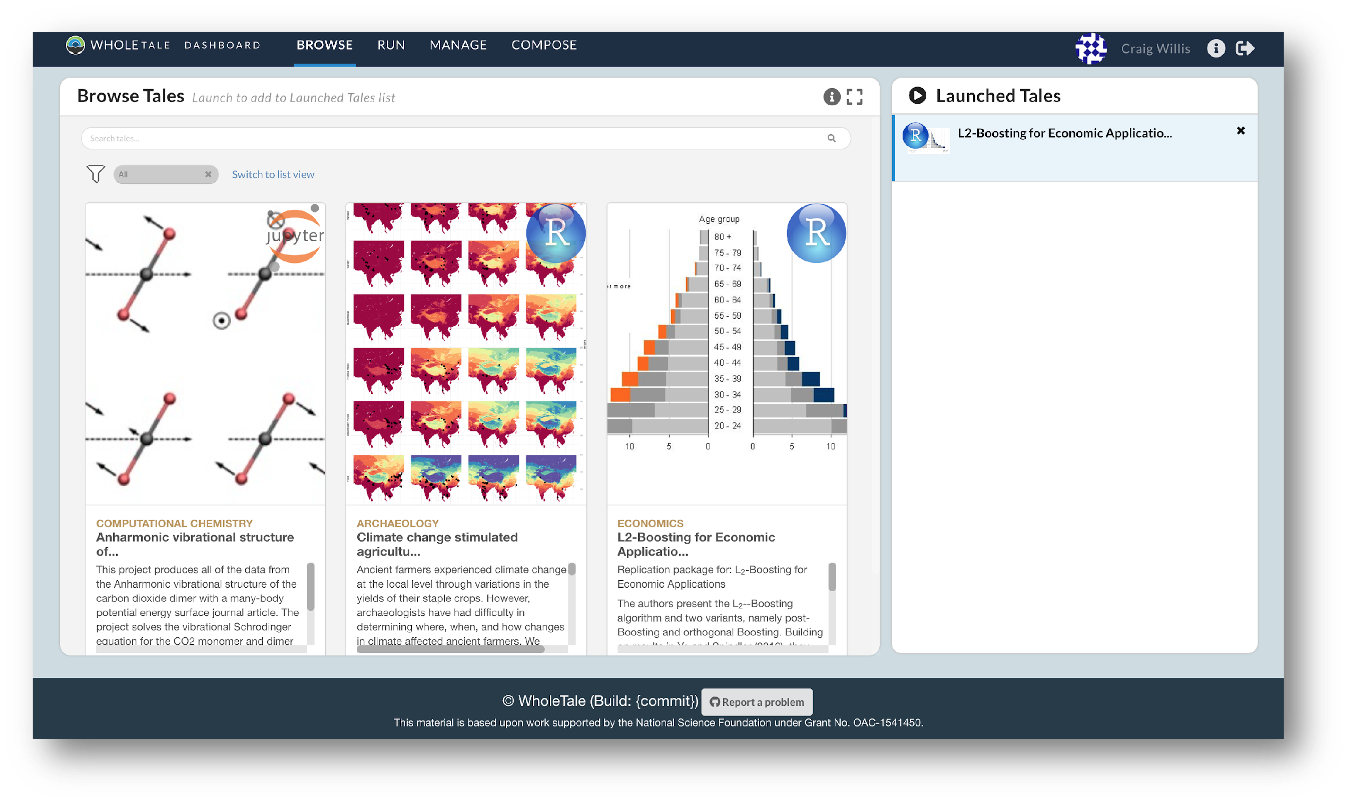

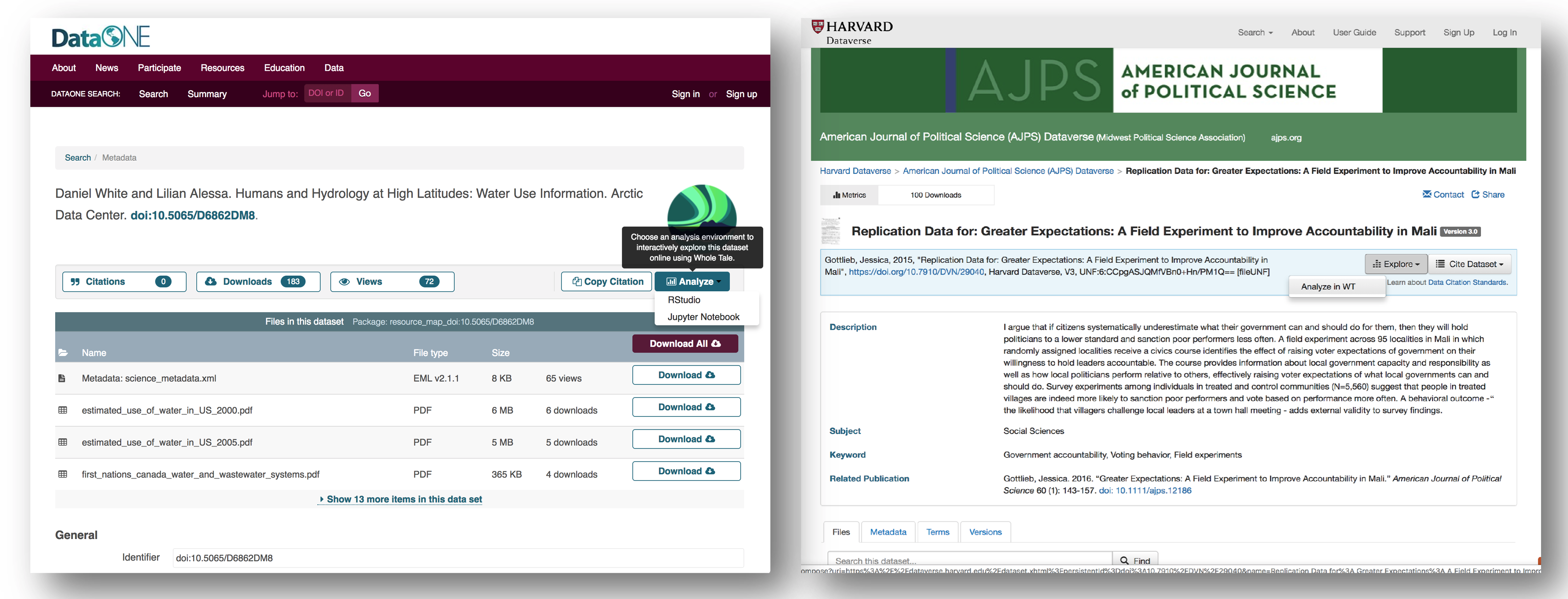

5.4.6 DataONE Federation

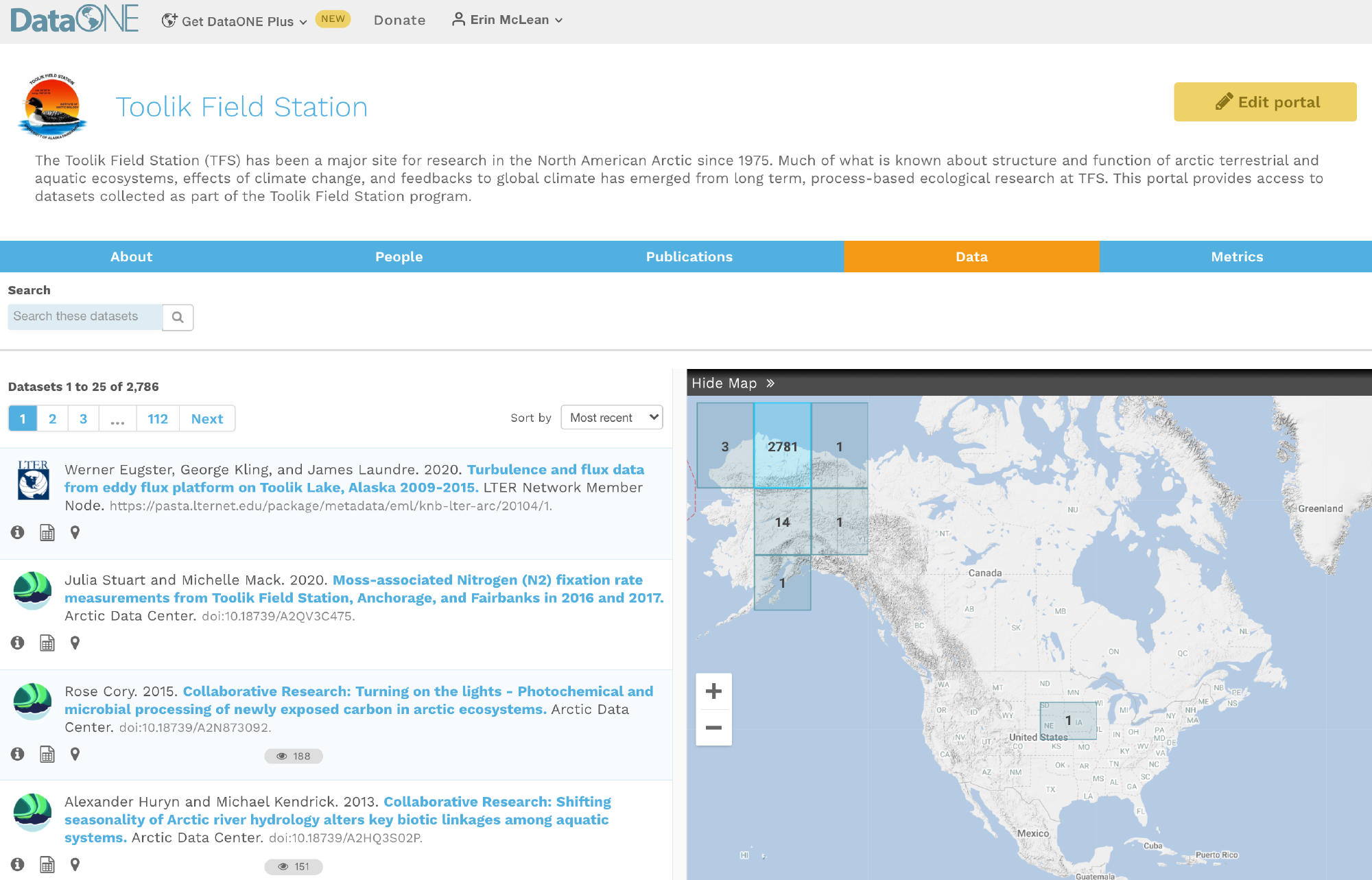

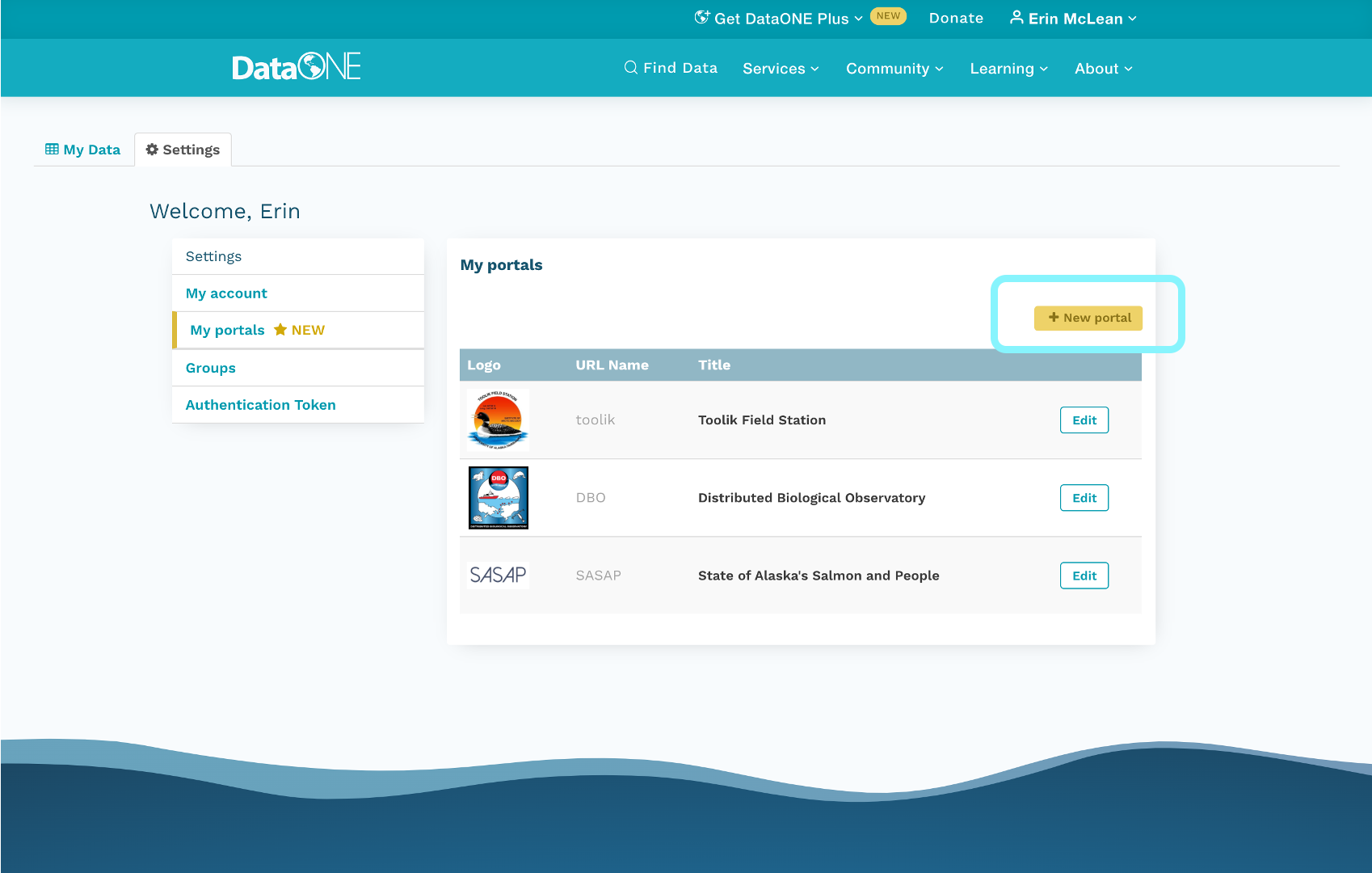

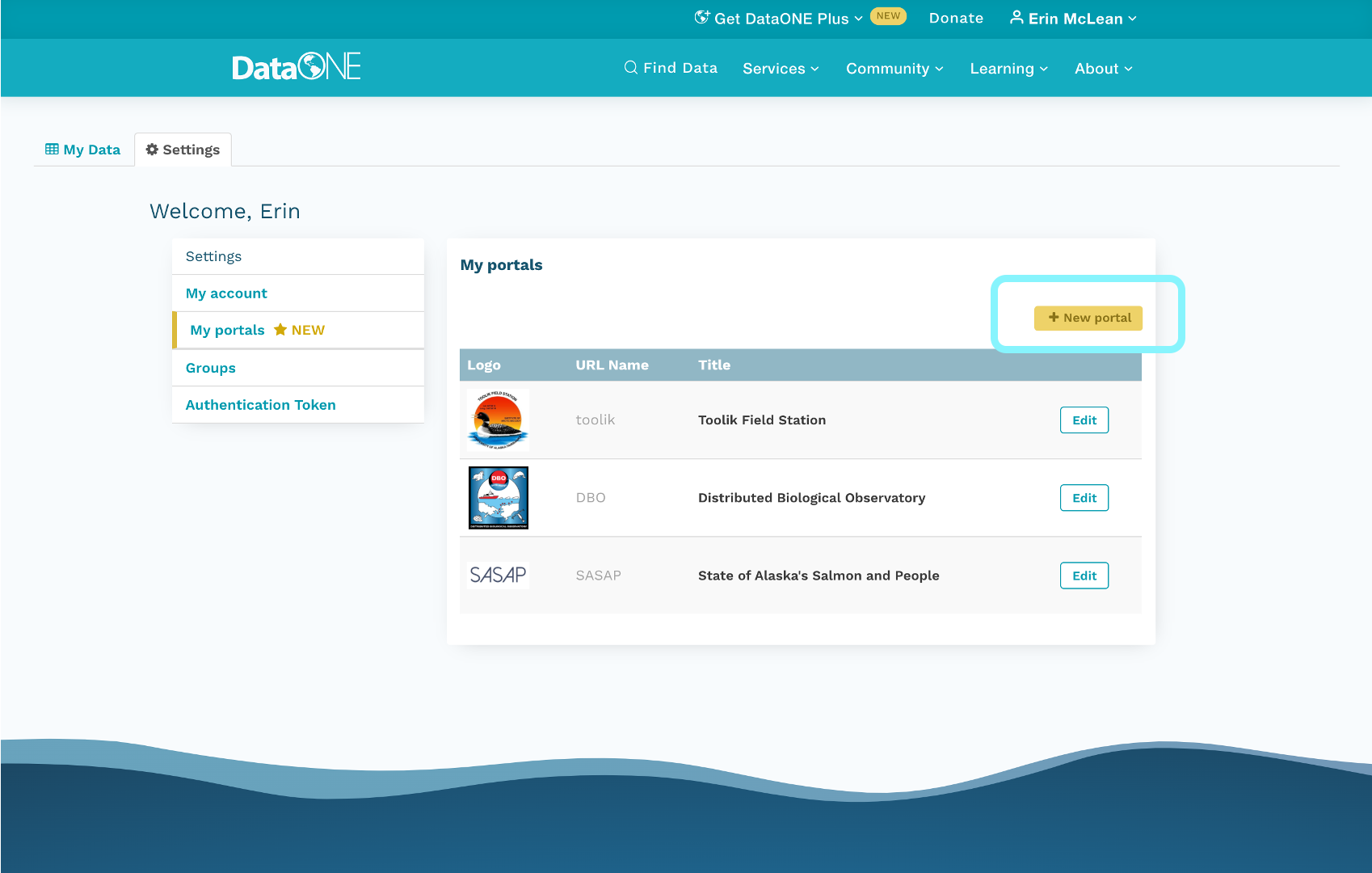

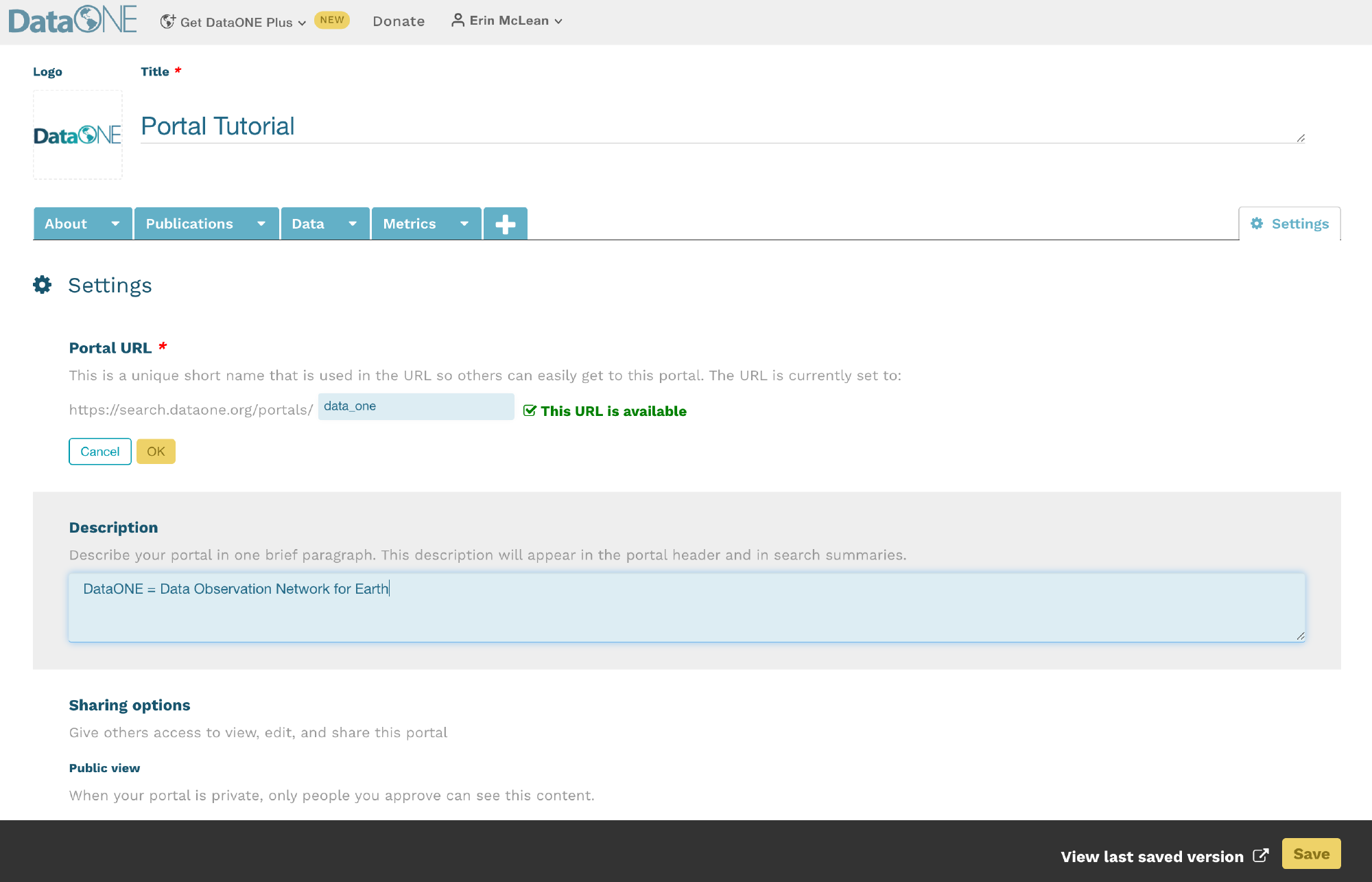

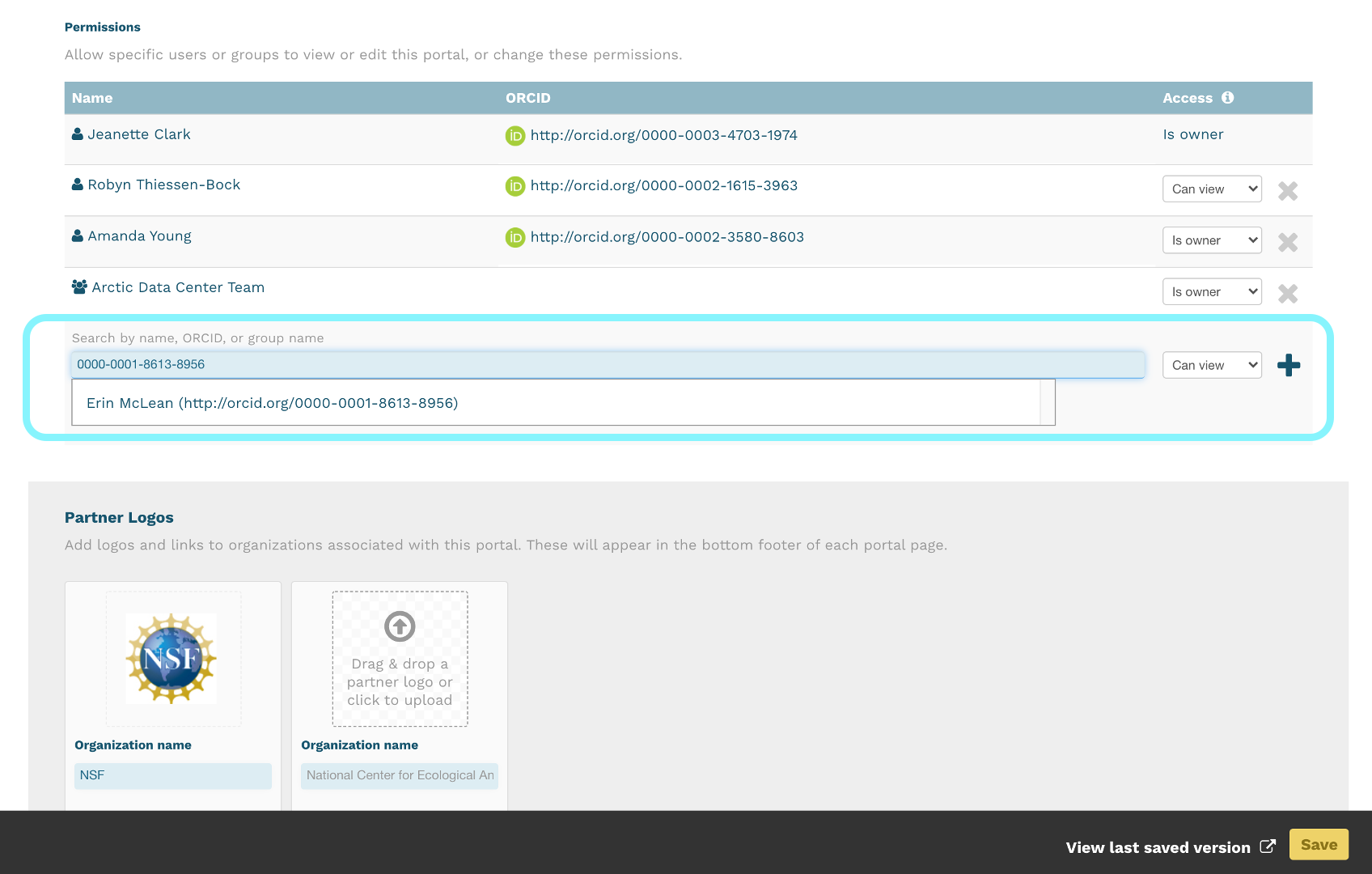

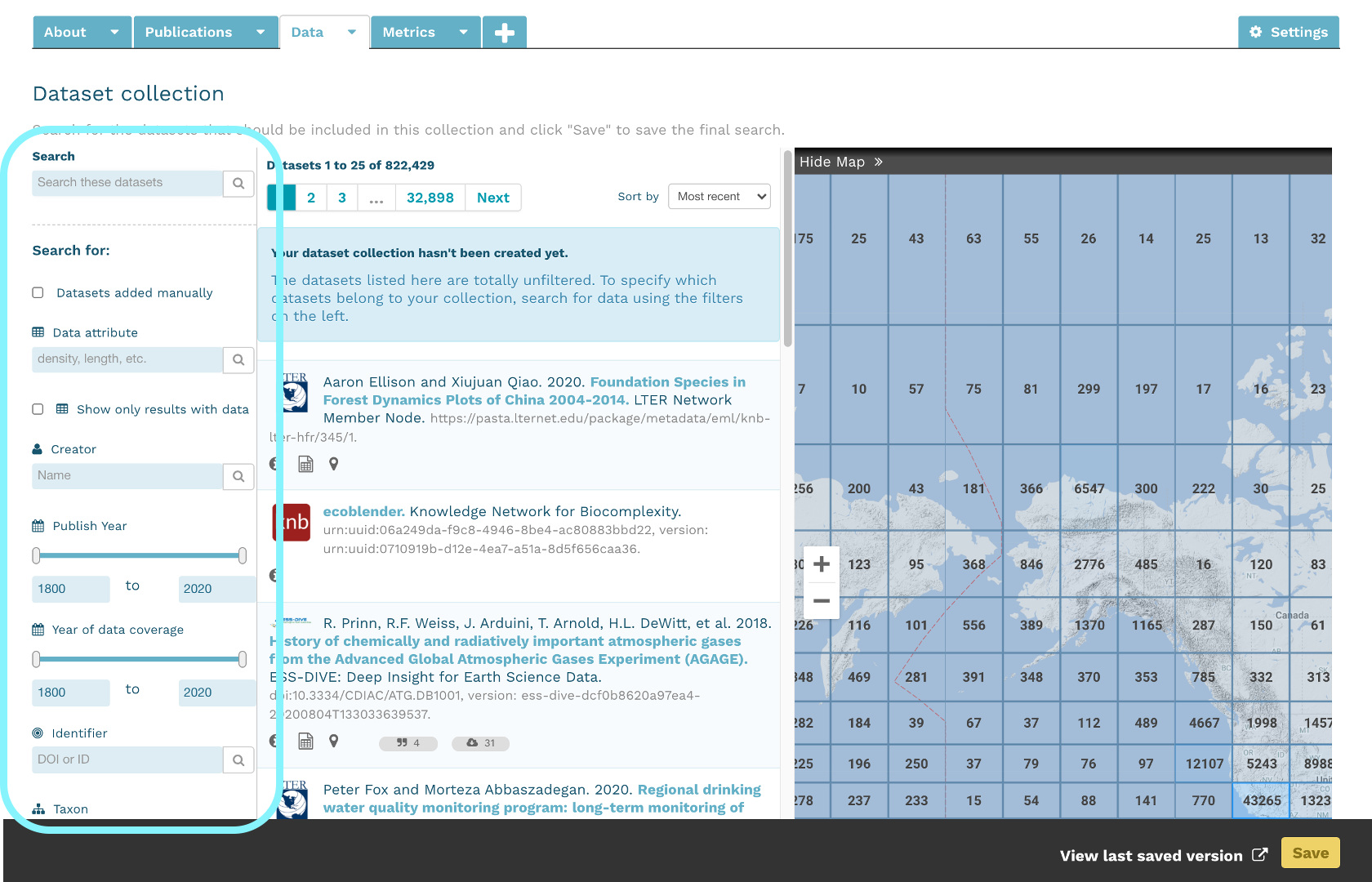

DataONE is a federation of dozens of data repositories that work together to make their systems interoperable and to provide a single unified search system that spans the repositories. DataONE aims to make it simpler for researchers to publish data to one of its member repositories, and then to discover and download that data for reuse in synthetic analyses.

DataONE can be searched on the web (https://search.dataone.org/), which effectively allows a single search to find data from the dozens of members of DataONE, rather than visiting each of the (currently 43) repositories one at a time.
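The same kind of search can in principle be scripted. The sketch below only builds a query URL. It assumes DataONE's coordinating-node Solr endpoint (`https://cn.dataone.org/cn/v2/query/solr/`) and the index fields `title`, `identifier`, and `formatType`, so check the current DataONE API documentation before relying on it. Fetching is left as a comment because it needs a network connection and, for parsing, the jsonlite package.

```r
# Assumed DataONE Solr query endpoint (coordinating node, API v2)
base_url <- "https://cn.dataone.org/cn/v2/query/solr/"

# Standard Solr parameters: query, fields to return, number of rows, response format
params <- c(
  q    = "title:salmon AND formatType:METADATA",
  fl   = "identifier,title",
  rows = "10",
  wt   = "json"
)

# URL-encode each value (reserved = TRUE also encodes ':' and ',')
encoded <- vapply(params, URLencode, character(1), reserved = TRUE)
query_url <- paste0(base_url, "?",
                    paste(names(params), encoded, sep = "=", collapse = "&"))
query_url

# With network access: results <- jsonlite::fromJSON(query_url)
```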

Publishing data to NEON

Each data repository tends to have its own mechanism for submitting data and providing metadata. At NEON, you will be required to publish data to the NEON Data Portal or to an external data repository for specialized data (see externally hosted data).

Full information on data processing, data management and product release can be found in the online NEON Data Management documentation. Below is an overview of the data processing pipeline for observational data.

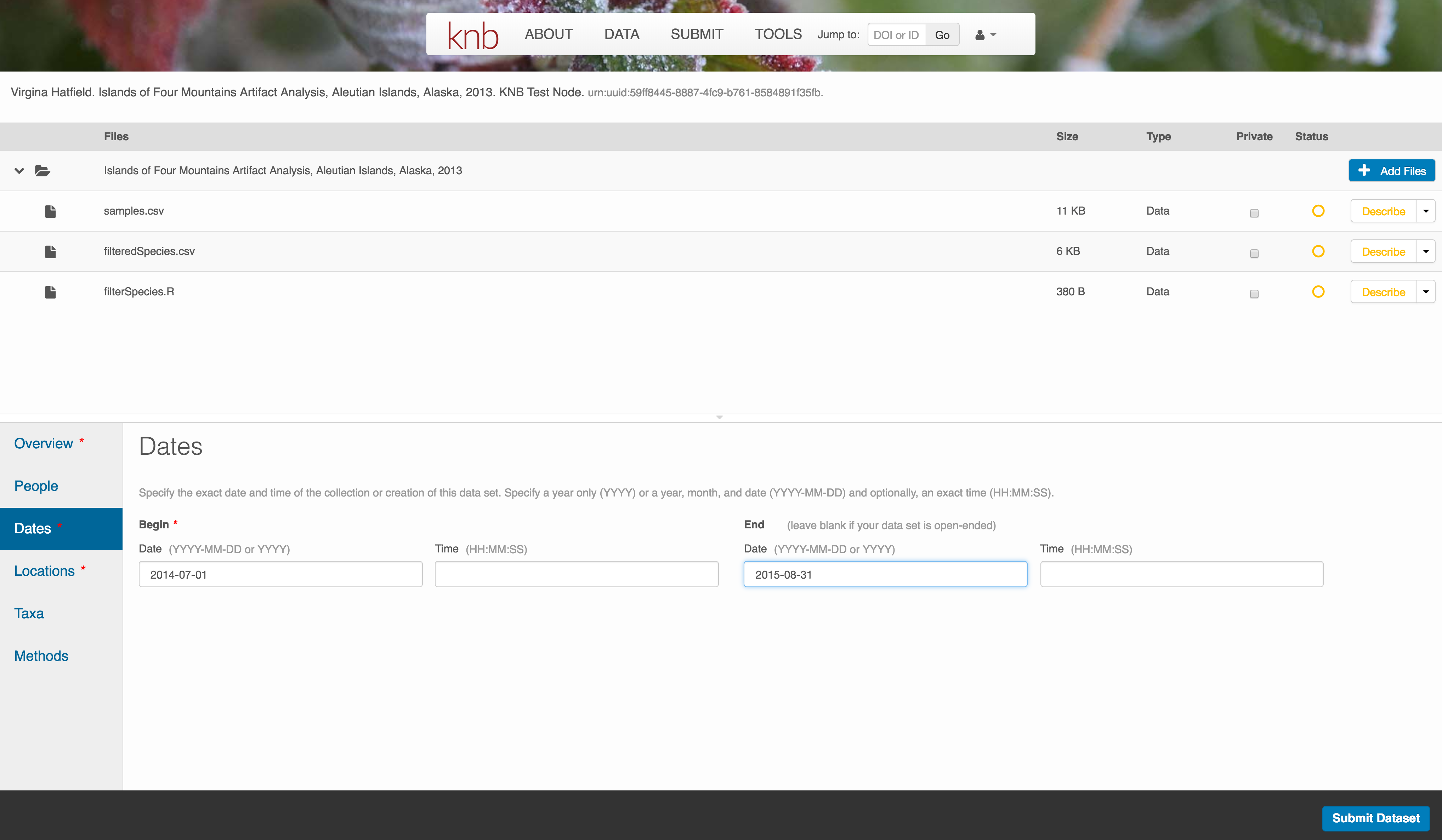

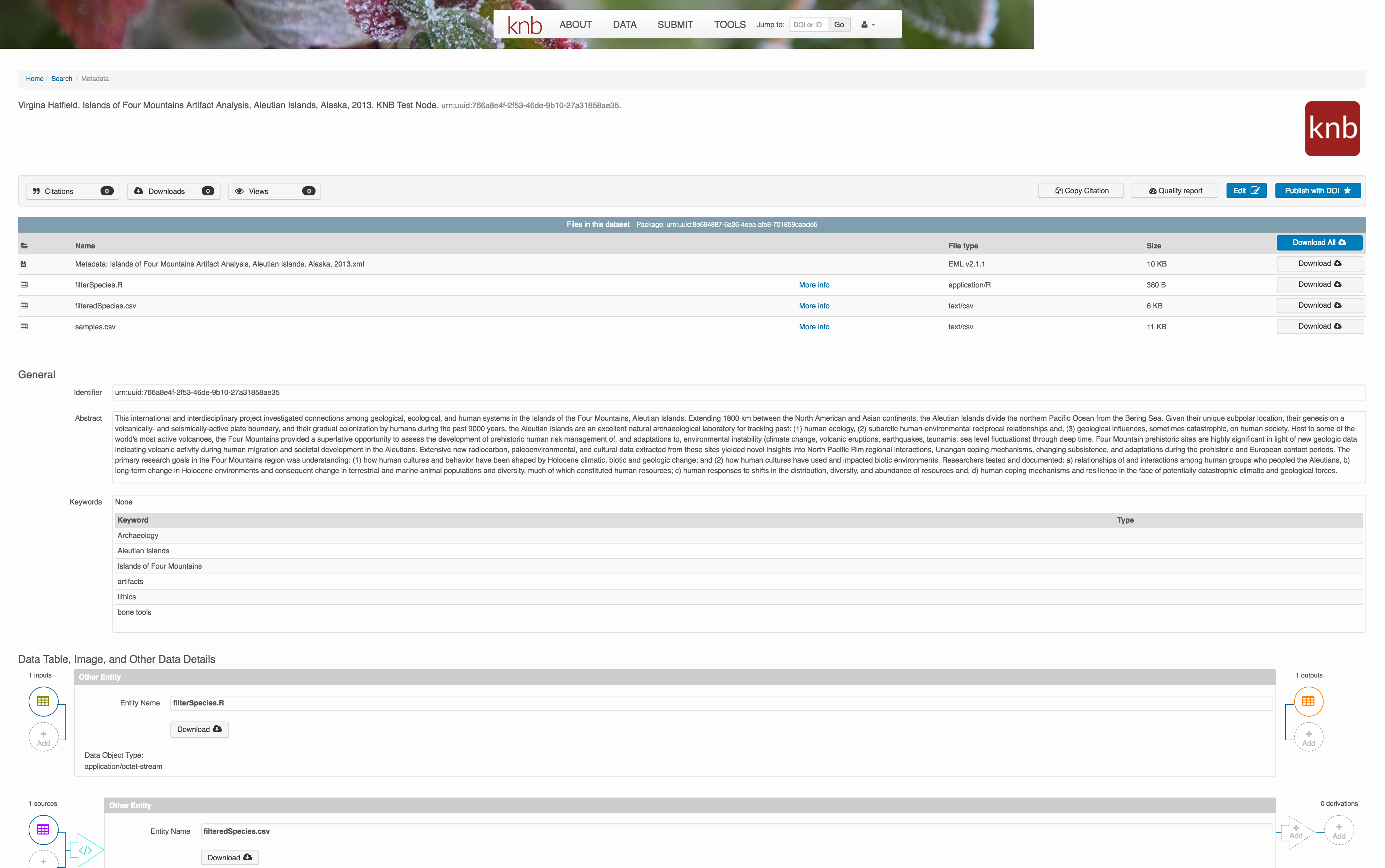

Web based publishing at the KNB

There’s a broad range of data repositories designed to meet different or specific needs of the researcher community. They may be domain specific, designed to support specialized data, or directly associated with an organization or agency. The Knowledge Network for Biocomplexity is an open data repository hosted by NCEAS that preserves a broad cross section of ecological data. The KNB provides simple web based data package editing and submission forms and the OPTIONAL unit below provides an example of the data submission process for another ecological data repository outside the NEON Data Portal.

Download the data to be used for the tutorial

I’ve already uploaded the test data package, and so you can access the data here:

Grab both CSV files, and the R script, and store them in a convenient folder.

Login via ORCID

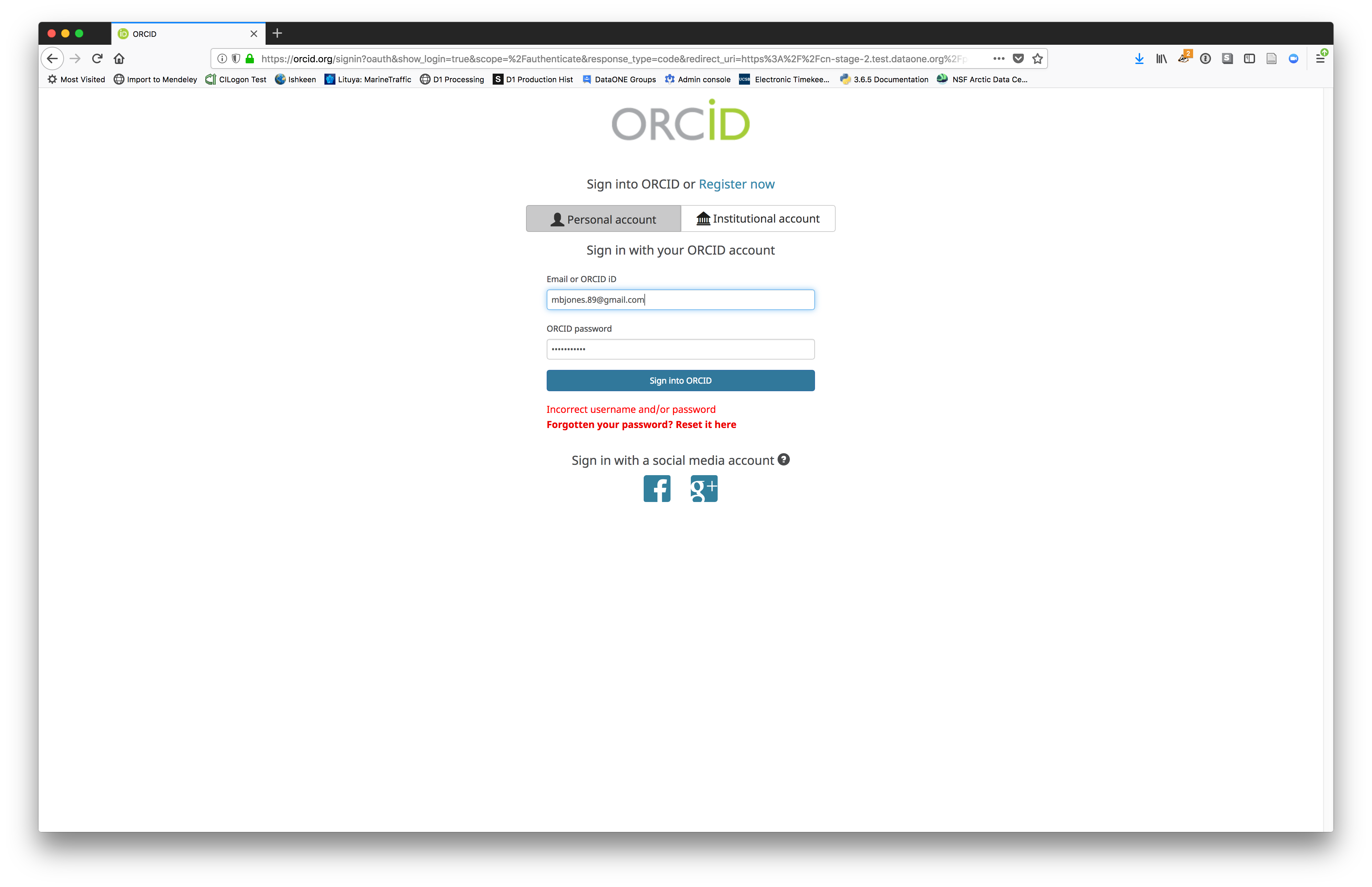

We will walk through web submission on https://demo.nceas.ucsb.edu, and start by logging in with an ORCID account. ORCID provides a common account for sharing scholarly data, so if you don’t have one, you can create one when you are redirected to ORCID from the Sign In button.

When you sign in, you will be redirected to orcid.org, where you can either provide your existing ORCID credentials, or create a new account. ORCID provides multiple ways to login, including using your email address, institutional logins from many universities, and logins from social media account providers. Choose the one that is best suited to your use as a scholarly record, such as your university or agency login.

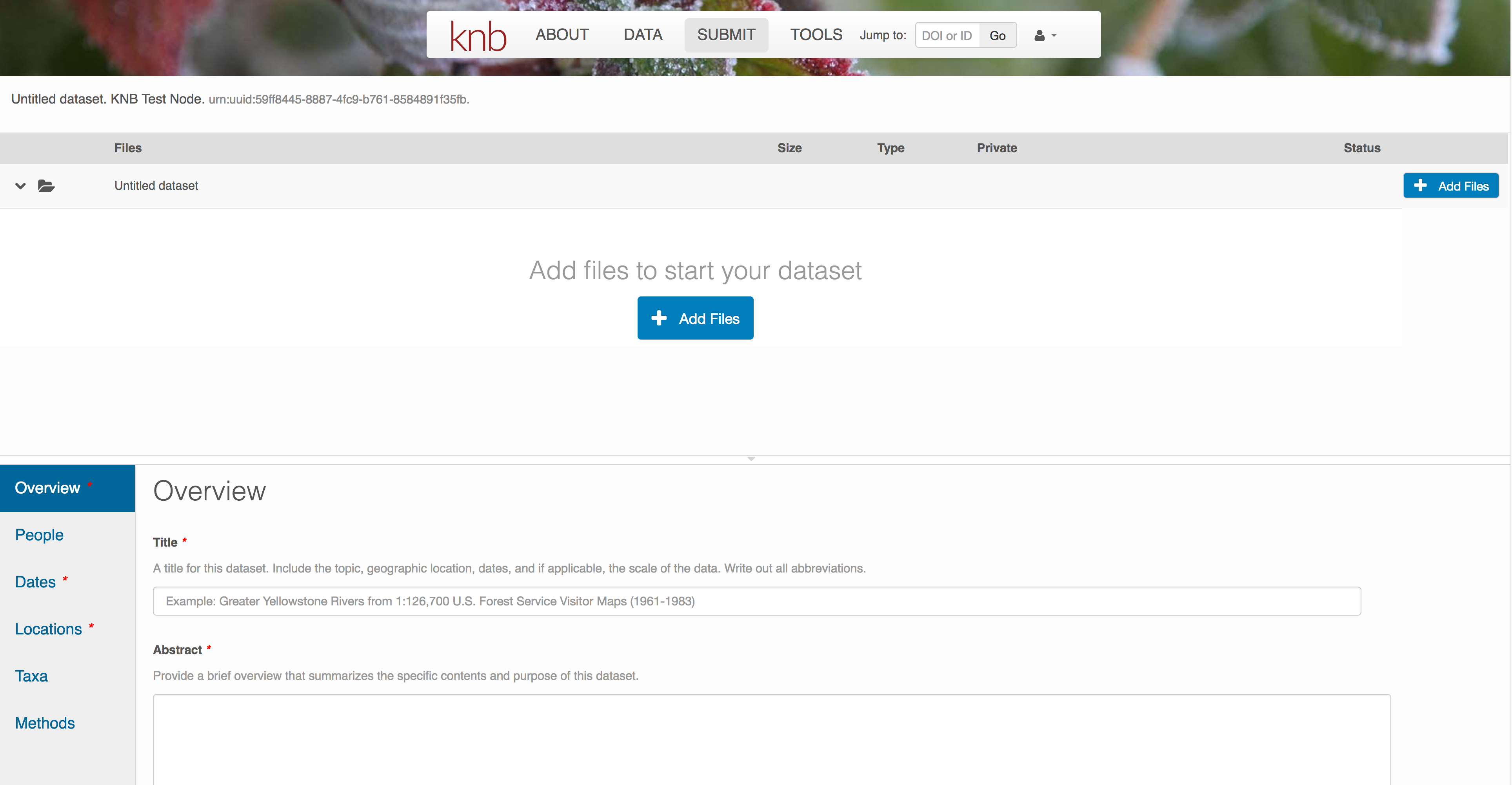

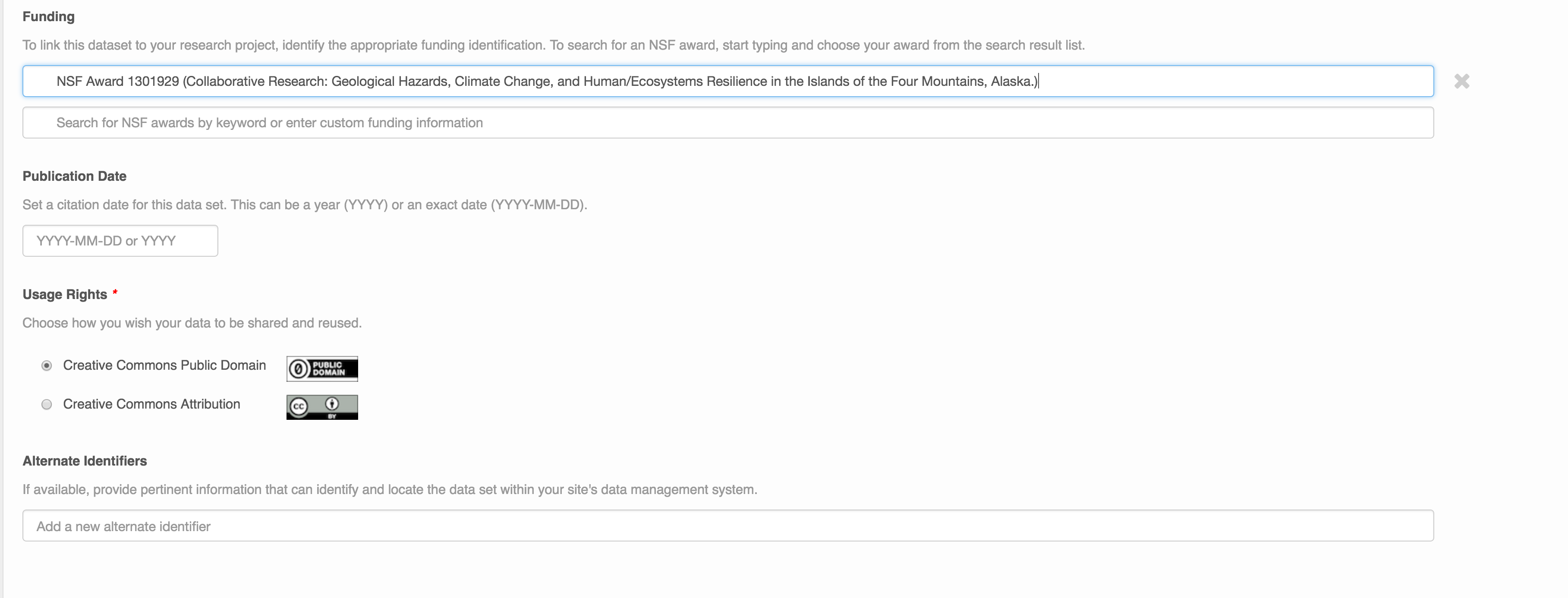

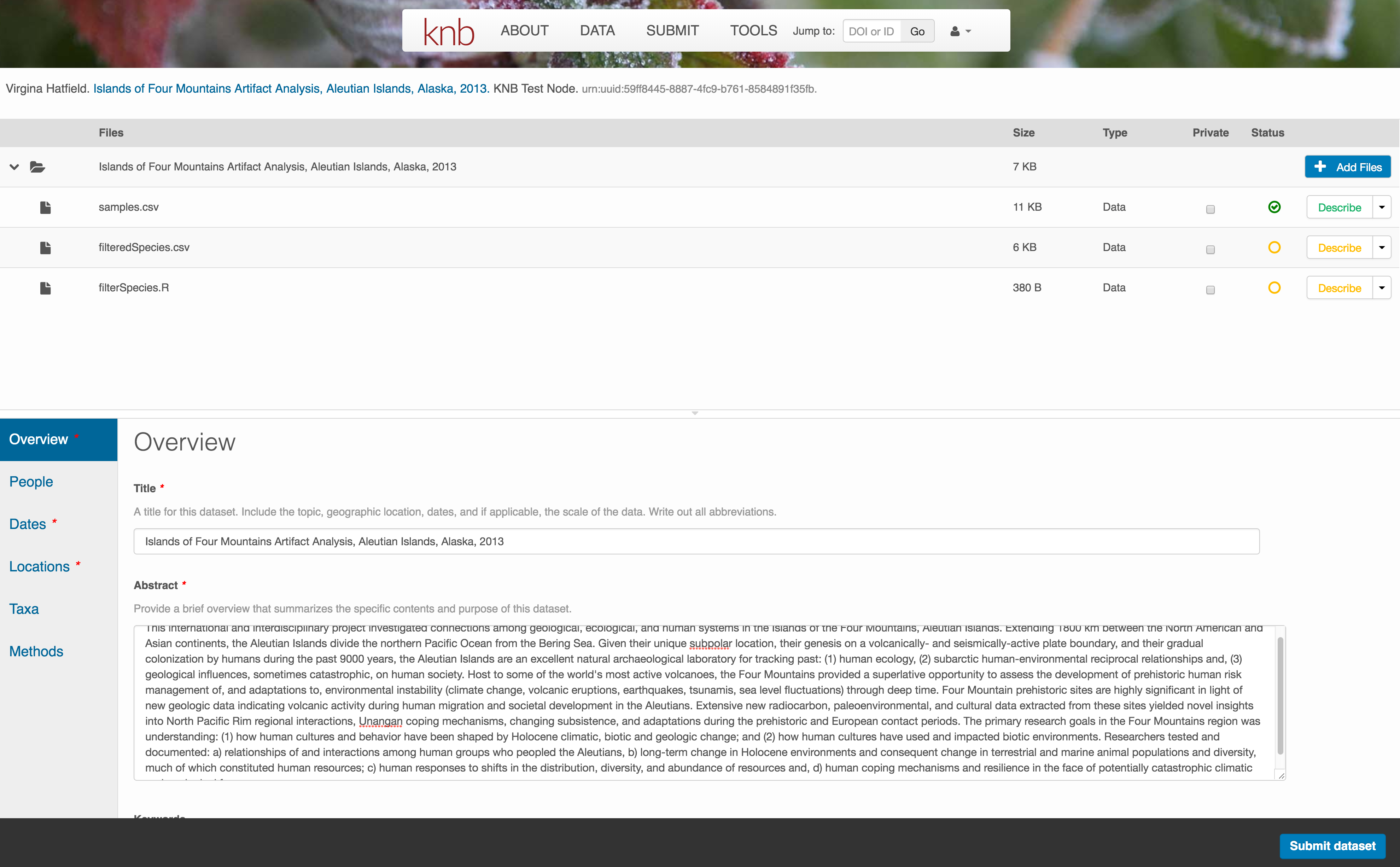

Create and submit the data set

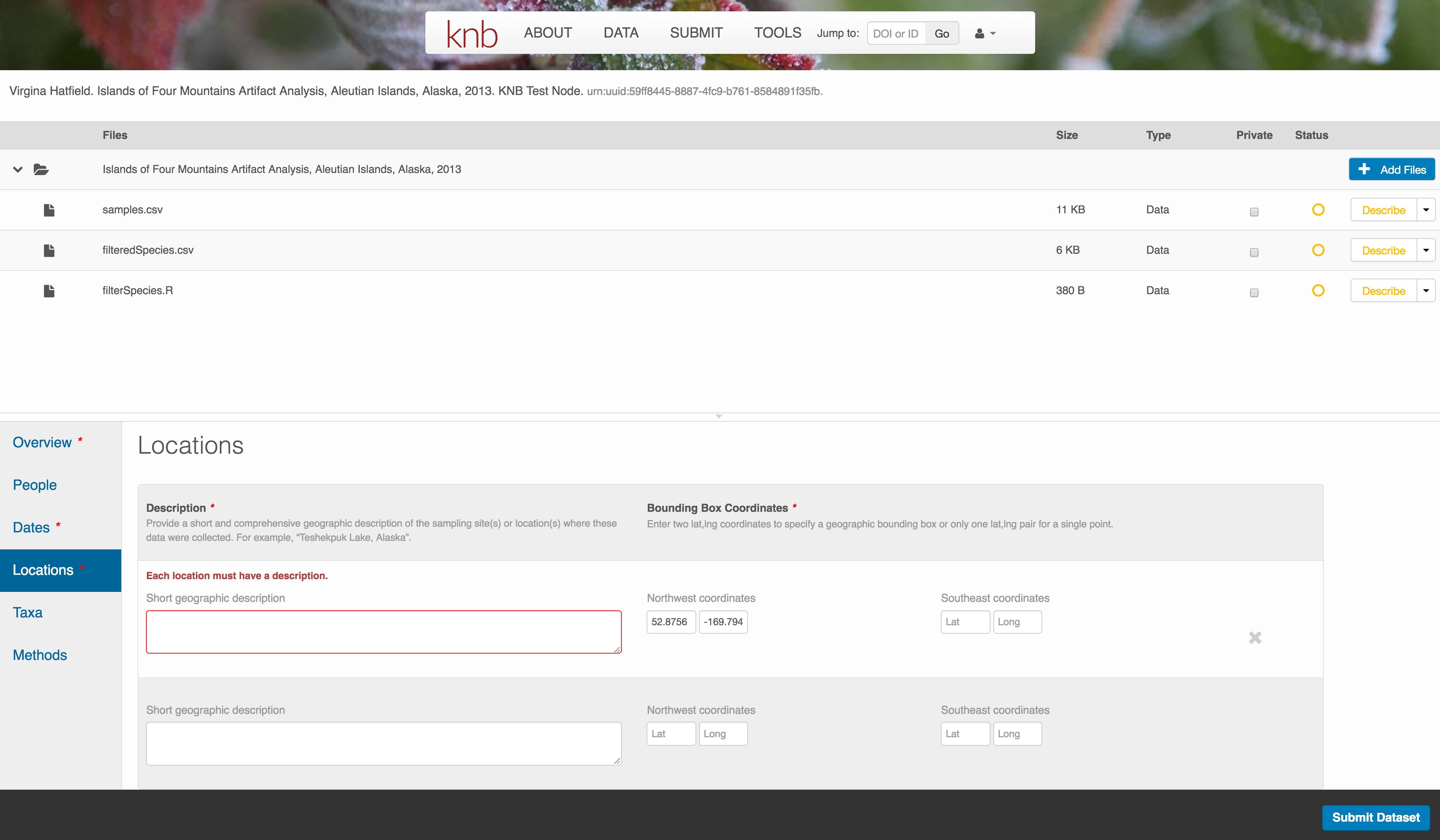

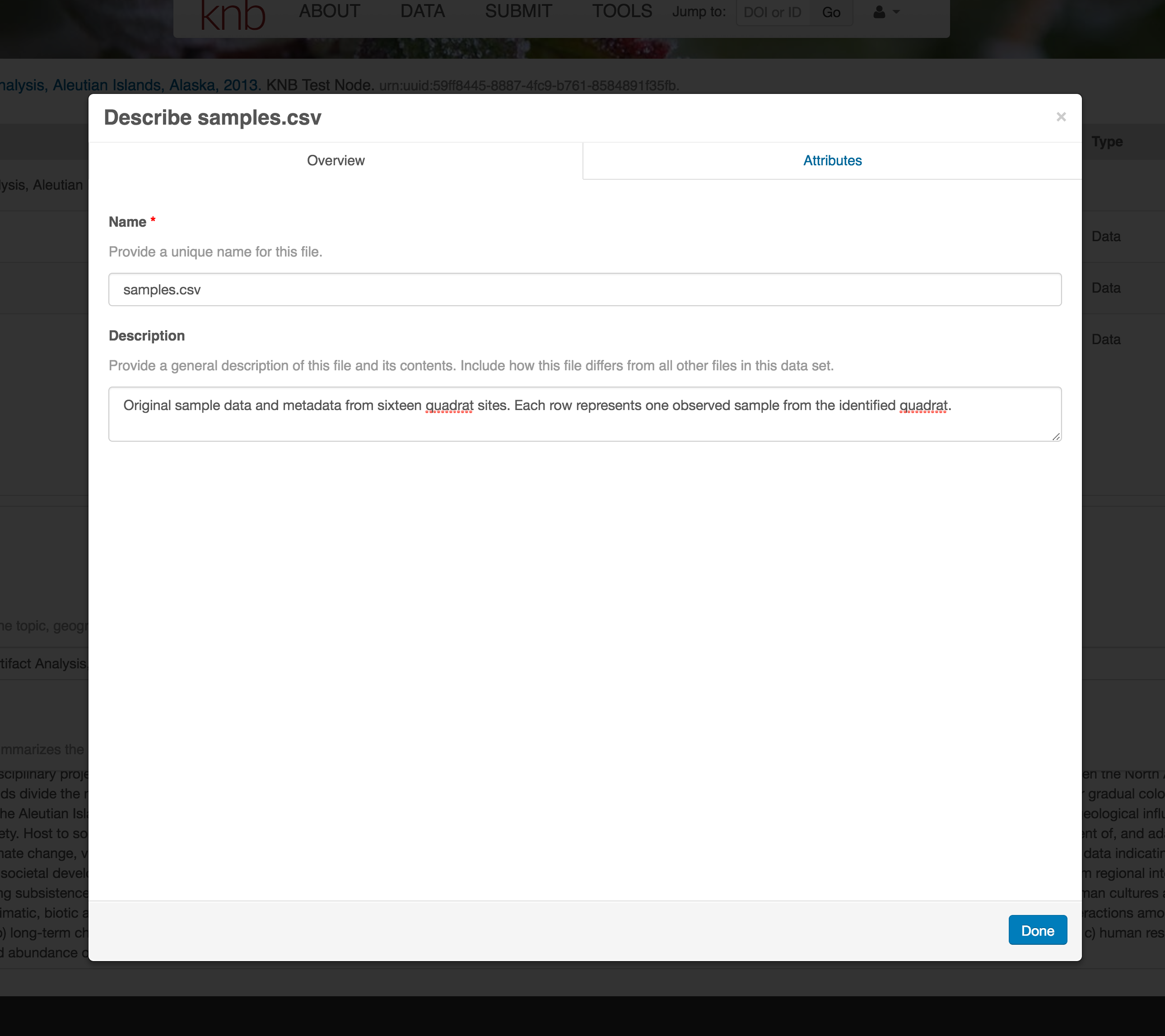

After signing in, you can access the data submission form using the Submit button. Once on the form, upload your data files and follow the prompts to provide the required metadata. Required sections are listed with a red asterisk.

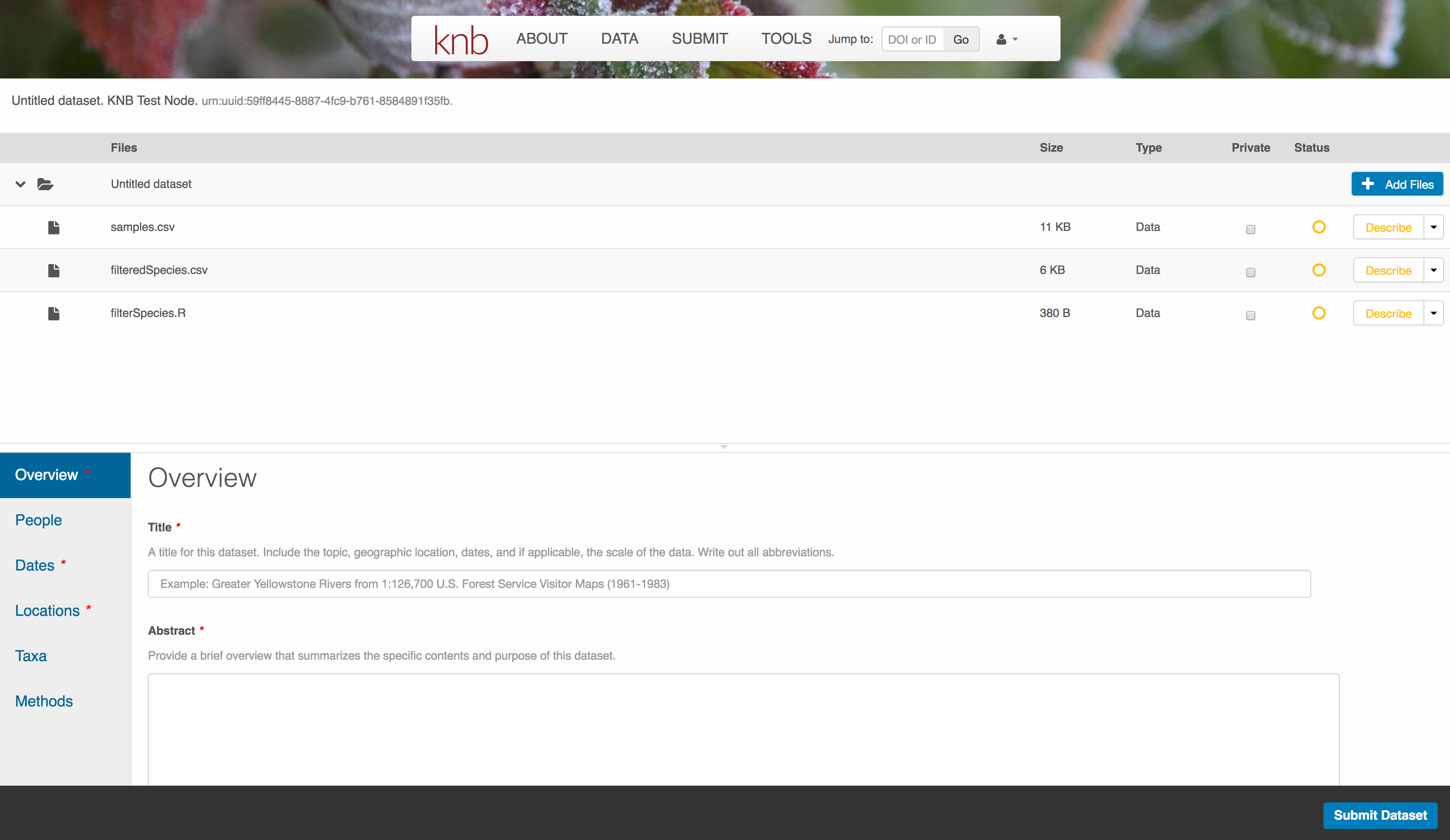

Click Add Files to choose the data files for your package

You can select multiple files at a time to efficiently upload many files.

The files will upload showing a progress indicator. You can continue editing metadata while they upload.

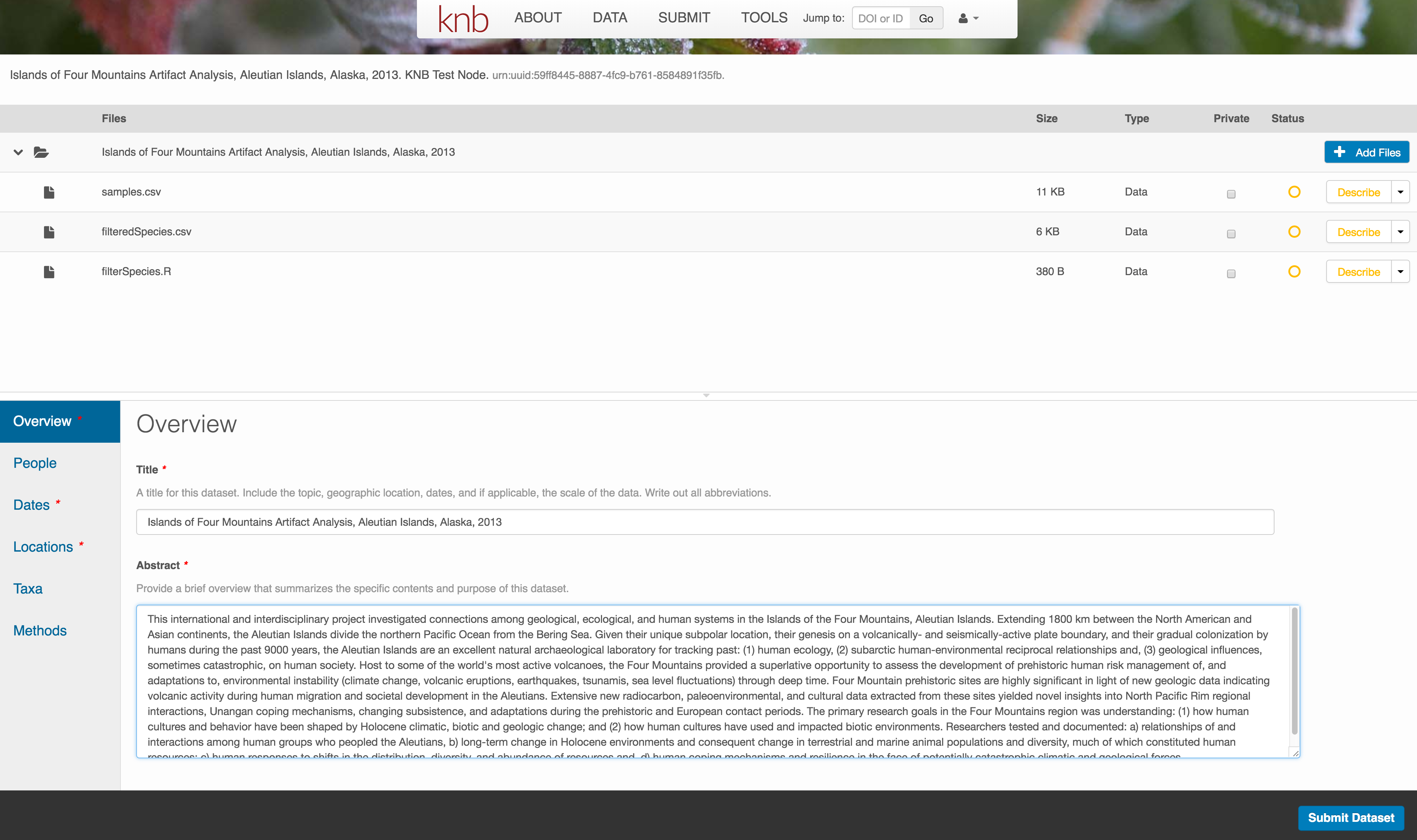

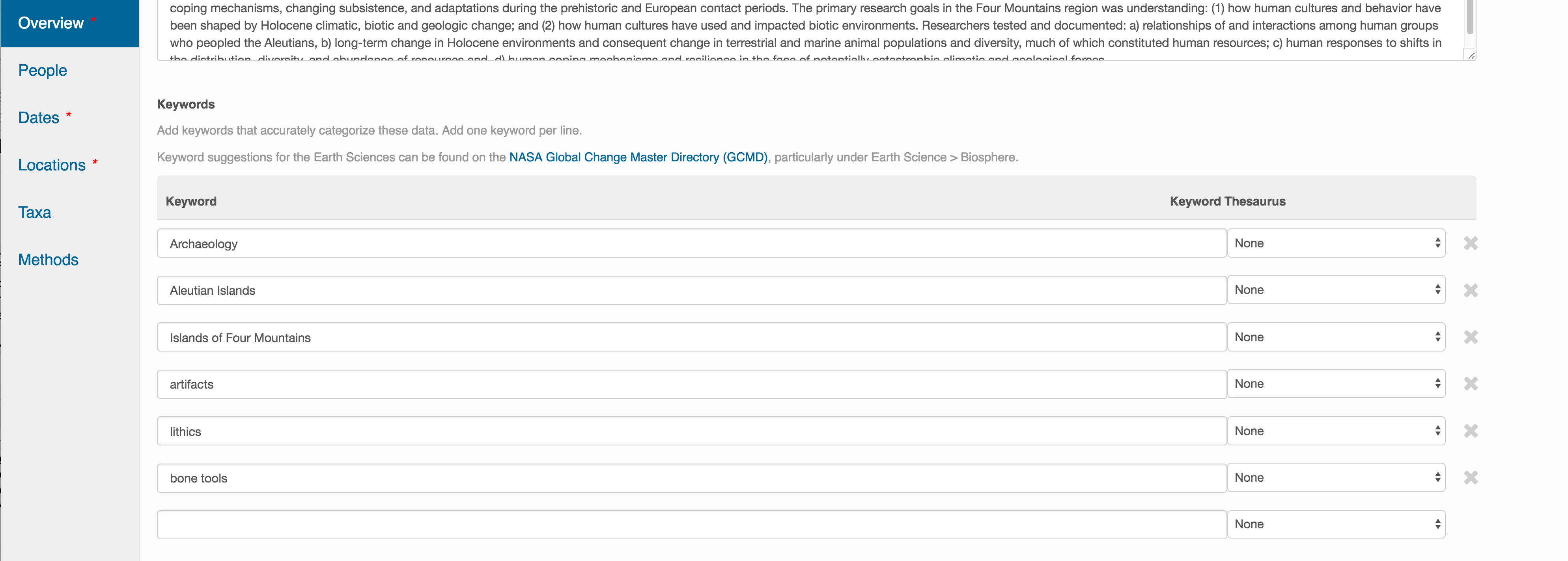

Enter Overview information

This includes a descriptive title, abstract, and keywords.

The title is the first way a potential user will get information about your dataset. It should be descriptive but succinct, lack acronyms, and include some indication of the temporal and geospatial coverage of the data.

The abstract should be sufficiently descriptive for a general scientific audience to understand your dataset at a high level. It should provide an overview of the scientific context/project/hypotheses, how this data package fits into the larger context, a synopsis of the experimental or sampling design, and a summary of the data contents.

Keywords, while not required, can help increase the searchability of your dataset, particularly if they come from a semantically defined keyword thesaurus.

Optionally, you can also enter funding information, including a funding number, which can help link multiple datasets collected under the same grant.

Selecting a distribution license (either CC-0 or CC-BY) is required.

People Information

Information about the people associated with the dataset is essential to provide credit through citation and to help people understand who made contributions to the product. Enter information for the following people:

- Creators - all the people who should be in the citation for the dataset

- Contacts - one is required, but defaults to the first Creator if omitted

- Principal Investigators

- any others who are relevant

For each, please strive to provide their ORCID identifier, which helps link this dataset to their other scholarly works.

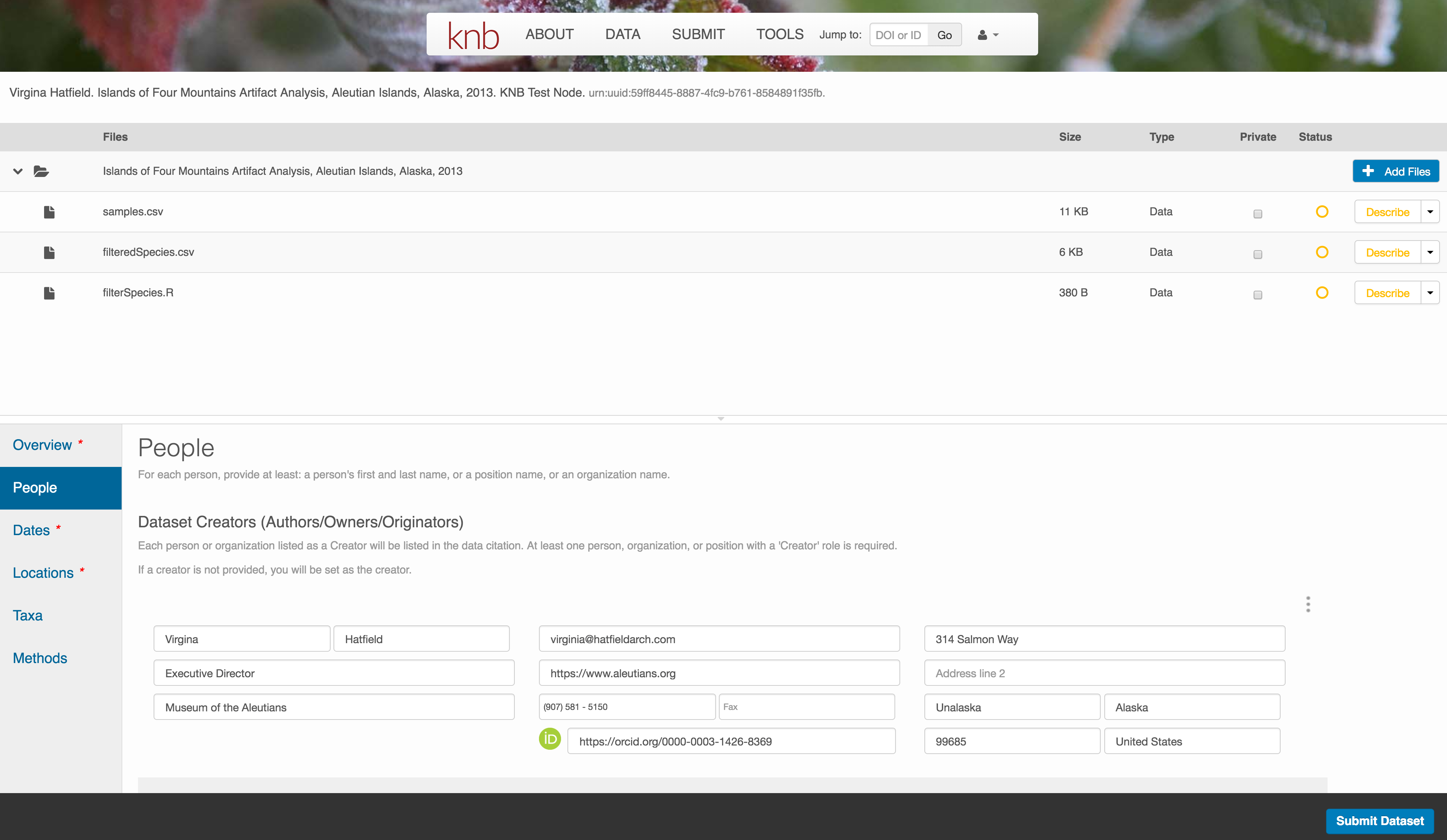

5.4.6.0.1 Temporal Information

Add the temporal coverage of the data, which represents the time period to which data apply.

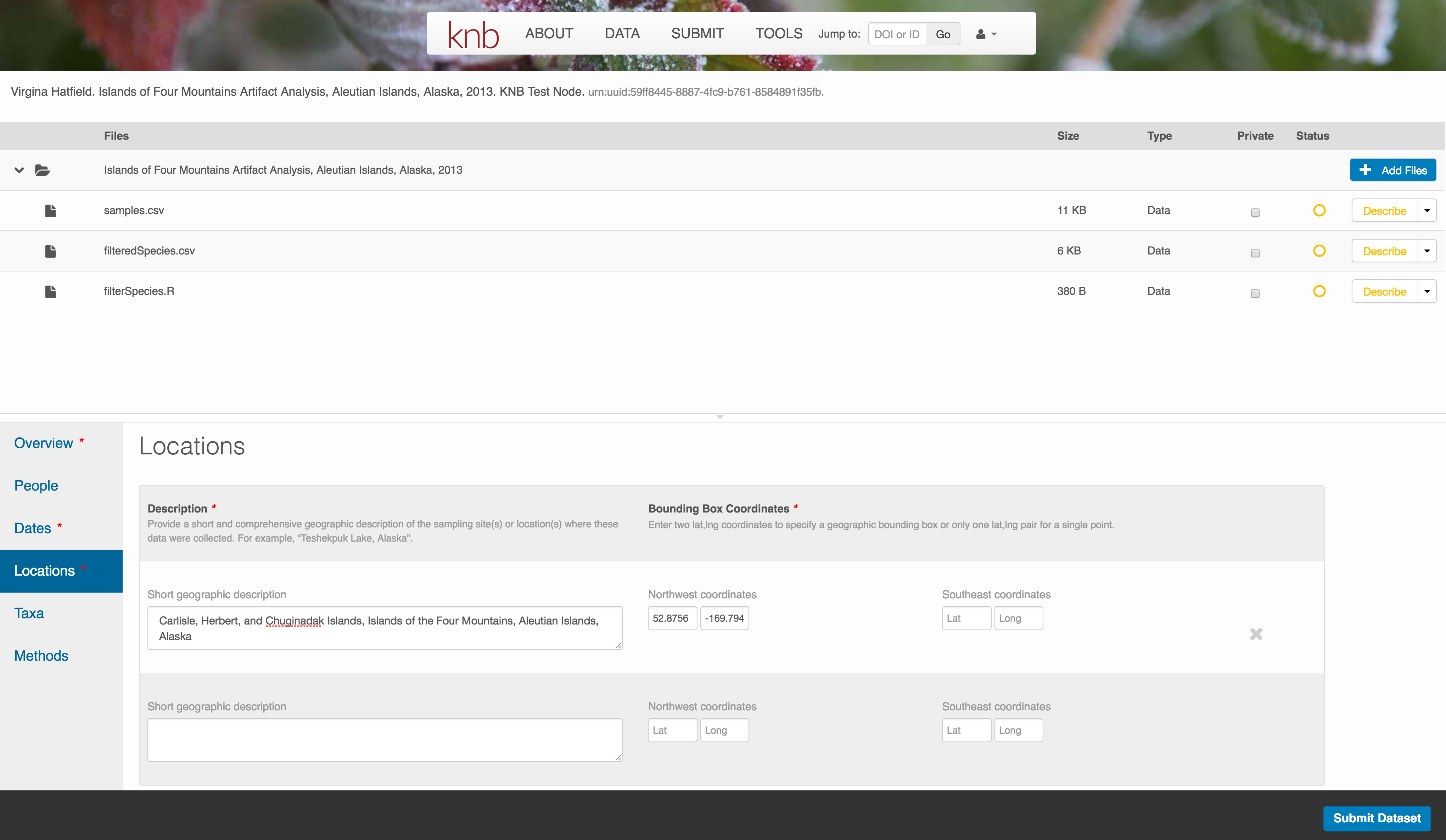

Location Information

The geospatial location at which the data were collected is critical for discovery and interpretation of the data. Coordinates are entered in decimal degrees; be sure to use negative values for West longitudes. The editor allows you to enter multiple locations, which you should do if you had noncontiguous sampling locations. This is particularly important if your sites are separated by large distances, so that a spatial search will be more precise.

Note that, if you miss fields that are required, they will be highlighted in red to draw your attention. In this case, for the description, provide a comma-separated place name, ordered from the local to global. For example:

- Mission Canyon, Santa Barbara, California, USA
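If your field notes record degrees, minutes, and seconds, a small helper can produce the decimal degrees the editor expects, with the sign convention described above. This is a minimal sketch: the function name `dms_to_decimal` and the coordinate values are hypothetical illustrations, not the location of any real site. The last lines build the local-to-global place description from the example.

```r
# Hypothetical helper: degrees/minutes/seconds to decimal degrees.
# West longitudes and South latitudes become negative values.
dms_to_decimal <- function(deg, min = 0, sec = 0, hemisphere = c("N", "S", "E", "W")) {
  hemisphere <- match.arg(hemisphere)
  value <- deg + min / 60 + sec / 3600
  if (hemisphere %in% c("S", "W")) -value else value
}

# Illustrative values only
lat <- dms_to_decimal(34, 27, 0, "N")
lon <- dms_to_decimal(119, 42, 0, "W")
c(lat = lat, lon = lon)

# Place-name description ordered from local to global
place <- paste(c("Mission Canyon", "Santa Barbara", "California", "USA"), collapse = ", ")
place
```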

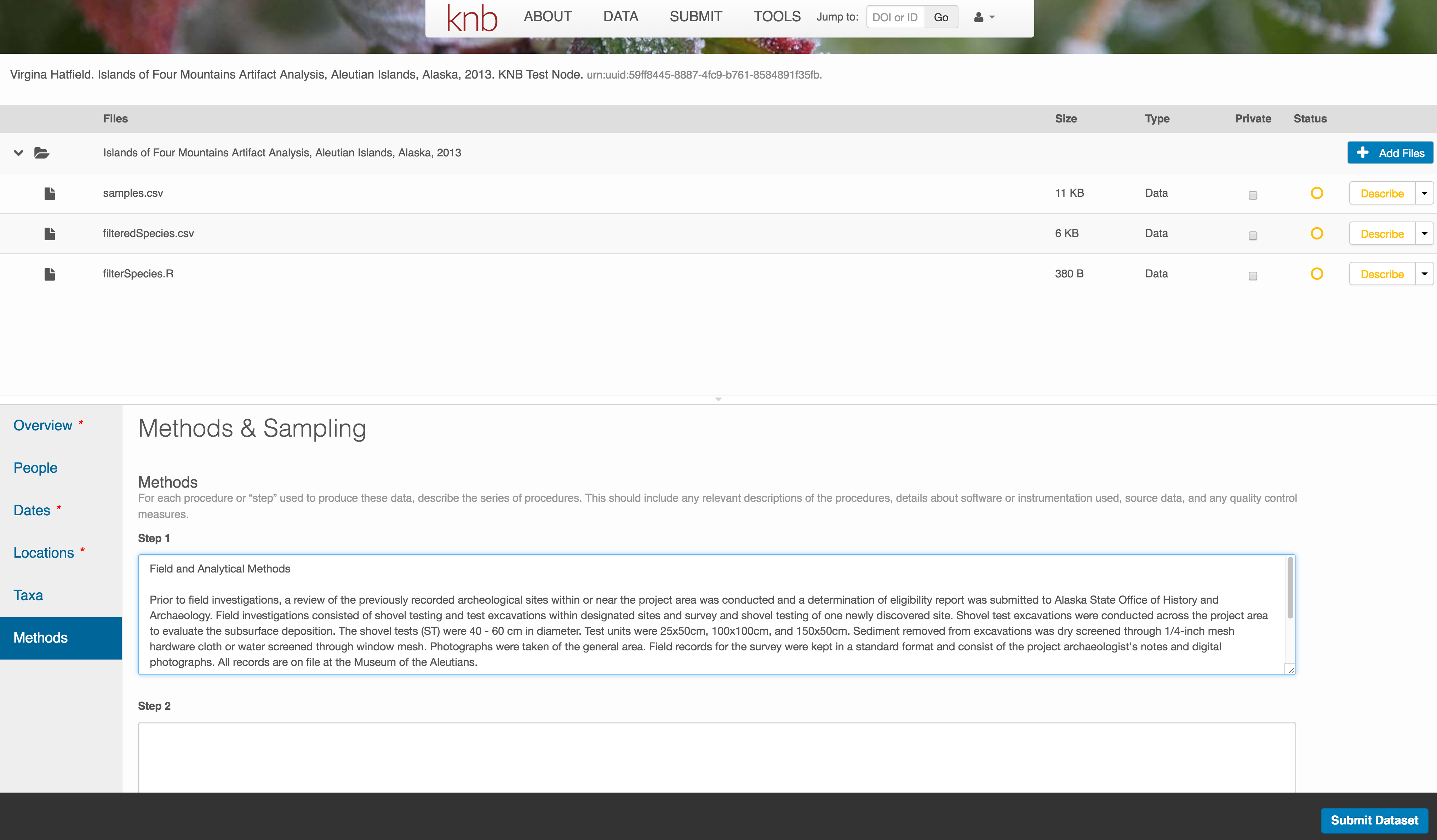

Methods

Methods are critical to accurate interpretation and reuse of your data. The editor allows you to add multiple methods sections, including details of sampling methods, experimental design, quality assurance procedures, and computational techniques and software. Please be thorough in your methods sections, as they are fundamentally important to reuse of the data.

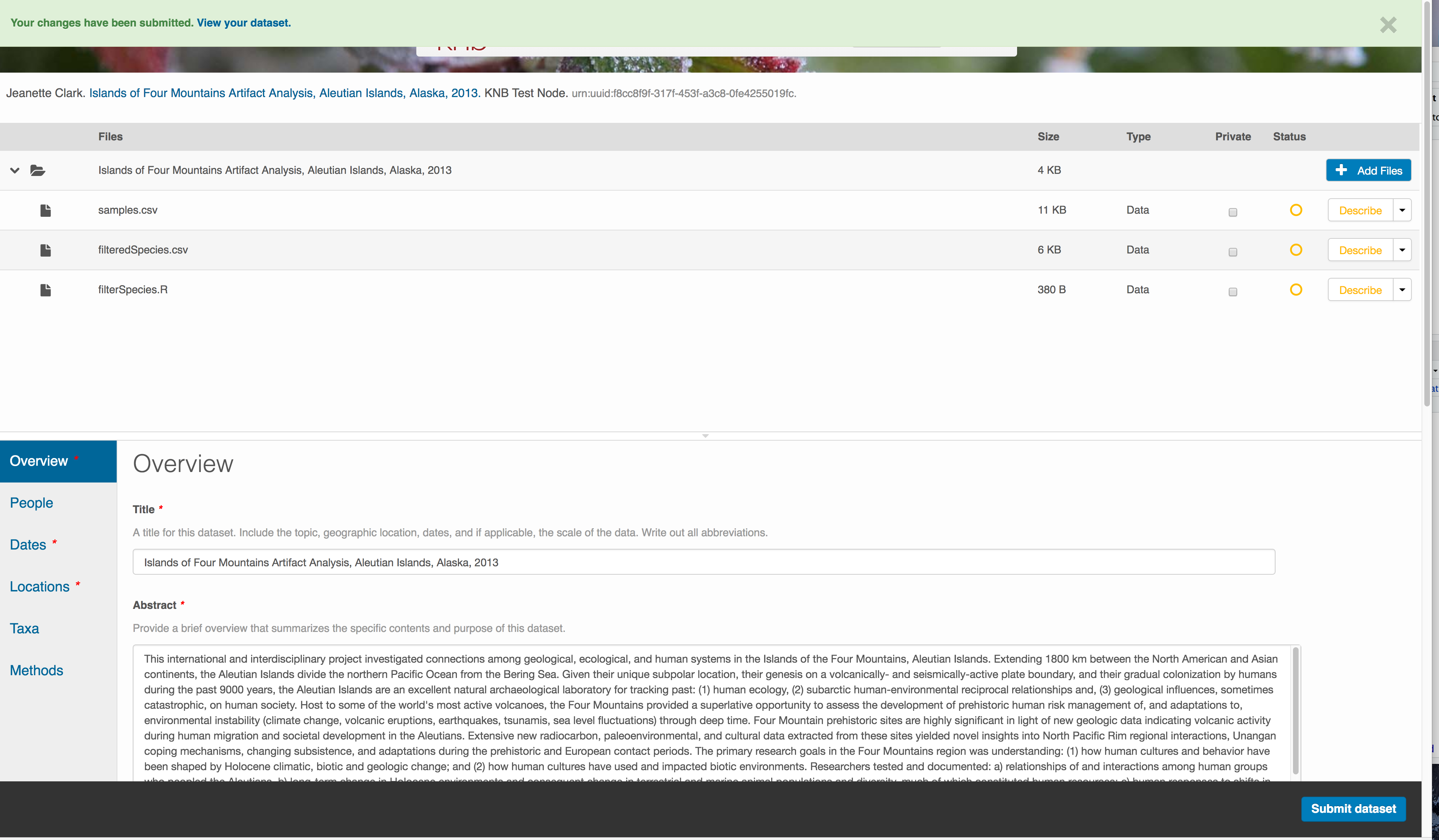

Save a first version with Submit

When finished, click the Submit Dataset button at the bottom.

If there are errors or missing fields, they will be highlighted.

Correct those, and then try submitting again. When you are successful, you should see a green banner with a link to the current dataset view. Click the X to close that banner if you want to continue editing metadata.

Success!

File and variable level metadata

The final major section of metadata concerns the structure and contents of your data files. In this case, provide the names and descriptions of the data contained in each file, as well as details of their internal structure.

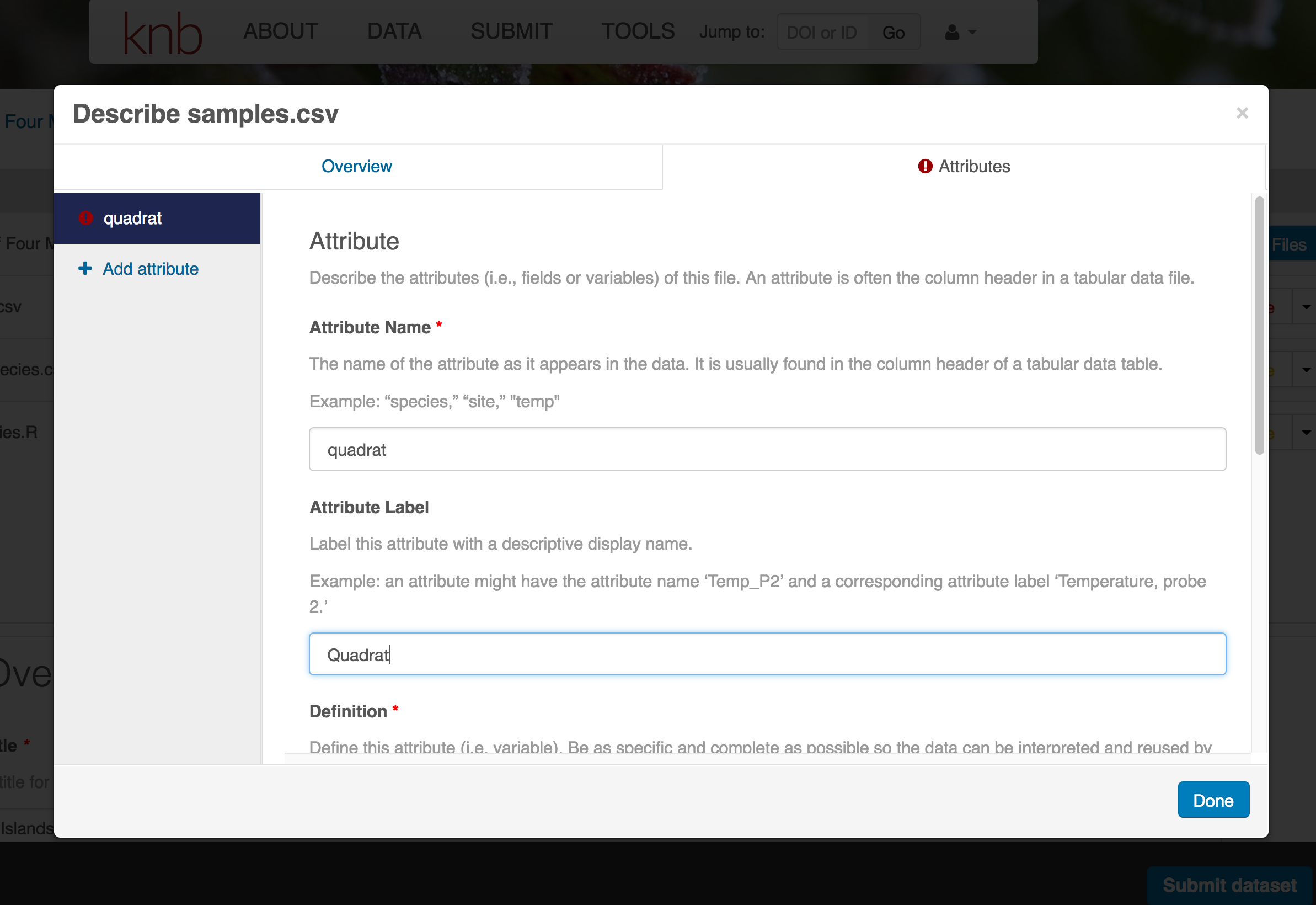

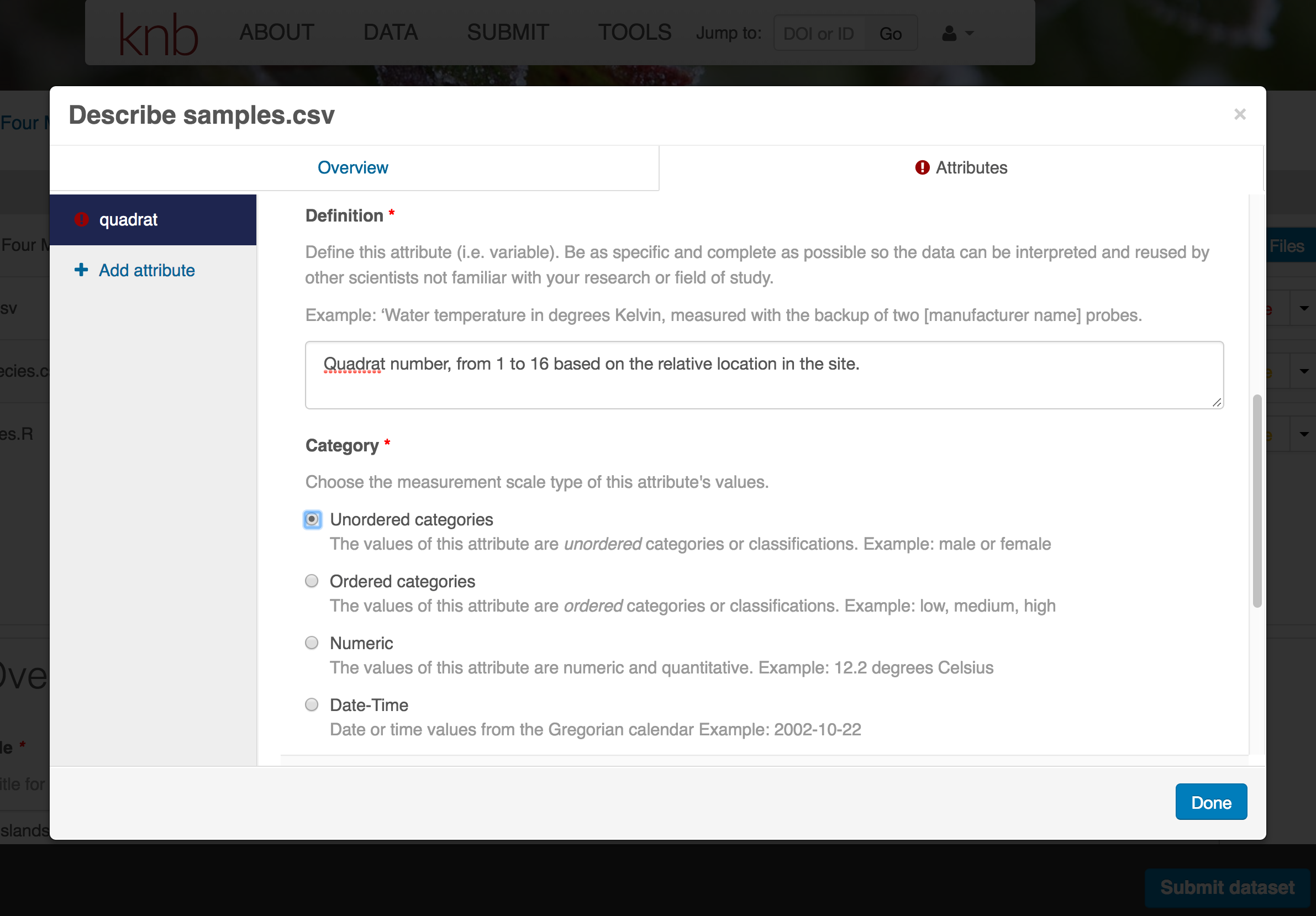

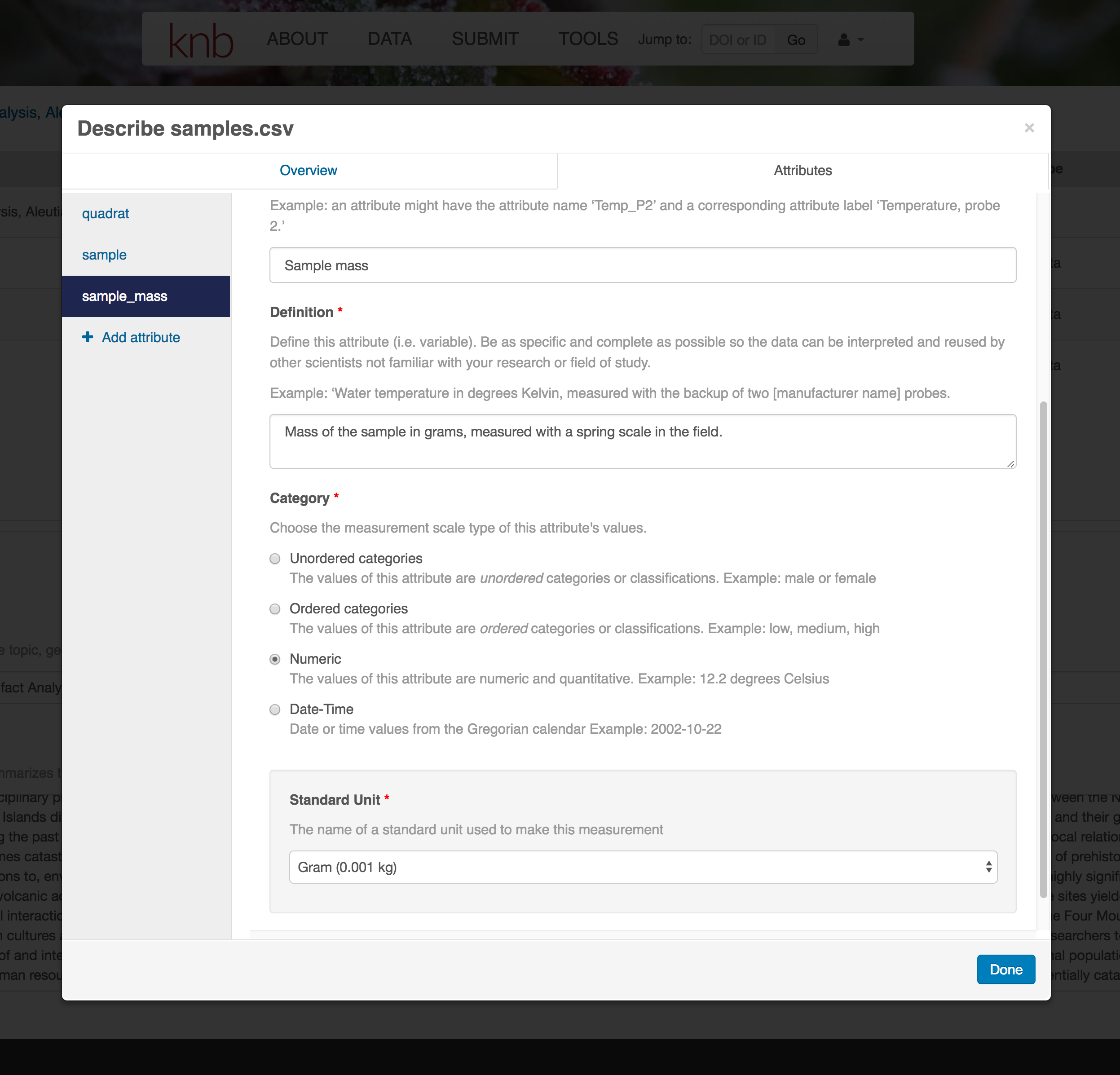

For example, for data tables, you’ll need the name, label, and definition of each variable in your file. Click the Describe button to access a dialog to enter this information.

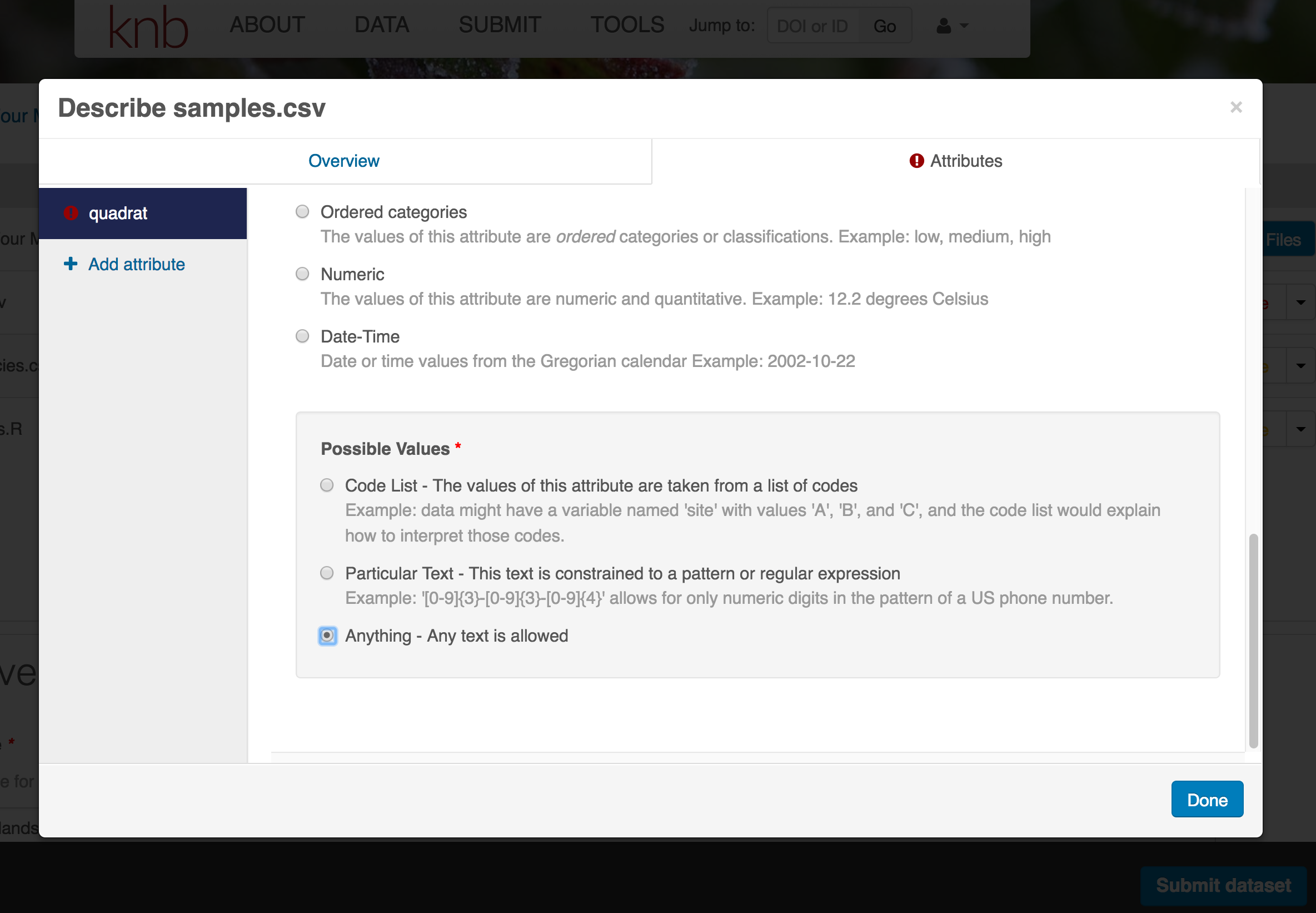

The Attributes tab is where you enter variable (aka attribute) information. In the case of tabular data (such as csv files) each column is an attribute, thus there should be one attribute defined for every column in your dataset. Attribute metadata includes:

- variable name (for programs)

- variable label (for display)

- variable definition (be specific)

- type of measurement

- units & code definitions

You’ll need to add these definitions for every variable (column) in the file. When done, click Done.
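The rule of one attribute per column can also be checked in R before you fill out the form. A minimal sketch follows. The data table, its column names, and the definitions are hypothetical, and the metadata column names are only loosely modeled on EML attribute fields; the web editor builds the real EML for you.

```r
# Hypothetical data table with two columns
species_counts <- data.frame(
  site_id = c("S1", "S2"),
  count   = c(14L, 3L),
  stringsAsFactors = FALSE
)

# One row of attribute metadata per column (names loosely follow EML)
attribute_table <- data.frame(
  attributeName       = c("site_id", "count"),             # variable name (for programs)
  attributeLabel      = c("Site ID", "Individual count"),  # variable label (for display)
  attributeDefinition = c("Unique identifier of the sampling site",
                          "Number of individuals counted at the site"),
  measurementScale    = c("nominal", "ratio"),             # type of measurement
  unit                = c(NA, "dimensionless"),            # units (NA for non-numeric)
  stringsAsFactors = FALSE
)

# Every column in the data needs exactly one attribute definition
stopifnot(
  nrow(attribute_table) == ncol(species_counts),
  setequal(attribute_table$attributeName, names(species_counts))
)
attribute_table
```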

Now the list of data files will show a green checkbox indicating that you have fully described that file’s internal structure. Proceed with the other CSV files, and then click Submit Dataset to save all of these changes.

Note that attribute information is not relevant for data files that do not contain variables, such as the R script in this example. Other examples of data files that might not need attributes are images, pdfs, and non-tabular text documents (such as readme files). The yellow circle in the editor indicates that attributes have not been filled out for a data file, and serves as a warning that they might be needed, depending on the file.

After you get the green success message, you can visit your dataset and review all of the information that you provided. If you find any errors, simply click Edit again to make changes.

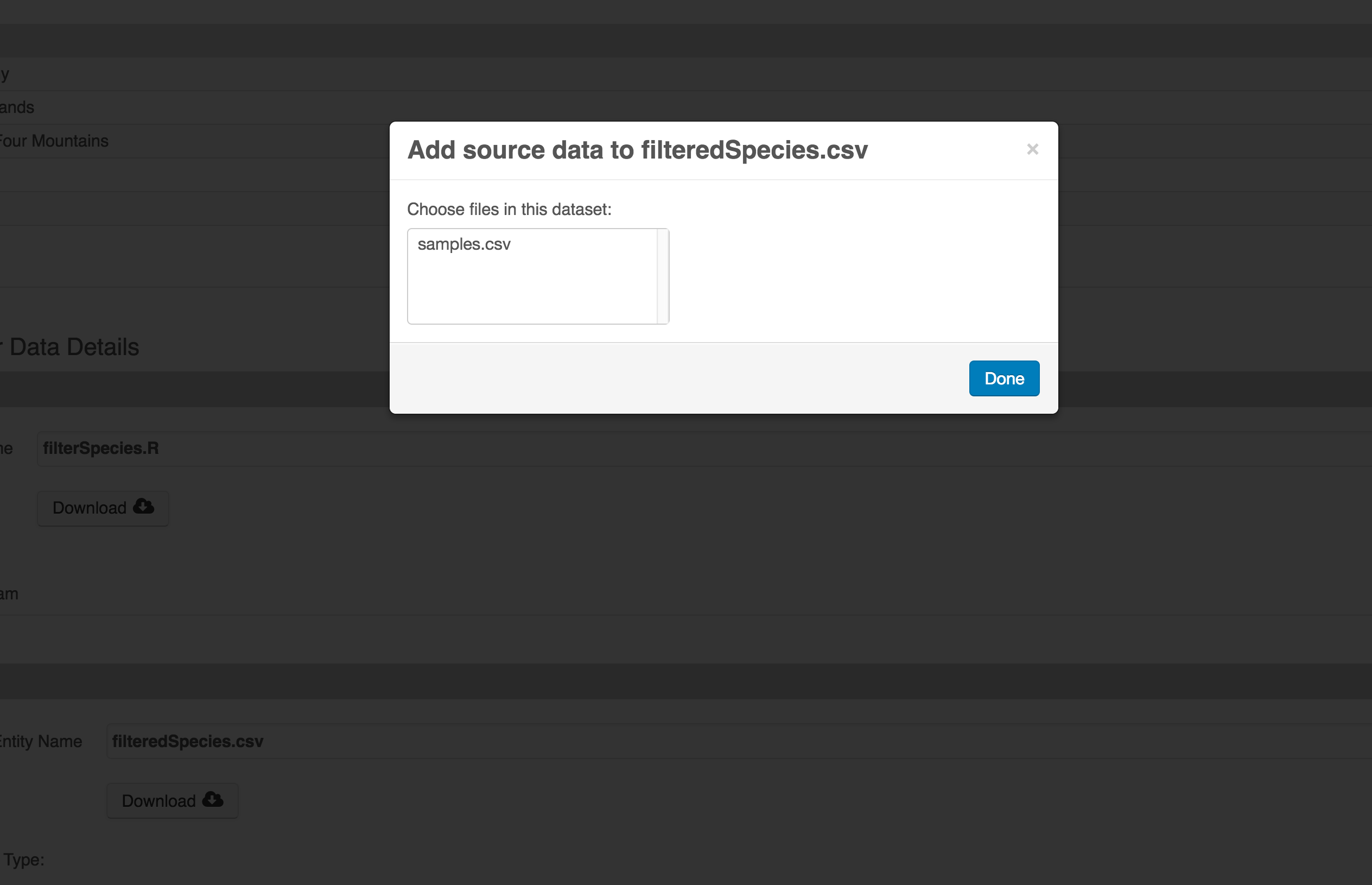

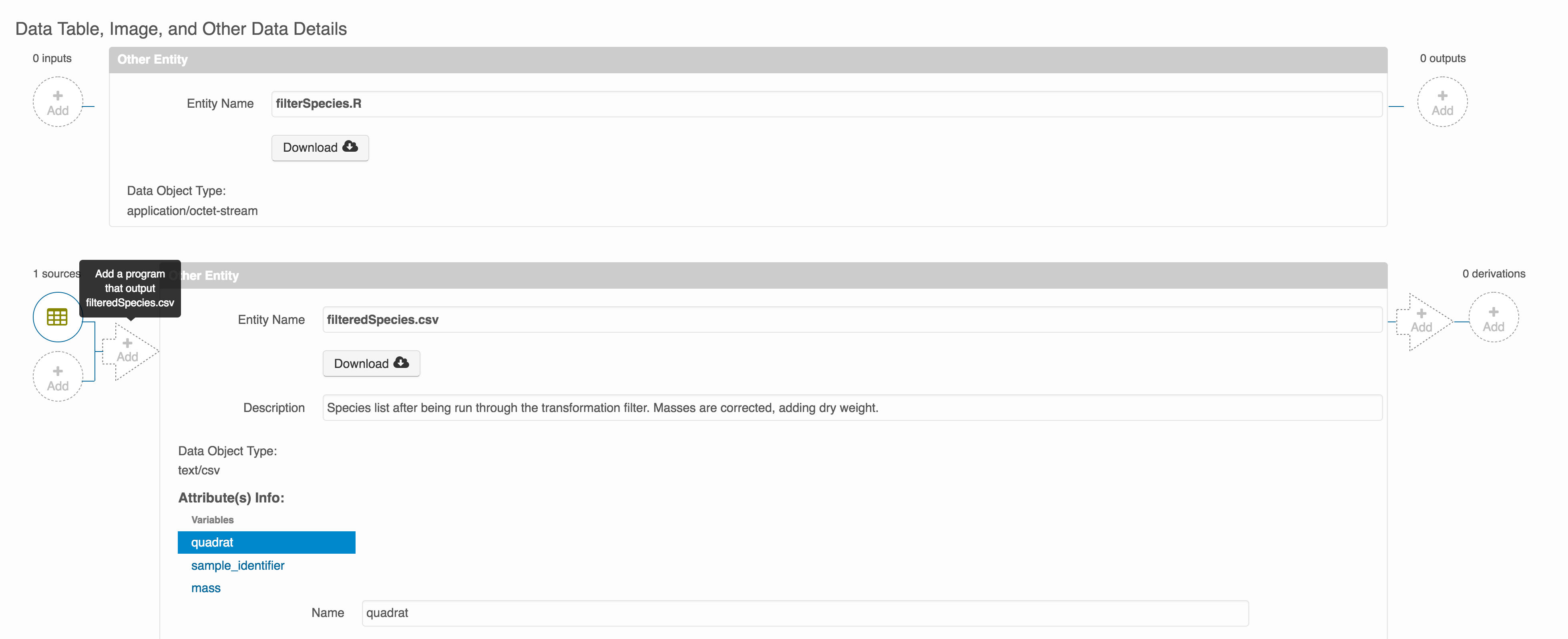

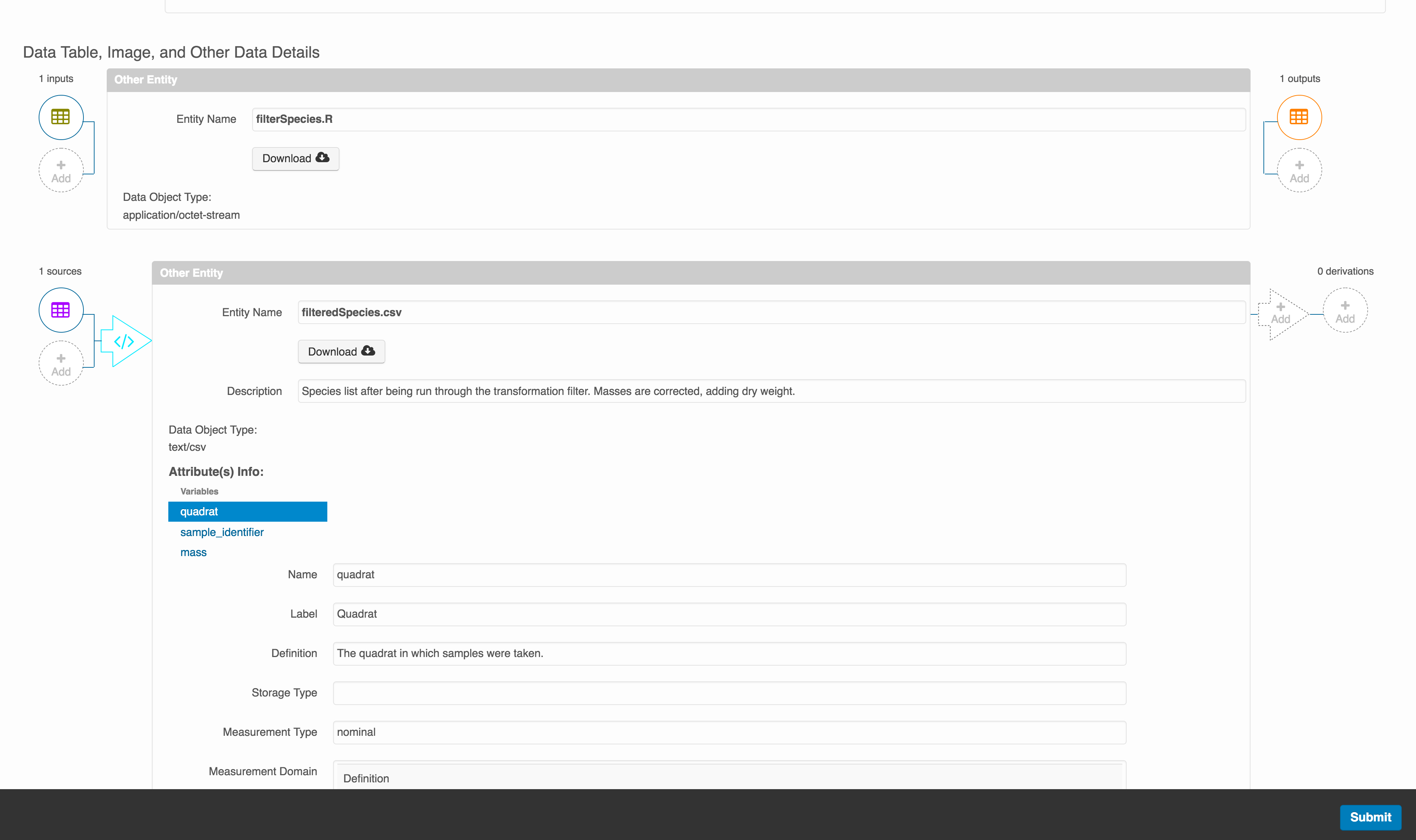

Add workflow provenance

Understanding the relationships between files in a package is critically important, especially as the number of files grows. Raw data are transformed and integrated to produce derived data, which are often then used in analysis and visualization code to produce final outputs. In DataONE, we support structured descriptions of these relationships, so one can see the flow of data from raw to derived data to outputs.
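Conceptually, provenance is a graph whose edges link inputs, scripts, and outputs, loosely mirroring the W3C PROV ideas of an activity that "used" an input and an output that "was generated by" an activity. A minimal sketch in R, where `raw_species.csv` and `filter_species.R` are hypothetical placeholder names and `upstream` is our own illustrative helper:

```r
# Edges point from an input to whatever consumed or produced it (hypothetical names)
prov_edges <- data.frame(
  from = c("raw_species.csv", "filter_species.R"),
  to   = c("filter_species.R", "filteredSpecies.csv"),
  stringsAsFactors = FALSE
)

# Walk the graph backwards to list everything a file was derived from
upstream <- function(file, edges) {
  parents <- edges$from[edges$to == file]
  unique(c(parents, unlist(lapply(parents, upstream, edges = edges))))
}

upstream("filteredSpecies.csv", prov_edges)
```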

You add provenance by navigating to the data table descriptions and selecting the Add buttons to link the data and scripts that were used in your computational workflow. On the left side, select the Add circle to add an input data source to the filteredSpecies.csv file. This starts building the provenance graph to explain the origin and history of each data object.

The linkage to the source dataset should appear.

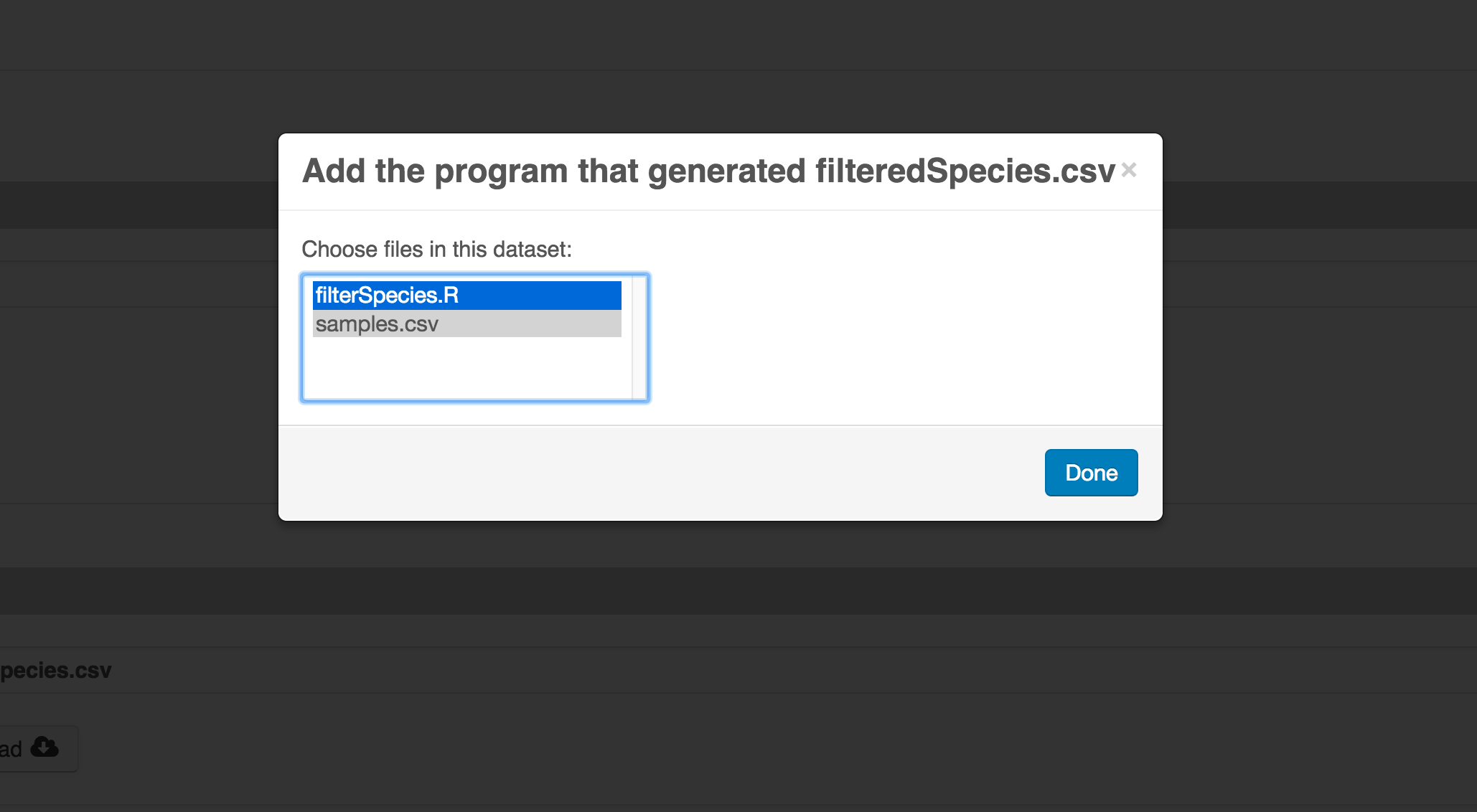

Then you can add the link to the source code that handled the conversion between the data files by clicking on the Add arrow and selecting the R script:

Select the R script and click “Done.”

The diagram now shows the relationships among the data files and the R script, so click Submit to save another version of the package.

Et voilà! A beautifully preserved data package!

5.5 Introduction to R

5.5.1 Learning Objectives

In this lesson we will:

- get oriented to the RStudio interface

- work with R in the console

- be introduced to built-in R functions

- learn to use the help pages

5.5.2 Introduction and Motivation

There is a vibrant community out there that is collectively developing increasingly easy to use and powerful open source programming tools. The changing landscape of programming is making learning how to code easier than it ever has been. Incorporating programming into analysis workflows not only makes science more efficient, but also more computationally reproducible. In this course, we will use the programming language R, and the accompanying integrated development environment (IDE) RStudio. R is a great language to learn for data-oriented programming because it is widely adopted, user-friendly, and (most importantly) open source!

So what is the difference between R and RStudio? Here is an analogy to start us off. If you were a chef, R would be a knife. You have food to prepare, and the knife is one of the tools that you’ll use to accomplish your task.

And if R were a knife, RStudio would be the kitchen. RStudio provides a place to do your work! A kitchen brings together other tools, communication, and community, all of which make your life as a chef easier. RStudio makes your life as a researcher easier by bringing together other tools you need to do your work efficiently, like a file browser, data viewer, help pages, terminal, community, support; the list goes on. So it’s not just the infrastructure (the user interface or IDE), although it is a great way to learn and interact with your variables and files, and to interact directly with git. It’s also data science philosophy, R packages, community, and more. So although you can prepare food without a kitchen and we could learn R without RStudio, that’s not what we’re going to do. We are going to take advantage of the great RStudio support, and learn R and RStudio together.

Something else to start us off is to mention that you are learning a new language here. It’s an ongoing process, it takes time, you’ll make mistakes, it can be frustrating, but it will be overwhelmingly awesome in the long run. We all speak at least one language; it’s a similar process, really. And no matter how fluent you are, you’ll always be learning, you’ll be trying things in new contexts, learning words that mean the same as others, etc, just like everybody else. And just like any form of communication, there will be miscommunications that can be frustrating, but hands down we are all better off because of it.

While language is a familiar concept, programming languages are in a different context from spoken languages, but you will get to know this context with time. For example: you have a concept that there is a first meal of the day, and there is a name for that: in English it’s “breakfast”. So if you’re learning Spanish, you could expect there is a word for this concept of a first meal. (And you’d be right: ‘desayuno’.) We will get you to expect that programming languages also have words (called functions in R) for concepts as well. You’ll soon expect that there is a way to order values numerically. Or alphabetically. Or search for patterns in text. Or calculate the median. Or reorganize columns to rows. Or subset exactly what you want. We will help you increase your expectations and learn to ask for and find what you’re looking for.

5.5.2.1 Resources

This lesson is a combination of excellent lessons by others. Huge thanks to Julie Lowndes for writing most of this content and letting us build on her material, which in turn was built on Jenny Bryan’s materials. I definitely recommend reading through the original lessons and using them as reference:

Julie Lowndes’ Data Science Training for the Ocean Health Index

Jenny Bryan’s lectures from STAT545 at UBC

Here are some other resources that we like for learning R:

- Learn R in the console with swirl

- The Introduction to R lesson in Data Carpentry’s R for data analysis course

- The Stat 545 course materials

- The QCBS Introduction to R lesson (in French)

Other resources:

5.5.3 R at the console

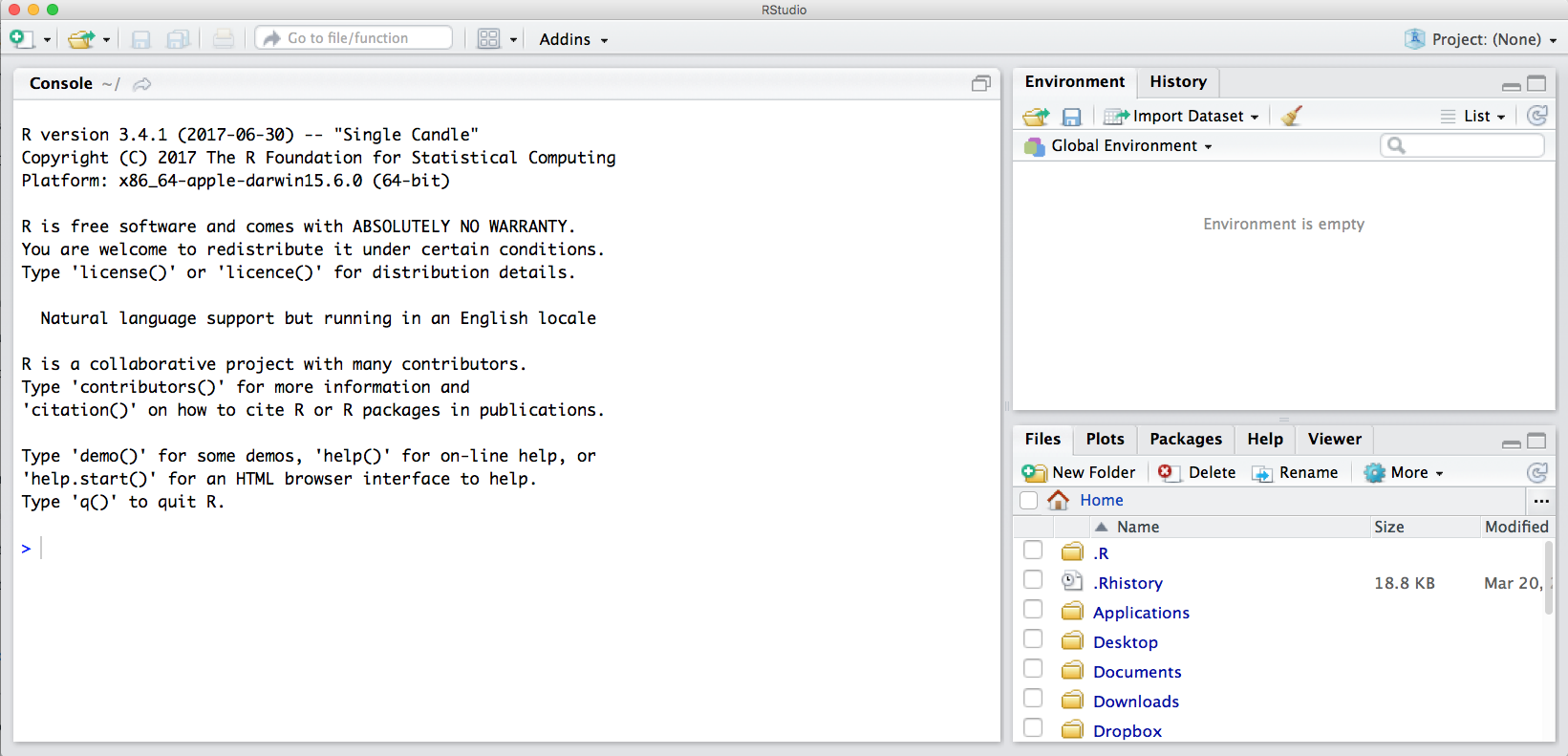

Launch RStudio/R.

Notice the default panes:

- Console (entire left)

- Environment/History (tabbed in upper right)

- Files/Plots/Packages/Help (tabbed in lower right)

FYI: you can change the default location of the panes, among many other things: Customizing RStudio.

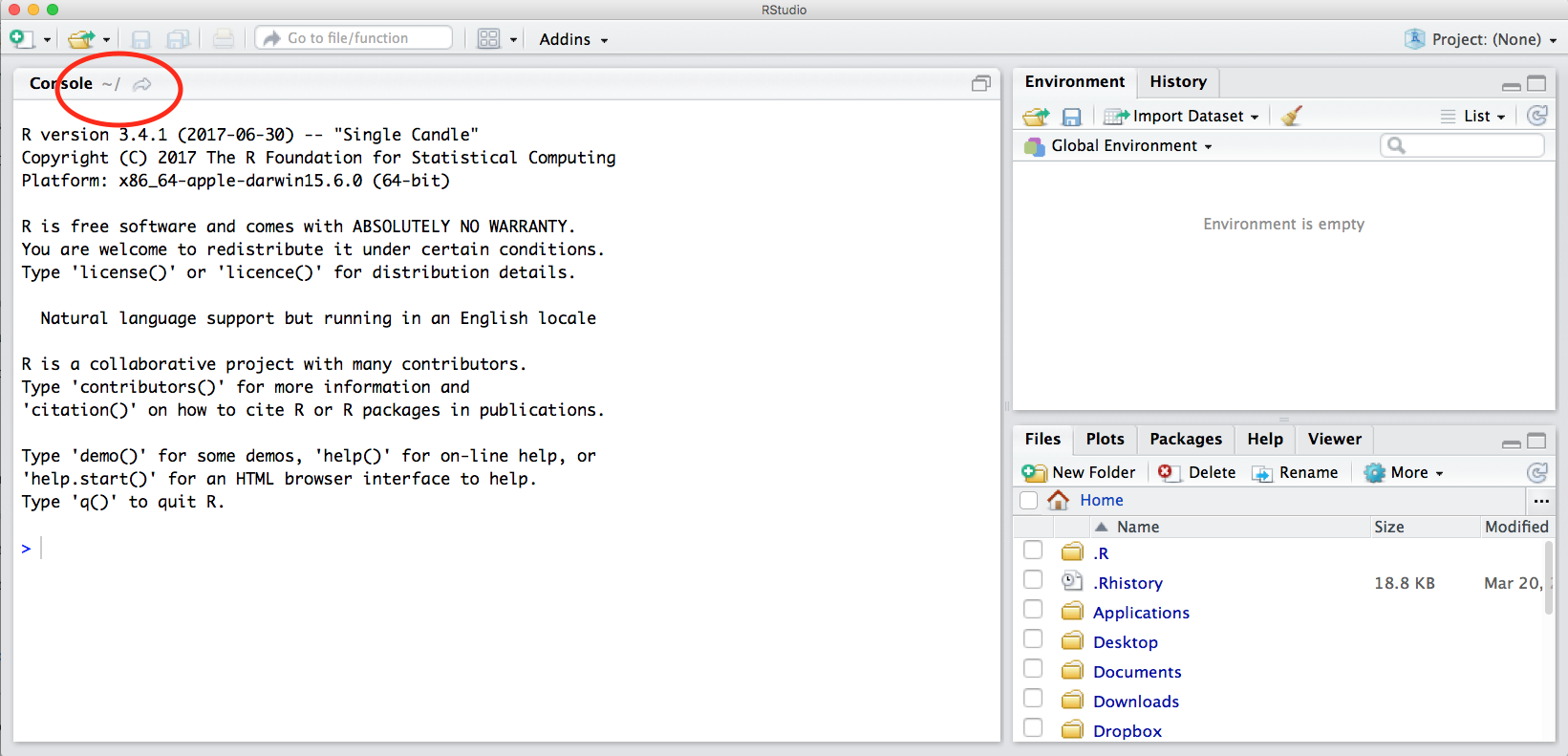

An important first question: where are we?

If you’ve just opened RStudio for the first time, you’ll be in your Home directory. This is noted by the ~/ at the top of the console. You can see too that the Files pane in the lower right shows what is in the Home directory where you are. You can navigate around within that Files pane and explore, but note that you won’t change where you are: even as you click through you’ll still be Home: ~/.

OK let’s go into the Console, where we interact with the live R process.

We use R to calculate things for us, so let’s do some simple math.
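For example (the particular numbers are our own choice; any expression that evaluates to 12 gives the output shown next):

```r
3 * 4   # multiplication uses the * operator
```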

## [1] 12

You can assign the value of that mathematical operation to a variable, or object, in R. You do this using the assignment operator, <-.

Make an assignment and then inspect the object you just created.
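A minimal version, using the object name x from the narration that follows:

```r
x <- 3 * 4   # assign the result to an object named x; nothing is printed
x            # typing the object's name prints its value
```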

## [1] 12

In my head I hear, e.g., “x gets 12”.

All R statements where you create objects – “assignments” – have this form: objectName <- value.

I’ll write it in the console with a hash #, which is how R marks a comment, so it won’t be evaluated.

```r
## objectName <- value

## This is also how you write notes in your code to explain what you are doing.
```

Object names cannot start with a digit and cannot contain certain other characters such as a comma or a space. You will be wise to adopt a convention for demarcating words in names.
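A few common conventions, shown with illustrative names of our own (pick one and use it consistently):

```r
i_use_snake_case         <- 1   # words separated by underscores
other.people.use.periods <- 2   # words separated by periods
evenOthersUseCamelCase   <- 3   # words marked by capital letters
```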

Make an assignment
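The object name and value below are inferred from the object listing and the 2.5 output that appear later in this lesson:

```r
this_is_a_really_long_name <- 2.5   # a long, descriptive snake_case name
```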

To inspect this variable, instead of typing it, we can press the up arrow key to recall our command history, with the most recent commands first. Let’s do that, and then delete the assignment part of the command so that only the object name is left:

## [1] 2.5

Another way to inspect this variable is to begin typing this_… and RStudio will automagically suggest completions that you can select by hitting the Tab key; then press Return.

One more:
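The name science_rocks matches the object listing later in this lesson; the text value is our own illustrative choice:

```r
science_rocks <- "yes it does!"   # the quotes make this a character string
science_rocks
```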

You can see that we can assign an object to be a word, not a number. In R, this is called a “string”, and R knows it’s a word and not a number because it has quotes " ". You can work with strings in your data in R pretty easily, thanks to the stringr and tidytext packages. We won’t talk about strings very much specifically, but know that R can handle text, and it can work with text and numbers together.

Strings and numbers lead us to an important concept in programming: that there are different “classes” or types of objects. An object is a variable, function, data structure, or method that you have written to your environment. You can see what objects you have loaded by looking in the “environment” pane in RStudio. The operations you can do with an object will depend on what type of object it is. This makes sense! Just like you wouldn’t do certain things with your car (like use it to eat soup), you won’t do certain operations with character objects (strings), for example.
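The base R function class() reports what kind of object you have. A quick sketch with a number, a string, and a function:

```r
class(2.5)              # "numeric"
class("yes it does!")   # "character"
class(mean)             # "function"
```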

Try running the following line in your console:

What happened? Why?
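One line that triggers this kind of error (an illustrative choice on our part) is multiplying a character string by a number. Typed bare at the console, it stops with an error; the sketch below wraps it in tryCatch() so the message can be inspected instead:

```r
# Arithmetic is not defined for character strings, so this expression fails
msg <- tryCatch(
  "Hello world!" * 3,
  error = function(e) conditionMessage(e)
)
msg   # "non-numeric argument to binary operator"
```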

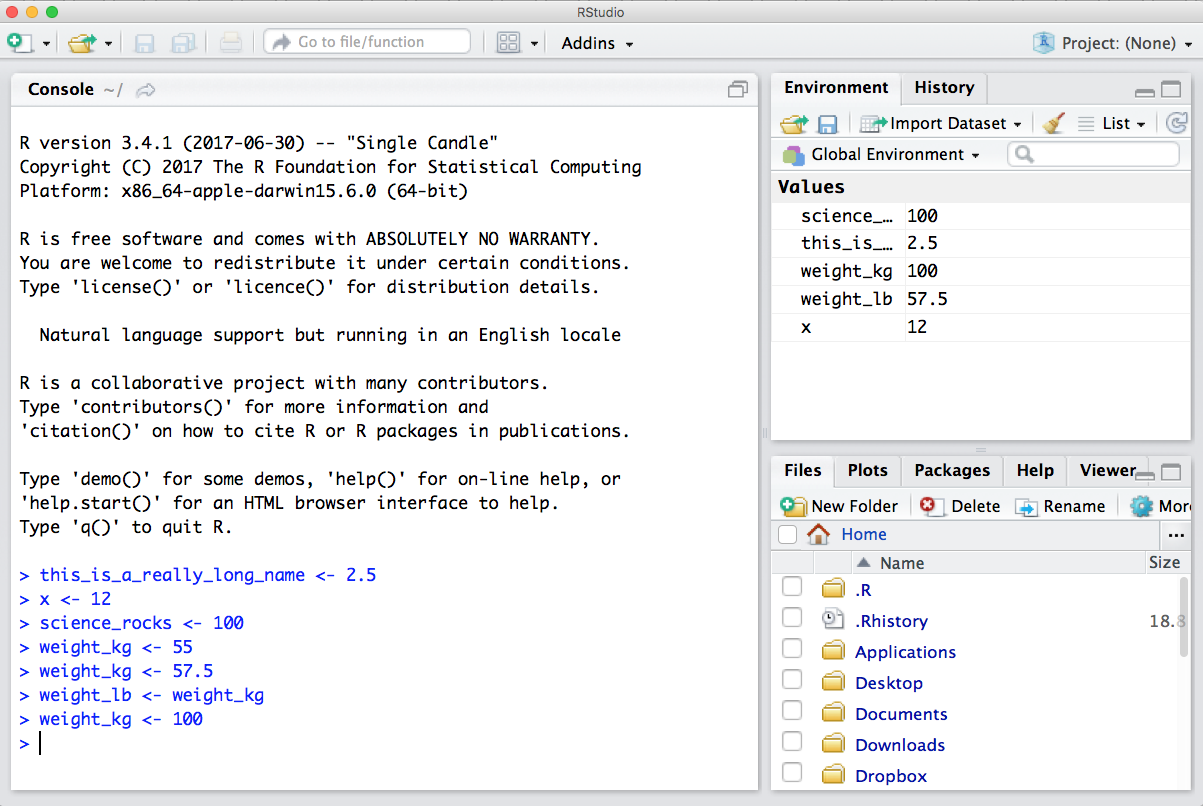

You may have noticed that when assigning a value to an object, R does not print anything. You can force R to print the value by using parentheses or by typing the object name:

```r
weight_kg <- 55    # doesn't print anything
(weight_kg <- 55)  # but putting parentheses around the call prints the value of `weight_kg`
weight_kg          # and so does typing the name of the object
```

## [1] 55
## [1] 55

Now that R has weight_kg in memory, we can do arithmetic with it. For instance, we may want to convert this weight into pounds (weight in pounds is 2.2 times the weight in kg):
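A minimal sketch of that conversion, restating the assignment so the snippet runs on its own:

```r
weight_kg <- 55   # as assigned above
2.2 * weight_kg   # weight in pounds
```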

## [1] 121

We can also change a variable’s value by assigning it a new one:
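For example, reassigning weight_kg (the value 57.5 is inferred from the 126.5 output that follows, since 126.5 / 2.2 = 57.5):

```r
weight_kg <- 57.5   # overwrite the previous value
2.2 * weight_kg     # recompute the weight in pounds
```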

## [1] 126.5

Note that assigning a value to one variable does not change the values of other variables. For example, let’s store the animal’s weight in pounds in a new variable, weight_lb:

and then change weight_kg to 100.
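Those two steps as a sketch, restating the earlier value of weight_kg so the snippet runs on its own:

```r
weight_kg <- 57.5              # value from the previous step
weight_lb <- 2.2 * weight_kg   # store the weight in pounds in a new object
weight_kg <- 100               # now change weight_kg to 100
```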

What do you think is the current content of the object weight_lb? 126.5 or 220? Why?

You can also store more than one value in a single object. Storing a series of weights in a single object is a convenient way to perform the same operation on multiple values at the same time. One way to create such an object is the function c(), which stands for combine or concatenate.

Here we will create a vector of weights in kilograms, and convert them to pounds, saving the weight in pounds as a new object.
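A sketch using c(); the three values match the output that follows:

```r
weight_kg <- c(55, 25, 12)     # combine three weights into one vector
weight_kg
weight_lb <- weight_kg * 2.2   # the multiplication applies to every element
weight_lb
```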

## [1] 55 25 12
## [1] 121.0 55.0 26.4

5.5.3.1 Error messages are your friends

Implicit contract with the computer/scripting language: the computer will do tedious computation for you. In return, you will be completely precise in your instructions. Typos matter. Case matters. Pay attention to how you type.

Remember that this is a language, not unlike English! There are times you aren’t understood; it’s going to happen. There are different ways this can happen. Sometimes you’ll get an error. This is like someone saying ‘What?’ or ‘Pardon?’. Error messages can also be more useful, like when they say ‘I didn’t understand this specific part of what you said, I was expecting something else’. That is a great type of error message. Error messages are your friend. Google them (copy-and-paste!) to figure out what they mean.

And also know that there are errors that can creep in more subtly, without an error message right away, when you are giving information that is understood, but not in the way you meant. Like if I’m telling a story about tables and you’re picturing where you eat breakfast and I’m talking about data. This can leave me thinking I’ve gotten something across that the listener (or R) interpreted very differently. And as I continue telling my story you get more and more confused… So write clean code and check your work as you go to minimize these circumstances!

5.5.3.2 Logical operators and expressions

A moment about logical operators and expressions. We can ask questions about the objects we just made.

- == means ‘is equal to’

- != means ‘is not equal to’

- < means ‘is less than’

- > means ‘is greater than’

- <= means ‘is less than or equal to’

- >= means ‘is greater than or equal to’
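Applied to the weight_kg vector c(55, 25, 12), comparisons like the following (chosen by us to be consistent with the output that follows) return one TRUE or FALSE per element:

```r
weight_kg <- c(55, 25, 12)
weight_kg == 2    # FALSE FALSE FALSE
weight_kg >= 30   # TRUE FALSE FALSE
weight_kg != 5    # TRUE TRUE TRUE
```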

## [1] FALSE FALSE FALSE
## [1] TRUE FALSE FALSE
## [1] TRUE TRUE TRUE

Shortcuts

You will make lots of assignments, and the operator <- is a pain to type. Don’t be lazy and use =, although it would work, because it will just sow confusion later. Instead, utilize RStudio’s keyboard shortcut: Alt + - (the minus sign). Notice that RStudio automagically surrounds <- with spaces, which demonstrates a useful code formatting practice. Code is miserable to read on a good day. Give your eyes a break and use spaces. RStudio offers many handy keyboard shortcuts. Also, Alt+Shift+K brings up a keyboard shortcut reference card.

5.5.3.3 Clearing the environment

Now look at the objects in your environment (workspace) – in the upper right pane. The workspace is where user-defined objects accumulate.

You can also get a listing of these objects with a few different R commands:
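Two base R functions that do this; objects() is another name for ls():

```r
ls()        # names of the objects in the current environment
objects()   # same result as ls()
```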

## [1] "science_rocks"
## [2] "this_is_a_really_long_name"
## [3] "weight_kg"
## [4] "weight_lb"
## [5] "x"

## [1] "science_rocks"
## [2] "this_is_a_really_long_name"
## [3] "weight_kg"
## [4] "weight_lb"
## [5] "x"

If you want to remove the object named weight_kg, you can do this:
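In code (the first line just recreates the object so the snippet runs on its own):

```r
weight_kg <- c(55, 25, 12)   # make sure the object exists
rm(weight_kg)                # remove it from the environment
exists("weight_kg")          # FALSE once it has been removed
```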

To remove everything:
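Passing the full listing from ls() to rm() clears the current environment:

```r
rm(list = ls())   # remove every object in the current environment
length(ls())      # 0
```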

or click the broom in RStudio’s Environment pane.

5.5.4 R functions, help pages

So far we’ve learned some of the basic syntax and concepts of R programming, and how to navigate RStudio, but we haven’t done any complicated or interesting programming processes yet. This is where functions come in!

A function is a way to group a set of commands together to undertake a task in a reusable way. When a function is executed, it produces a return value. We often say that we are “calling” a function when it is executed. Functions can be user defined and saved to an object using the assignment operator, so you can write whatever functions you need, but R also has a mind-blowing collection of built-in functions ready to use. To start, we will be using some built in R functions.

All functions are called using the same syntax: function name with parentheses around what the function needs in order to do what it was built to do. The pieces of information that the function needs to do its job are called arguments. So the syntax will look something like: result_value <- function_name(argument1 = value1, argument2 = value2, ...).
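As an illustration of that syntax, here is a small user-defined function (the name kg_to_lb and its arguments are our own, hypothetical example) called with named arguments:

```r
# Define a function: arguments in parentheses, body in braces; the last value is returned
kg_to_lb <- function(weight, factor = 2.2) {
  weight * factor
}

result_value <- kg_to_lb(weight = 55)            # factor falls back to its default, 2.2
result_value
kg_to_lb(weight = c(55, 25, 12), factor = 2.2)   # arguments can also be vectors
```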

5.5.4.1 A simple example

To take a very simple example, let’s look at the mean() function. As you might expect, this is a function that will take the mean of a set of numbers. Very convenient!

Let’s create our vector of weights again:

and use the mean function to calculate the mean weight.
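Both steps together:

```r
weight_kg <- c(55, 25, 12)   # recreate the vector of weights
mean(weight_kg)              # arithmetic mean of the three values
```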

## [1] 30.66667

5.5.4.2 Getting help

What if you know the name of the function that you want to use, but don’t know exactly how to use it? Thankfully RStudio provides an easy way to access the help documentation for functions.

To access the help page for mean, enter the following into your console:
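Either of these forms opens the help page; the second uses the help() function directly:

```r
?mean        # opens the help page for mean()
help(mean)   # equivalent call using help()
```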

The help pane will show up in the lower right hand corner of your RStudio.

The help page is broken down into sections:

- Description: An extended description of what the function does.

- Usage: The arguments of the function(s) and their default values.

- Arguments: An explanation of the data each argument is expecting.

- Details: Any important details to be aware of.

- Value: The data the function returns.

- See Also: Any related functions you might find useful.

- Examples: Some examples for how to use the function.

5.5.4.3 Your turn

Exercise: Look up the help file for a function that you know or expect to exist. Here are some ideas:

?getwd, ?plot, ?min, ?max, ?log

And there’s also help for when you only sort of remember the function name: double-questionmark:
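For example, searching installed help pages for a term (the search term "median" is our own choice); ?? is shorthand for help.search():

```r
??median                # fuzzy search of installed documentation
help.search("median")   # the same search written as a function call
```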

Not all functions have (or require) arguments:
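For example, date() takes no arguments at all:

```r
date()   # current date and time as a single character string
```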

## [1] "Tue Feb 16 11:53:44 2021"

5.6 Literate Analysis with RMarkdown

5.6.1 Learning Objectives

In this lesson we will:

- explore an example of RMarkdown as literate analysis

- learn markdown syntax

- write and run R code in RMarkdown

- build and knit an example document

5.6.2 Introduction and motivation

The concept of literate analysis dates to a 1984 article by Donald Knuth. In this article, Knuth proposes a reversal of the programming paradigm.

Instead of imagining that our main task is to instruct a computer what to do, let us concentrate rather on explaining to human beings what we want a computer to do.

If our aim is to make scientific research more transparent, the appeal of this paradigm reversal is immediately apparent. All too often, computational methods are written in such a way as to be borderline incomprehensible, even to the person who originally wrote the code! The reason for this is obvious: computers interpret information very differently than people do. By switching to a literate analysis model, you help enable human understanding of what the computer is doing. As Knuth describes, in the literate analysis model, the author is an “essayist” who chooses variable names carefully, explains what they mean, and introduces concepts in the analysis in a way that facilitates understanding.

RMarkdown is an excellent way to generate literate analysis and a reproducible workflow. RMarkdown is a combination of two things: R, the programming language, and markdown, a set of text formatting directives. In an R script, the language assumes that you are writing R code unless you specify that you are writing prose (using a comment, designated by #). The paradigm shift of literate analysis comes in the switch to RMarkdown, where instead of assuming you are writing code, RMarkdown assumes that you are writing prose unless you specify that you are writing code. This, along with the formatting provided by markdown, encourages the “essayist” to write understandable prose to accompany the code, explaining to the human beings reading the document what the author told the computer to do. This is in contrast to writing just R code, where the author tells the computer what to do with maybe a smattering of terse comments explaining the code to a reader.

Before we dive in deeper, let’s look at what literate analysis with RMarkdown can look like in practice. Here is an example of a real analysis workflow written using RMarkdown.

There are a few things to notice about this document, which assembles a set of similar data sources on salmon brood tables with different formatting into a single data source.

- It introduces the data sources using in-line images, links, interactive tables, and interactive maps.

- An example of data formatting from one source using R is shown.

- The document executes a set of formatting scripts in a directory to generate a single merged file.

- Some simple quality checks are performed (and their output shown) on the merged data.

- Simple analysis and plots are shown.

In addition to achieving literate analysis, this document also represents a reproducible analysis. Because the entire merging and quality control of the data is done using the R code in the RMarkdown, if a new data source and formatting script are added, the document can be run all at once with a single click to re-generate the quality control, plots, and analysis of the updated data.

RMarkdown is an amazing tool to use for collaborative research, so we will spend some time learning it well now, and use it through the rest of the course.

Setup

Open a new RMarkdown file using the following prompts:

File -> New File -> RMarkdown

A popup window will appear. You can just click the OK button here, or give your file a new title if you wish. Leave the output format as HTML.

5.6.3 Basic RMarkdown syntax

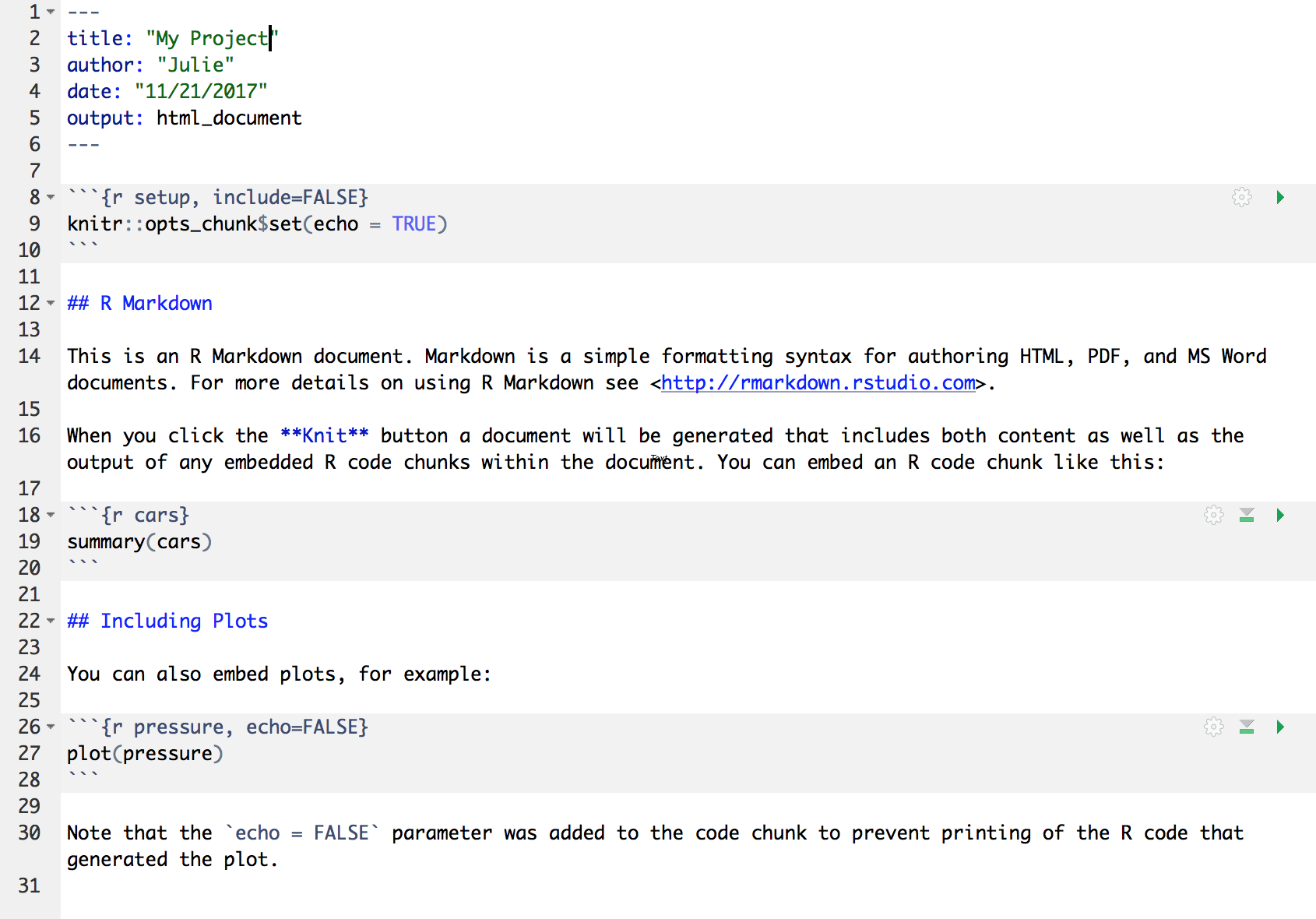

The first thing to notice is that opening a file reveals the fourth pane of RStudio, the source editor, which is essentially a text editor.

Let’s have a look at this file — it’s not blank; there is some initial text already provided for you. Notice a few things about it:

- There are white and grey sections. R code is in grey sections, and other text is in white.

Let’s go ahead and “Knit” the document by clicking the blue yarn at the top of the RMarkdown file. When you first click this button, RStudio will prompt you to save this file. Save it in the top level of your home directory on the server, and name it something that you will remember (like rmarkdown-intro.Rmd).

What differences do you notice between the two?

First, the knit process produced a second file (an HTML file) that popped up in a second window. You’ll also see this file in your directory with the same name as your Rmd, but with the .html extension. In its simplest form, RMarkdown files come in pairs: the RMarkdown file and its rendered version. In this case, we are knitting, or rendering, the file into HTML. You can also knit to PDF or Word files.

Notice how each grey R code chunk starts with 3 backticks followed by {r LABEL} and ends with 3 backticks. These chunks are evaluated and return the output text in the case of summary(cars) and the output plot in the case of plot(pressure). The label next to the letter r in the chunk header is a chunk label; it can help you navigate your RMarkdown document using the dropdown menu at the bottom of the editor pane.

Notice how the code plot(pressure) is not shown in the HTML output because of the R code chunk option echo = FALSE. RMarkdown has lots of chunk options, including ones that allow for code to be run but not shown (echo = FALSE), code to be shown but not run (eval = FALSE), code to be run, but results not shown (results = 'hide'), or any combination of those.
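To see these options side by side, the sketch below knits a tiny R Markdown snippet straight to Markdown with knitr::knit(), the package RMarkdown relies on to run chunks. It assumes knitr is installed (it comes with RMarkdown); the chunk labels and code are hypothetical.

```r
library(knitr)

# A tiny hypothetical R Markdown source, one chunk per option discussed above.
rmd_source <- c(
  "```{r shown-not-run, eval = FALSE}",
  "stop('this line is displayed but never executed')",
  "```",
  "",
  "```{r run-not-shown, echo = FALSE}",
  "hidden_value <- 6 * 7",
  "hidden_value",
  "```",
  "",
  "```{r results-hidden, results = 'hide'}",
  "print('you will not see this printed line')",
  "```"
)

# With `text =`, knit() returns the rendered Markdown instead of writing a file.
md <- paste(knit(text = rmd_source, quiet = TRUE), collapse = "\n")
cat(md)
```

In the printed Markdown, the eval = FALSE chunk shows its code with no result, the echo = FALSE chunk shows only its output (## [1] 42), and the results = 'hide' chunk shows only its code.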

Before we get too deeply into the R side, let’s talk about Markdown. Markdown is a formatting language for plain text, and there are only around 15 rules to know.

Notice the syntax in the document we just knitted:

- headers get rendered at multiple levels: #, ##

- bold: **word**

There are some good cheatsheets to get you started, and here is one built into RStudio: go to Help > Markdown Quick Reference.

Important: note that the hash symbol # is used differently in Markdown and in R:

- in R, a hash indicates a comment that will not be evaluated. You can use as many as you want: # is equivalent to ######. It’s just a matter of style.

- in Markdown, a hash indicates a level of a header. And the number you use matters: # is a “level one header”, meaning the biggest font and the top of the hierarchy. ### is a level three header, and will show up nested below the # and ## headers.

Challenge

- In Markdown, write some italic text, make a numbered list, and add a few sub-headers. Use the Markdown Quick Reference (in the menu bar: Help > Markdown Quick Reference).

- Re-knit your html file and observe your edits.

5.6.4 Code chunks

Next, do what I do every time I open a new RMarkdown: delete everything below the “setup chunk” (line 10). The setup chunk is the one that looks like this:

knitr::opts_chunk$set(echo = TRUE)

This is a very useful chunk that will set the default R chunk options for your entire document. I like keeping it in my document so that I can easily modify default chunk options based on the audience for my RMarkdown. For example, if I know my document is going to be a report for a non-technical audience, I might set echo = FALSE in my setup chunk, so that only the text, plots, and tables appear in the knitted document. The code, on the other hand, is still run, but doesn’t display in the final document.
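For example, the setup chunk of a report for a non-technical audience might contain just the line below. This is a sketch of that one choice; the chunk header (typically {r setup, include=FALSE} in the RStudio template) is not repeated here.

```r
# Document-wide default: run every chunk, but hide its code in the output.
knitr::opts_chunk$set(echo = FALSE)

# Individual chunks can still override the default, e.g. {r, echo = TRUE}.
knitr::opts_chunk$get("echo")
```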

Now let’s practice with some R chunks. You can create a new chunk in your RMarkdown in one of these ways:

- click “Insert > R” at the top of the editor pane

- type by hand ```{r} ```

- use the keyboard shortcut Command + Option + i (for windows, Ctrl + Alt + i)

Now, let’s write some R code.

x <- 4*3

x

Hitting return does not execute this command; remember, it’s just a text file. To execute it, we need to get what we typed in the R chunk (the grey R code) down into the console. How do we do it? There are several ways (let’s do each of them):

- copy-paste this line into the console (generally not recommended as a primary method)

- select the line (or simply put the cursor there), and click ‘Run’. This is available from:

  - the bar above the file (green arrow)

  - the menu bar: Code > Run Selected Line(s)

  - keyboard shortcut: command-return (Ctrl + Enter on Windows)

- click the green arrow at the right of the code chunk

Challenge

Add a few more commands to your code chunk. Execute them by trying the three ways above.

Question: What is the difference between running code using the green arrow in the chunk and the command-return keyboard shortcut?

5.6.5 Literate analysis practice

Now that we have gone over the basics, let’s go a little deeper by building a simple, small RMarkdown document that represents a literate analysis using real data.

Setup

- Navigate to the following dataset: https://doi.org/10.18739/A25T3FZ8X

- Download the file “BGchem2008data.csv”

- Click the “Upload” button in your RStudio server file browser.

- In the dialog box, make sure the destination directory is the same directory where your RMarkdown file is saved (likely your home directory), click “choose file,” and locate the BGchem2008data.csv file. Press “ok” to upload the file.

5.6.5.1 Developing code in RMarkdown

Experienced R users who have never used RMarkdown often struggle a bit in the transition to developing analysis in RMarkdown - which makes sense! It is switching the code paradigm to a new way of thinking. Rather than starting an R chunk and putting all of your code in that single chunk, here I describe what I think is a better way.

- Open a document and block out the high-level sections you know you’ll need to include using top level headers.

- Add bullet points for some high level pseudo-code steps you know you’ll need to take.

- Start filling in under each bullet point the code that accomplishes each step. As you write your code, transform your bullet points into prose, and add new bullet points or sections as needed.

For this mini-analysis, we will just have the following sections and code steps:

- Introduction

  - read in data

- Analysis

  - calculate summary statistics

  - calculate mean Redfield ratio

  - plot Redfield ratio

Challenge

Create the ‘outline’ of your document with the information above. Top level bullet points should be top level sections. The second level points should be a list within each section.

Next, write a sentence saying where your dataset came from, including a hyperlink, in the introduction section.

Hint: Navigate to Help > Markdown Quick Reference to look up the hyperlink syntax.

5.6.5.2 Read in the data

Now that we have outlined our document, we can start writing code! To read the data into our environment, we will use a function from the readr package. R packages are the building blocks of computational reproducibility in R. Each package contains a set of related functions that enable you to more easily do a task or set of tasks in R. There are thousands of community-maintained packages out there for just about every imaginable use of R - including many that you have probably never thought of!

To install a package, we use the syntax install.packages('package_name'). A package only needs to be installed once, so this code can be run directly in the console if needed. To use a package in our analysis, we need to load it into our environment using library(package_name). Even though we have installed it, we haven’t yet told our R session to access it. Because there are so many packages (many with conflicting namespaces), R cannot automatically load every single package you have installed. Instead, you load only the ones you need for a particular analysis. Loading the package is a key part of the reproducible aspect of our RMarkdown, so we will include it as an R chunk. It is generally good practice to include all of your library calls in a single, dedicated R chunk near the top of your document. This lets collaborators know what packages they might need to install before they start running your code.

You should have already installed readr as part of the setup for this course, so add a new R chunk below your setup chunk that calls the readr library, and run it. It should look like this:
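A minimal version of that package chunk, assuming readr is already installed, could be:

```r
# Packages used in this document. Install any missing ones once, from the
# console, with install.packages("readr") rather than from inside the Rmd.
library(readr)
```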

Now, below the introduction that you wrote, add a chunk that uses the read_csv function to read in your data file.

About RMarkdown paths

In computing, a path specifies the unique location of a file on the filesystem. A path can come in one of two forms: absolute or relative. Absolute paths start at the very top of your file system, and work their way down the directory tree to the file. Relative paths start at an arbitrary point in the file system. In R, this point is set by your working directory.
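The difference between the two forms can be sketched as below. The folder is a hypothetical temporary directory standing in for the one that holds your Rmd, and an empty placeholder file takes the place of the dataset.

```r
# Hypothetical project folder containing an empty placeholder file.
project_dir <- tempfile("project_")
dir.create(project_dir)
file.create(file.path(project_dir, "BGchem2008data.csv"))

# A relative path is resolved starting from the working directory.
old_wd <- setwd(project_dir)
relative_path <- "BGchem2008data.csv"
absolute_path <- normalizePath(relative_path)  # full path from the file system root
print(file.exists(relative_path))  # TRUE while the working directory is project_dir
setwd(old_wd)

# The absolute path keeps working no matter where the working directory points.
print(file.exists(absolute_path))
```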

RMarkdown has a special way of handling relative paths that can be very handy. When working in an RMarkdown document, R will set all paths relative to the location of the RMarkdown file. This way, you don’t have to worry about setting a working directory, or about editing absolute paths to match each colleague’s user name and folder structure. If your RMarkdown is stored near where the data it analyses are stored (good practice, generally), setting paths becomes much easier!

If you saved your “BGchem2008data.csv” data file in the same location as your Rmd, you can just write the following to read it in. The help page for this function (?read_csv, in the console) tells you that the first argument should be a pointer to the file. RStudio has some nice helpers for navigating paths: if you open quotes and press ‘tab’ with your cursor between the quotes, a popup menu will appear showing you some options.
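A sketch of that chunk follows, assuming readr is installed. So that it runs anywhere, it writes a small stand-in CSV with hypothetical values (not the actual measurements) to a temporary folder and reads that instead; with the real file next to your Rmd, the commented line is all you need. Reading the full dataset produces the longer column specification that follows.

```r
library(readr)

# In the lesson, the real file sits next to the Rmd, so this is enough:
#   bg_chem <- read_csv("BGchem2008data.csv")
# Stand-in file with hypothetical values, used only to keep this sketch runnable.
demo_file <- file.path(tempdir(), "BGchem2008data.csv")
writeLines(c("Date,Station,NO3,NO2,NH4,P",
             "2008-03-21,1,0.80,0.05,0.10,0.10",
             "2008-03-22,2,1.20,0.07,0.12,0.20"),
           demo_file)

bg_chem <- read_csv(demo_file)  # reports the column types it guessed
bg_chem
```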

Parsed with column specification:

cols(

Date = col_date(format = ""),

Time = col_datetime(format = ""),

Station = col_character(),

Latitude = col_double(),

Longitude = col_double(),

Target_Depth = col_double(),

CTD_Depth = col_double(),

CTD_Salinity = col_double(),

CTD_Temperature = col_double(),

Bottle_Salinity = col_double(),

d18O = col_double(),

Ba = col_double(),

Si = col_double(),

NO3 = col_double(),

NO2 = col_double(),

NH4 = col_double(),

P = col_double(),

TA = col_double(),

O2 = col_double()

)

If you run this line in your RMarkdown document, you should see the bg_chem object populate in your environment pane. It also spits out lots of text explaining what types the function parsed each column into. This text is important, and should be examined, but we might not want it in our final document.

Challenge

Use one of two methods to figure out how to suppress warning and message text in your chunk output:

- The gear icon in the chunk, next to the play button

- The RMarkdown quick reference

Aside

Why not use read.csv from base R?

We chose to show read_csv from the readr package for a few reasons. One is to introduce the concept of packages and show how to load them, but read_csv also has several advantages over read.csv:

- more reasonable function defaults (it never converts strings to factors, which read.csv did by default before R 4.0.0)

- smarter column type parsing, especially for dates

- it is much faster than read.csv, which is helpful for large files
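The date-parsing difference is easy to see for yourself. This is a minimal sketch assuming readr is installed; the three-line CSV is hypothetical and written to a temporary file.

```r
library(readr)

# A tiny hypothetical CSV written to a temporary file for the comparison.
csv_file <- tempfile(fileext = ".csv")
writeLines(c("Date,Station,NO3",
             "2008-03-21,A,0.8",
             "2008-03-22,B,1.2"), csv_file)

base_df  <- read.csv(csv_file)  # base R
readr_df <- read_csv(csv_file)  # readr

class(base_df$Date)   # "character" in R >= 4.0.0 (a factor in older versions)
class(readr_df$Date)  # "Date": read_csv recognizes ISO 8601 dates
```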

5.6.5.3 Calculate Summary Statistics

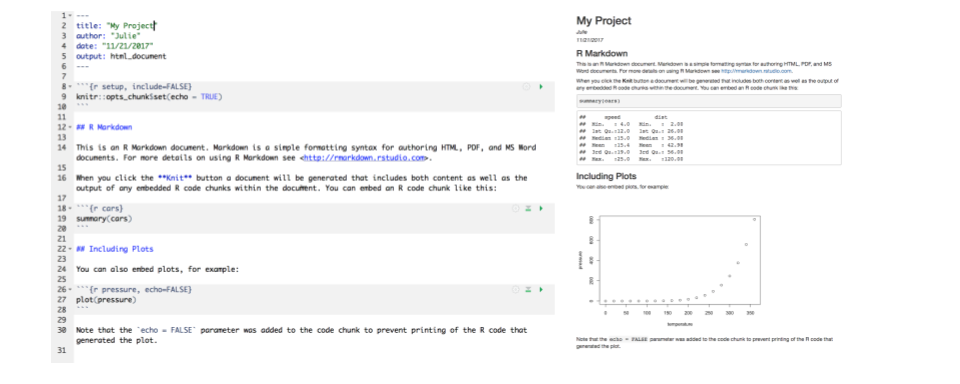

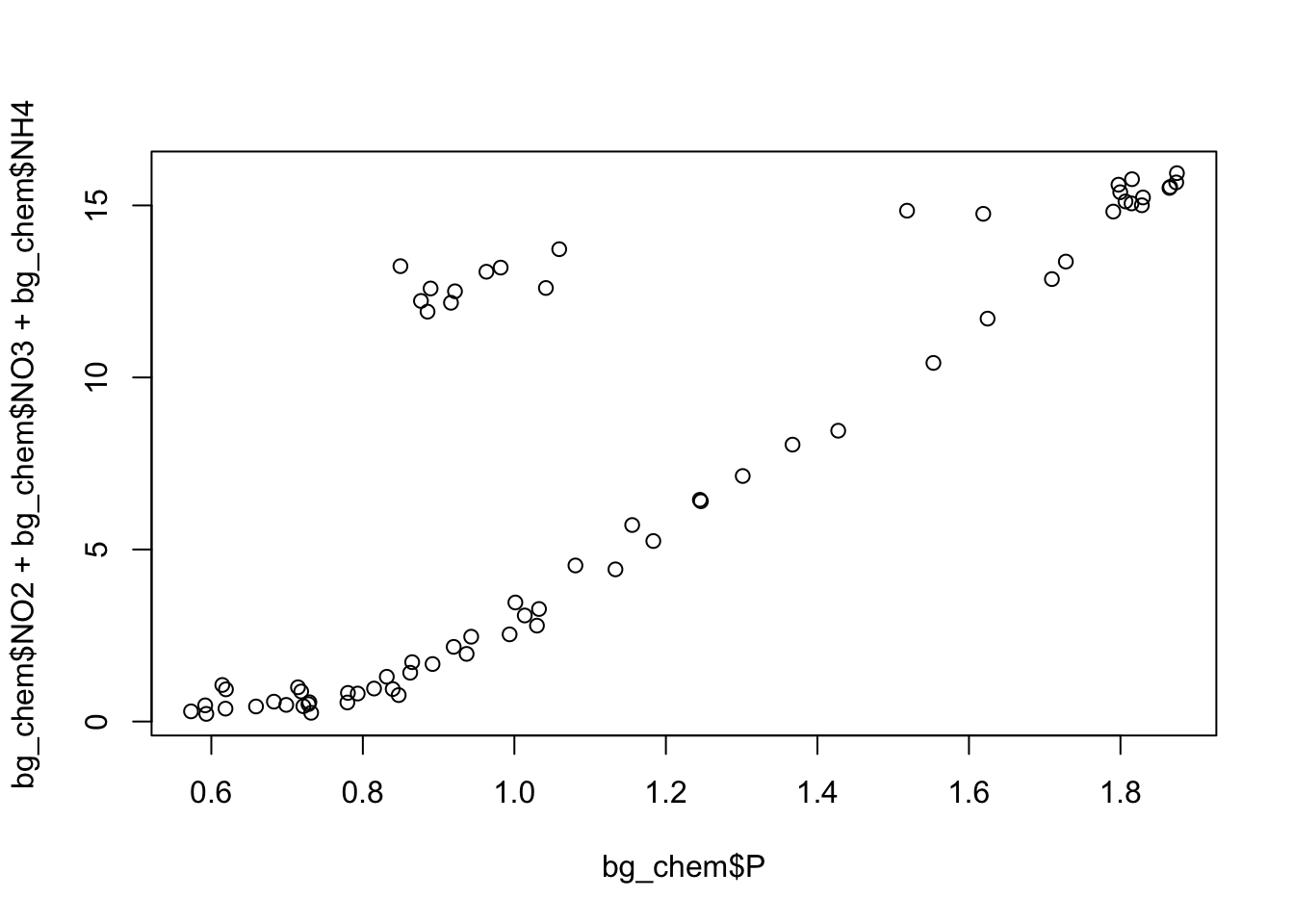

As our “analysis” we are going to calculate some very simple summary statistics and generate a single plot. In this dataset of oceanographic water samples, we will be examining the ratio of nitrogen to phosphate to see how closely the data match the Redfield ratio, which is the consistent 16:1 ratio of nitrogen to phosphorus atoms found in marine phytoplankton.

Under the appropriate bullet point in your analysis section, create a new R chunk, and use it to calculate the mean nitrate (NO3), nitrite (NO2), ammonium (NH4), and phosphorus (P) measured. Save these mean values as new variables with easily understandable names, and write a (brief) description of your operation using Markdown above the chunk.

nitrate <- mean(bg_chem$NO3)

nitrite <- mean(bg_chem$NO2)

amm <- mean(bg_chem$NH4)

phos <- mean(bg_chem$P)

In another chunk, use those variables to calculate the nitrogen:phosphate ratio (Redfield ratio).
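A minimal sketch of that ratio chunk follows. It assumes total inorganic nitrogen is the sum of nitrate, nitrite, and ammonium, and it builds a small hypothetical stand-in for bg_chem with made-up values so it runs on its own; with the real data you would drop the data.frame() line, and the resulting ratio will differ.

```r
# Hypothetical stand-in for bg_chem (made-up values, not the real dataset).
bg_chem <- data.frame(NO3 = c(0.80, 1.20, 1.00),
                      NO2 = c(0.05, 0.07, 0.06),
                      NH4 = c(0.10, 0.12, 0.14),
                      P   = c(0.10, 0.20, 0.15))

# Mean concentrations, as in the chunk above.
nitrate <- mean(bg_chem$NO3)
nitrite <- mean(bg_chem$NO2)
amm     <- mean(bg_chem$NH4)
phos    <- mean(bg_chem$P)

# N:P ratio of the means: total inorganic nitrogen divided by phosphate.
ratio <- (nitrate + nitrite + amm) / phos
round(ratio)
```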

You can access the ratio variable in your Markdown text by using R in-line in your text. The syntax to call R in-line (as opposed to in a chunk) is a single backtick `, the letter “r”, your simple R command (here we will use round(ratio) to print the calculated ratio), and a closing backtick `. So: `r round(ratio)`. This allows us to access the value stored in this variable in our explanatory text without resorting to the evaluate-copy-paste method so commonly used for this type of task. In your RMarkdown file, the text will look like this:

The Redfield ratio for this dataset is approximately `r round(ratio)`.

And the rendered text like this:

The Redfield ratio for this dataset is approximately 6.
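You can also check the in-line syntax without the Knit button by knitting a short snippet straight to Markdown with knitr::knit(). This is a minimal sketch assuming knitr is installed; the ratio value in it is hypothetical, chosen only so the rendered number is predictable.

```r
library(knitr)

# A hidden chunk defines the value, and the sentence below reads it in-line.
rmd_source <- c(
  "```{r, echo = FALSE}",
  "ratio <- 1.18 / 0.15  # hypothetical value",
  "```",
  "",
  "The Redfield ratio for this dataset is approximately `r round(ratio)`."
)

md <- paste(knit(text = rmd_source, quiet = TRUE), collapse = "\n")
cat(md)
```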

Finally, create a simple plot using base R that plots the ratio of the individual measurements, as opposed to looking at mean ratio.
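One way to do this is sketched below, assuming that plotting total inorganic nitrogen (NO3 + NO2 + NH4) against P for each sample is an acceptable reading of the exercise. The data frame is a hypothetical stand-in with made-up values, so with the real data you would drop it. The dashed 16:1 reference line is an added convenience, not part of the original exercise.

```r
# Hypothetical stand-in for bg_chem (made-up values, not the real dataset).
bg_chem <- data.frame(NO3 = c(0.80, 1.20, 1.00),
                      NO2 = c(0.05, 0.07, 0.06),
                      NH4 = c(0.10, 0.12, 0.14),
                      P   = c(0.10, 0.20, 0.15))

# Total inorganic nitrogen for each individual sample.
din <- bg_chem$NO3 + bg_chem$NO2 + bg_chem$NH4

# One point per sample; the dashed line marks where N:P would be exactly 16:1.
plot(bg_chem$P, din,
     xlab = "Phosphate (P)",
     ylab = "Inorganic nitrogen (NO3 + NO2 + NH4)",
     main = "Nitrogen vs. phosphate by sample")
abline(a = 0, b = 16, lty = 2)
```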

Challenge

Decide whether or not you want the plotting code above to show up in your knitted document along with the plot, and implement your decision as a chunk option.

“Knit” your RMarkdown document (by pressing the Knit button) to observe the results.

Aside

How do I decide when to make a new chunk?

Like many of life’s great questions, there is no clear-cut answer. My preference is to have one chunk per functional unit of analysis. This functional unit could be 50 lines of code or it could be 1 line, but typically it only does one “thing.” This could be reading in data, making a plot, or defining a function. It could also mean calculating a series of related summary statistics (as above). Ultimately the choice is one related to personal preference and style, but generally you should ensure that code is divided up such that it is easily explainable in a literate analysis as the code is run.

5.6.6 RMarkdown and environments

Let’s walk through an exercise with the document you built together to demonstrate how RMarkdown handles environments. We will be deliberately inducing some errors here for demonstration purposes.

First, follow these steps:

- Restart your R session (Session > Restart R)